Sliced Spotify Track Popularity

Jim Gruman

July 20, 2021

Last updated: 2021-10-11

Checks: 7 passed, 0 failed

Knit directory: myTidyTuesday/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it’s best to always run the code in an empty environment.

The command set.seed(20210907) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version 1be4dee. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/2021_07_20_sliced.Rmd) and HTML (docs/2021_07_20_sliced.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | 1be4dee | opus1993 | 2021-10-11 | adopt common color palette |

Season 1 Episode 8 features a challenge to predict the popularity of Spotify tracks. The evaluation metric for submissions in this competition is root mean squared error (RMSE).
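As a quick reference, RMSE is the square root of the average squared difference between the true and predicted values, so it is expressed in the same units as popularity. A minimal base R sketch, using made-up numbers rather than competition data (rmse_manual() is a hypothetical helper name):

```r
# RMSE: square root of the mean squared prediction error
rmse_manual <- function(truth, estimate) {
  sqrt(mean((truth - estimate)^2))
}

# Made-up example values (not from the competition data)
truth <- c(10, 20, 30)
estimate <- c(12, 18, 33)

# Misses of 2, 2 and 3 points give sqrt(17 / 3), about 2.38
rmse_manual(truth, estimate)
```

yardstick’s rmse(), used through metric_set(rmse) in this analysis, computes the same quantity from a data frame of truth and estimate columns.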

SLICED is like the TV Show Chopped but for data science. Competitors get a never-before-seen dataset and two hours to code a solution to a prediction challenge. Contestants get points for the best model plus bonus points for data visualization, votes from the audience, and more.

The audience is invited to participate as well. This file consists of my submissions with cleanup and commentary added.

To make the best use of the resources that we have, we will explore the data set for features to select those with the most predictive power, build a random forest to confirm the recipe, and then build one or more ensemble models. If there is time, we will craft some visuals for model explainability.

Let’s load up packages:

suppressPackageStartupMessages({
  library(tidyverse) # clean and transform rectangular data
  library(hrbrthemes) # plot theming
  library(corrr) # visualize numeric correlations
  library(tidymodels) # machine learning tools
  library(finetune) # racing methods for accelerating hyperparameter tuning
  library(textrecipes) # ml tools for text models
  library(themis) # ml prep tools for handling unbalanced datasets
  library(baguette) # ml tools for bagged decision tree models
  library(vip) # interpret model performance
  library(SHAPforxgboost) # model explainability
  library(DALEXtra) # for model explainability
})

source(here::here("code", "_common.R"),
  verbose = FALSE,
  local = knitr::knit_global()
)

ggplot2::theme_set(theme_jim(base_size = 12))

# create a data directory
data_dir <- here::here("data", Sys.Date())
if (!file.exists(data_dir)) dir.create(data_dir)

# set a competition metric
mset <- metric_set(rmse)

# set the competition name from the web address
competition_name <- "sliced-s01e08-KJSEks"
zipfile <- paste0(data_dir, "/", competition_name, ".zip")
path_export <- here::here("data", Sys.Date(), paste0(competition_name, ".csv"))

Import and Exploratory Data Analysis

A quick reminder before downloading the dataset: Go to the web site and accept the competition terms!!!

Import and Skim

We have basic shell commands available to interact with Kaggle here:

# from the Kaggle api https://github.com/Kaggle/kaggle-api
# note: shell() is Windows-only; use system() on macOS or Linux

# the leaderboard
shell(glue::glue("kaggle competitions leaderboard { competition_name } -s"))

# the files to download
shell(glue::glue("kaggle competitions files -c { competition_name }"))

# the command to download files
shell(glue::glue("kaggle competitions download -c { competition_name } -p { data_dir }"))

# unzip the files received
shell(glue::glue("unzip { zipfile } -d { data_dir }"))

We are reading in the contents of the three data files here, cleaning the genres text, unnesting the id_artists column, joining the artists table to each artist id, and finally collapsing the genres back into one string per track while averaging the artists’ followers.
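The unnest, join, and collapse steps can be illustrated without the Kaggle files. A minimal base R sketch on a hypothetical two-track, two-artist toy example (tracks, artists_toy, long, and collapsed are made-up names). It uses strsplit() and merge() in place of tidytext::unnest_tokens() and left_join(), so tokenizing details differ slightly from the real pipeline:

```r
# Hypothetical toy inputs shaped like train.csv and artists.csv
tracks <- data.frame(
  id = c("t1", "t2"),
  id_artists = c("['a1', 'a2']", "['a2']"),
  stringsAsFactors = FALSE
)
artists_toy <- data.frame(
  id = c("a1", "a2"),
  followers = c(100, 300),
  genres = c("['pop', 'dance pop']", "['rock']"),
  stringsAsFactors = FALSE
)

# 1. Clean the list-like strings (brackets, quotes, and commas in genres)
artists_toy$genres <- gsub("\\[|\\]|'|,", "", artists_toy$genres)
tracks$id_artists <- gsub("\\[|\\]|'", "", tracks$id_artists)

# 2. Unnest: one row per (track, artist) pair
ids <- strsplit(tracks$id_artists, ",\\s*")
long <- data.frame(
  id = rep(tracks$id, lengths(ids)),
  id_artists = unlist(ids),
  stringsAsFactors = FALSE
)

# 3. Join the artist attributes onto each pair
long <- merge(long, artists_toy,
  by.x = "id_artists", by.y = "id", all.x = TRUE
)

# 4. Collapse back to one row per track
collapsed <- do.call(rbind, lapply(split(long, long$id), function(d) {
  data.frame(
    id = d$id[1],
    genres = paste(d$genres, collapse = " "),
    followers = mean(d$followers),
    stringsAsFactors = FALSE
  )
}))
collapsed
```

Track t1 ends up with the combined genres of both artists and their average follower count, which is the shape the real train_df and test_df take after summarize().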

artists <-
  read_csv(file = glue::glue("{ data_dir }/artists.csv")) %>%
  mutate(genres = str_remove_all(genres, "\\[|\\]|\\'|\\,"))

train_df <-
  read_csv(file = glue::glue("{ data_dir }/train.csv")) %>%
  mutate(id_artists = str_remove_all(id_artists, "\\[|\\]|\\'")) %>%
  tidytext::unnest_tokens(id_artists, id_artists, to_lower = FALSE) %>%
  left_join(artists %>% select(id_artists = id, followers, genres),
    by = "id_artists"
  ) %>%
  group_by(
    id, popularity, name, artists, duration_ms, danceability, energy,
    key, loudness, speechiness, acousticness, instrumentalness,
    liveness, valence, tempo, release_year
  ) %>%
  summarize(
    genres = str_c(genres, collapse = " "),
    followers = mean(followers),
    .groups = "drop"
  )

test_df <-
  read_csv(file = glue::glue("{ data_dir }/test.csv")) %>%
  mutate(id_artists = str_remove_all(id_artists, "\\[|\\]|\\'")) %>%
  tidytext::unnest_tokens(id_artists, id_artists, to_lower = FALSE) %>%
  left_join(artists %>% select(id_artists = id, followers, genres),
    by = "id_artists"
  ) %>%
  group_by(
    id, name, artists, duration_ms, danceability, energy,
    key, loudness, speechiness, acousticness, instrumentalness,
    liveness, valence, tempo, release_year
  ) %>%
  summarize(
    genres = str_c(genres, collapse = " "),
    followers = mean(followers),
    .groups = "drop"
  )

Some questions to answer here: Which features have missing data that may require imputation? What does the outcome variable look like, in terms of imbalance?

skimr::skim(train_df)

The outcome variable popularity ranges from 0 to 100. Only followers has obvious missing data. We will take a closer look at the categorical variable levels in a moment.

Field descriptions, from Kaggle:

train

- id (Unique identifier of track)
- name (Name of the song)
- popularity (Ranges from 0 to 100)
- duration_ms (Integer typically ranging from 200k to 300k)
- artists (List of artists mentioned)
- id_artists (Ids of mentioned artists)
- danceability (Ranges from 0 to 1)
- energy (Ranges from 0 to 1)
- key (All keys on octave encoded as values ranging from 0 to 11, starting on C as 0, C# as 1 and so on…)
- loudness (Float typically ranging from -60 to 0)
- speechiness (Ranges from 0 to 1)
- acousticness (Ranges from 0 to 1)
- instrumentalness (Ranges from 0 to 1)
- liveness (Ranges from 0 to 1)
- valence (Ranges from 0 to 1)
- tempo (Float typically ranging from 50 to 150)
- release_year (Year of release)
- release_month (Month of year released)
- release_day (Day of month released)

artists

- id (Id of artist)
- followers (Total number of followers of artist)
- genres (Genres associated with this artist)
- name (Name of artist)
- popularity (Popularity of given artist based on all his/her tracks)

Outcome Variable Distribution

Popularity is left skewed with a lot of zero values. Let’s look at the distribution with mean and median:

summarize_popularity <- function(tbl) {
  tbl %>%
    summarize(
      median_popularity = median(popularity),
      n = n(),
      mean_popularity = mean(popularity),
      .groups = "drop"
    ) %>%
    arrange(desc(n))
}

train_df %>%
  summarize_popularity()

train_df %>%
  ggplot(aes(popularity + .1)) +
  geom_histogram(bins = 30) +
  scale_x_log10(labels = scales::pretty_breaks()) +
  labs(
    title = "Spotify Playlist Tracks Popularity Distribution",
    x = "Popularity"
  )
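Why the + .1 inside aes()? Popularity contains many zeros, and the log of zero is not finite, so without an offset ggplot2 would warn about infinite values and drop those tracks from the log-scaled histogram. A short base R illustration, added here as an aside:

```r
# Popularity contains many zeros, and log10(0) is -Inf,
# which a log-scaled axis cannot place
log10(0)

# A small offset (0.1, as in aes(popularity + .1)) keeps the zeros on the axis
log10(c(0, 9.9, 99.9) + 0.1) # -1, 1, 2
```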

We will work with tree-based machine learning techniques, which tolerate this kind of skewed, zero-heavy outcome better than many others.

Categorical Feature Plots

train_df %>%
  tidytext::unnest_tokens(genres, genres, to_lower = FALSE) %>%
  group_by(genres = withfreq(genres)) %>%
  summarize_popularity() %>%
  mutate(
    genres = fct_lump(genres, n = 12, w = n),
    genres = fct_reorder(genres, mean_popularity)
  ) %>%
  slice_max(order_by = n, n = 11) %>%
  ggplot(aes(mean_popularity, genres)) +
  geom_point(aes(size = n)) +
  scale_size_continuous(
    guide = "none",
    range = c(1, 6)
  ) +
  labs(
    x = "Popularity",
    y = "",
    title = "Which of the 11 most common genres are most popular?",
    subtitle = "Size of points is proportional to frequency in the dataset. Frequency of occurrences in (parentheses)"
  )

train_df %>%
  group_by(name = withfreq(name)) %>%
  summarize_popularity() %>%
  mutate(
    name = fct_lump(name, n = 12, w = n),
    name = fct_reorder(name, mean_popularity)
  ) %>%
  slice_max(order_by = n, n = 11) %>%
  ggplot(aes(mean_popularity, name)) +
  geom_point(aes(size = n)) +
  scale_size_continuous(
    guide = "none",
    range = c(1, 6)
  ) +
  labs(
    x = "Popularity",
    y = "",
    title = "Which of the 11 most common track names are most popular?",
    subtitle = "Size of points is proportional to frequency in the dataset. Frequency of occurrences in (parentheses)"
  )

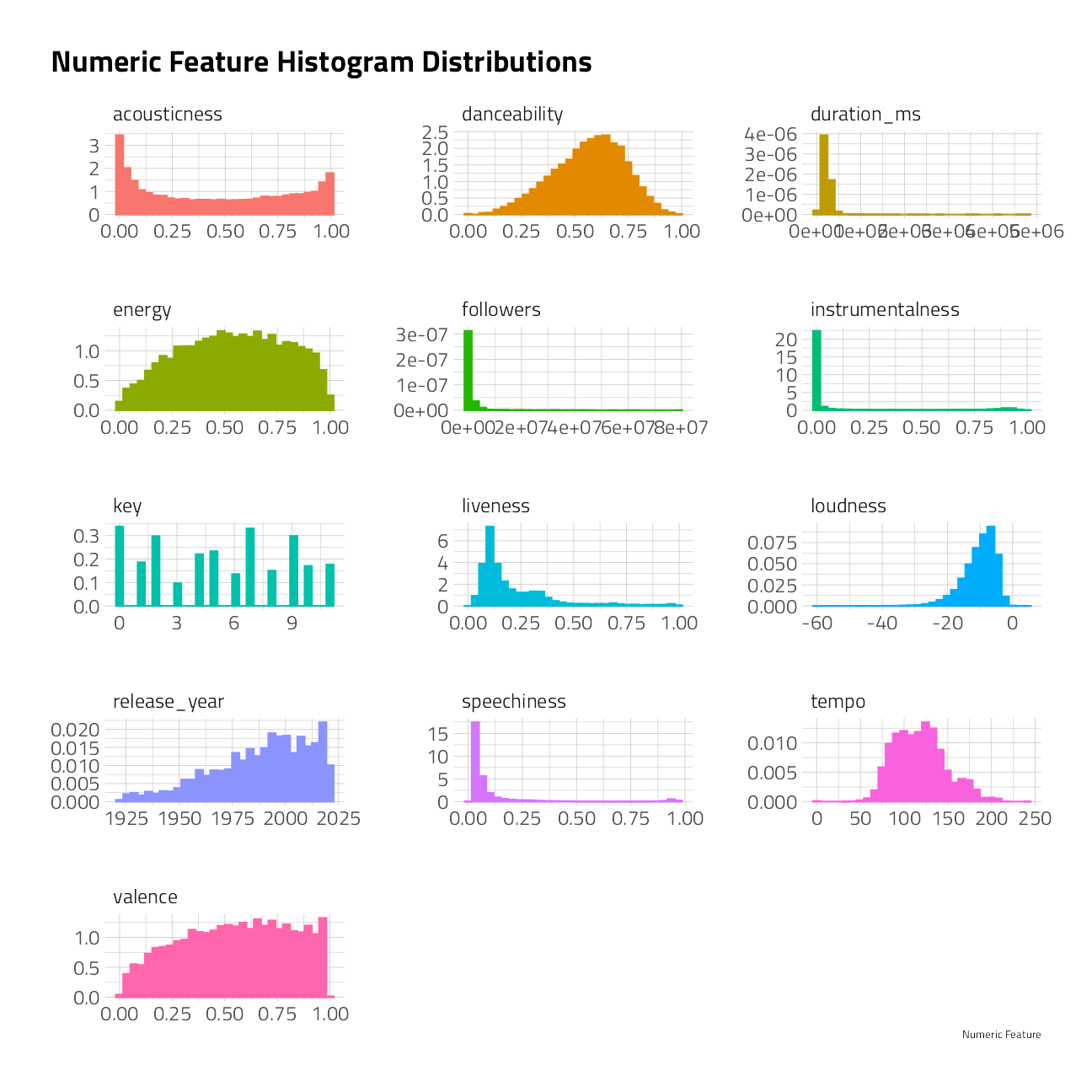

Numeric Feature Plots

train_numeric <- train_df %>%
  keep(is.numeric) %>%
  colnames()

train_df %>%
  select(all_of(train_numeric)) %>%
  select(-id, -popularity) %>%
  pivot_longer(
    cols = everything(),
    names_to = "key",
    values_to = "value"
  ) %>%
  filter(!is.na(value)) %>%
  ggplot(mapping = aes(value,
    after_stat(density),
    fill = key, color = key
  )) +
  geom_histogram(
    position = "identity",
    bins = 30,
    show.legend = FALSE
  ) +
  facet_wrap(~key, scales = "free", ncol = 3) +
  theme(
    plot.subtitle = ggtext::element_textbox_simple(),
    plot.background = element_rect(color = "white")
  ) +
  labs(
    title = "Numeric Feature Histogram Distributions",
    x = "Numeric Feature",
    y = NULL
  )

A facet plot of each numeric feature against release year, with each hexagon colored by the mean popularity of the tracks that fall in it.

train_df %>%
  select(id, popularity, duration_ms:release_year) %>%
  pivot_longer(
    cols = -c(id, popularity, release_year),
    names_to = "key",
    values_to = "value"
  ) %>%
  ggplot(aes(release_year, value, z = popularity)) +
  stat_summary_hex(alpha = 0.9, bins = 30) +
  scale_x_continuous(n.breaks = 3) +
  facet_wrap(~key, scales = "free") +
  labs(
    fill = "Mean popularity",
    title = "Spotify Numeric Features"
  ) +
  theme(legend.position = c(0.9, 0.2))

Numeric Feature Correlations

train_df %>%
  select(danceability:release_year) %>%
  correlate(
    method = "pearson",
    use = "everything"
  ) %>%
  rearrange() %>%
  shave() %>%
  rplot(
    shape = 16,
    print_cor = TRUE,
    legend = TRUE
  ) +
  scale_x_discrete(guide = guide_axis(n.dodge = 2))

Acousticness, loudness, danceability, and release_year are correlated. Acousticness is anti-correlated with energy.

Conclusion:

- The artist’s followers feature requires imputation for missing data and would benefit from a log transformation.
- We will attempt to use some number of the most frequent genres. Let’s start with 50.
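A minimal base R sketch of these two preprocessing ideas on hypothetical follower counts. In the recipe, step_impute_median() learns the median from the training data when the recipe is prepped, and step_log(offset = 1) uses the natural log by default:

```r
# Hypothetical follower counts, including a missing value
followers <- c(0, 99, NA, 999)

# Median imputation: fill NAs with the median of the observed values
followers_imputed <- ifelse(is.na(followers),
  median(followers, na.rm = TRUE), followers
)

# Log transform with an offset of 1: keeps zero followers finite,
# since log(0 + 1) = 0
followers_log <- log(followers_imputed + 1)
followers_log
```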

Preprocessing

The recipe

To move quickly, this basic recipe tunes nothing, takes all of the numeric features, and uses only the 50 most frequent genres.
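Conceptually, step_tokenize(), step_tokenfilter(max_tokens = 50), and step_tf(weight_scheme = "binary") turn the genres string into one 0/1 indicator column per frequent genre token. A simplified base R sketch with hypothetical genre strings and max_tokens = 2; textrecipes’ own tokenizer and tie-breaking rules may differ. Note that word tokenization splits multi-word genres such as “dance pop” into separate tokens.

```r
# Hypothetical genre strings, one per track
genres <- c("pop rock", "rock", "jazz rock", "pop")
max_tokens <- 2

# Tokenize on whitespace
tokens <- strsplit(genres, "\\s+")

# Keep the max_tokens most frequent tokens across all tracks
counts <- sort(table(unlist(tokens)), decreasing = TRUE)
keep <- names(counts)[seq_len(min(max_tokens, length(counts)))]

# Binary term frequency: 1 if the track mentions the token, else 0
tf_binary <- t(vapply(tokens, function(tk) as.integer(keep %in% tk),
  integer(length(keep))
))
colnames(tf_binary) <- paste0("tf_genres_", keep)
tf_binary
```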

basic_rec <-
  recipe(
    popularity ~ duration_ms + danceability + energy + key + loudness +
      speechiness + acousticness + instrumentalness + liveness + valence +
      tempo + release_year + followers + genres,
    data = train_df
  ) %>%
  step_tokenize(genres) %>%
  step_tokenfilter(genres, max_tokens = 50) %>%
  step_tf(genres, weight_scheme = "binary") %>%
  # convert the binary term indicators to numeric 0/1
  step_mutate_at(contains("tf_genres"),
    fn = ~ if_else(. == TRUE, 1, 0)
  ) %>%
  step_impute_median(followers) %>%
  step_log(followers, duration_ms, offset = 1)

The dataset for modeling:

basic_rec %>%
  # finalize_recipe(list(num_comp = 2)) %>%
  prep() %>%
  juice()

Cross Validation

We will use 5-fold cross-validation, stratified on popularity so that each fold has a similar distribution of the outcome. Averaging performance over the held-out folds gives an estimate that is less flattered by over-fitting than performance on the training data itself.

Proper business modeling practice would hold out a sample from the training data entirely for assessing model performance. In Kaggle competitions, the holdout is the “test” set itself, scored through the competitor’s submission.
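To see what stratification does, here is a simplified base R sketch: bin the numeric outcome into quartiles, then deal each bin’s rows out evenly across the five folds in random order. This approximates, but is not identical to, what rsample::vfold_cv() does for a numeric strata variable (which by default bins it into about four quantile groups); the popularity values here are simulated.

```r
set.seed(2021)
v <- 5

# Simulated outcome standing in for popularity
popularity <- sample(0:100, 1000, replace = TRUE)

# Bin the numeric outcome into (up to) four quantile-based strata
breaks <- unique(quantile(popularity, probs = seq(0, 1, 0.25)))
strata <- cut(popularity, breaks = breaks, include.lowest = TRUE)

# Within each stratum, assign folds 1..v as evenly as possible, in random order
fold <- integer(length(popularity))
for (s in levels(strata)) {
  idx <- which(strata == s)
  fold[idx] <- rep_len(seq_len(v), length(idx))[sample.int(length(idx))]
}

# Every fold now sees a similar mix of low, medium, and high popularity
table(strata, fold)
```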

set.seed(2021)

(folds <- vfold_cv(train_df, v = 5, strata = popularity))

Machine Learning: Random Forest

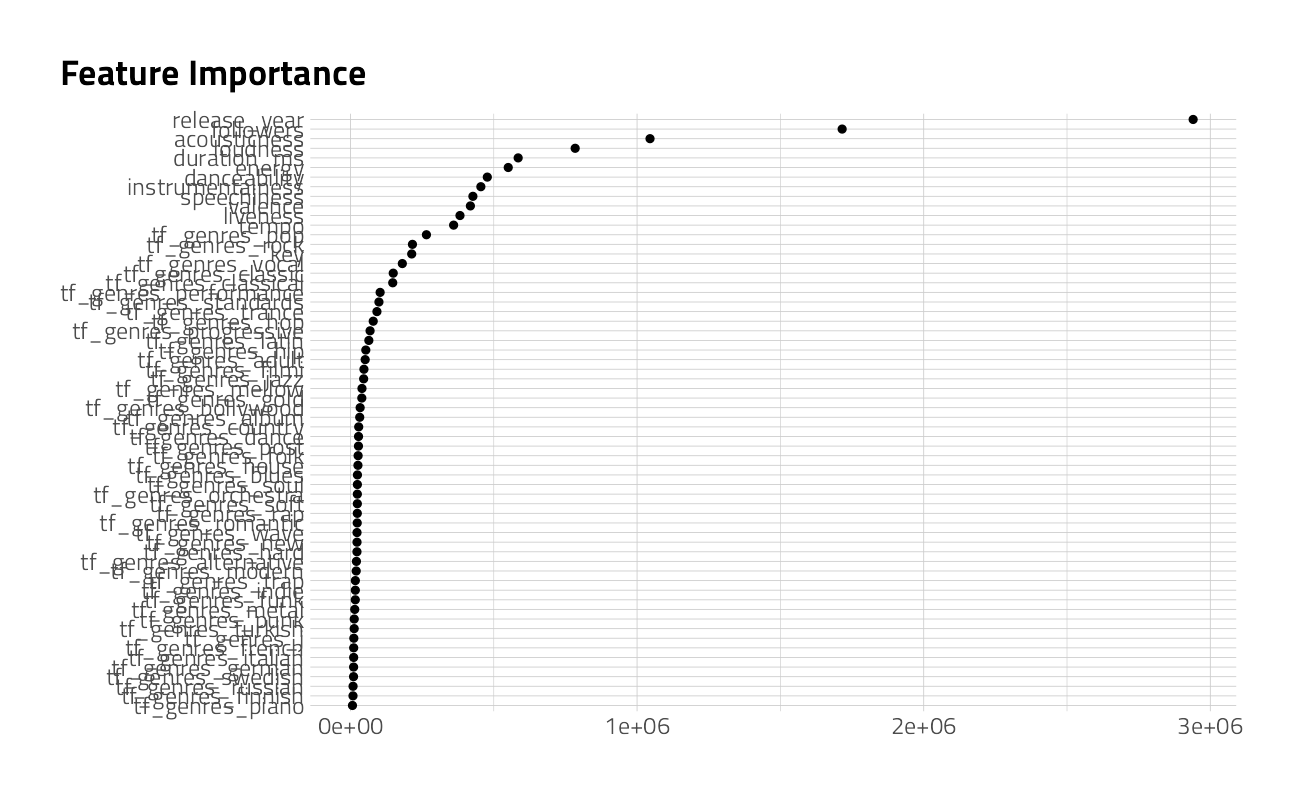

Let’s run models in two steps. The first is a simple, fast shallow random forest, to confirm that the model will run and observe feature importance scores. The second will use xgboost. Both use the basic recipe preprocessor for now.

Model Specification

This first model is a bagged tree ensemble, where the number of predictors considered for each split of a tree (i.e., mtry) equals the number of all available predictors. The min_n of 10 means that a node in any of the 50 decision trees needs at least 10 observations before it can be split further. This stops the trees from splitting down to individual observations, although with cost_complexity = 0 they can still grow fairly deep.
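Bagging itself is simple: fit each tree to a bootstrap resample of the rows and average the trees’ predictions. A minimal sketch of that idea with rpart on the built-in mtcars data, assuming min_n = 10 and cost_complexity = 0 correspond to rpart’s minsplit = 10 and cp = 0; baguette adds conveniences on top of this, such as the variable importance scores used in this analysis.

```r
library(rpart)

set.seed(2021)
times <- 50 # number of bootstrap trees, as in set_engine("rpart", times = 50)
n <- nrow(mtcars)
preds <- matrix(NA_real_, nrow = n, ncol = times)

for (b in seq_len(times)) {
  # Bootstrap resample: draw n rows with replacement
  boot_rows <- sample.int(n, n, replace = TRUE)
  tree <- rpart(mpg ~ .,
    data = mtcars[boot_rows, ],
    control = rpart.control(minsplit = 10, cp = 0)
  )
  preds[, b] <- predict(tree, newdata = mtcars)
}

# The bagged prediction is the average over all trees
bagged_pred <- rowMeans(preds)

# Training-set RMSE; like any in-sample error, it is optimistic
sqrt(mean((mtcars$mpg - bagged_pred)^2))
```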

(bag_spec <-
  bag_tree(min_n = 10) %>%
  set_engine("rpart", times = 50) %>%
  set_mode("regression"))

Bagged Decision Tree Model Specification (regression)

Main Arguments:
  cost_complexity = 0
  min_n = 10

Engine-Specific Arguments:
  times = 50

Computational engine: rpart

Parallel backend

To speed up computation we will use a parallel backend.

all_cores <- parallelly::availableCores(omit = 1)
all_cores

system
    11

future::plan("multisession", workers = all_cores) # on Windows

Fit and Variable Importance

Let’s make a cursory check of the recipe and variable importance, which comes out of rpart for free. This workflow also handles factors without dummies.

bag_wf <-
  workflow() %>%
  add_recipe(basic_rec) %>%
  add_model(bag_spec)

bag_fit <- parsnip::fit(bag_wf, data = train_df)

extract_fit_parsnip(bag_fit)$fit$imp %>%
  mutate(term = fct_reorder(term, value)) %>%
  ggplot(aes(value, term)) +
  geom_point() +
  geom_errorbarh(aes(
    xmin = value - `std.error` / 2,
    xmax = value + `std.error` / 2
  ),
  height = .3
  ) +
  labs(
    title = "Feature Importance",
    x = NULL, y = NULL
  )

augment(bag_fit, train_df) %>%
  select(id, popularity, .pred) %>%
  rmse(truth = popularity, estimate = .pred)

The RMSE here is not a great result. An RMSE of around 5.8 means that, on the training data, a typical prediction misses the true popularity by roughly 6 points on a 0 to 100 scale where the mean popularity is about 27. Even worse, because this error is measured on the same data the model was trained on, the model is likely over-fit and would perform worse on holdout data.

Even so, let’s bank this first submission to Kaggle as-is, and work more with xgboost to do better.

augment(bag_fit, test_df) %>%
  select(id, popularity = .pred) %>%
  write_csv(file = path_export)

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "First model"'))

Machine Learning: XGBoost Model 1

Model Specification

Let’s start with a boosted model that runs fast and gives an early indication of which hyperparameters make the most difference in model performance.

(xgboost_spec <- boost_tree(
  trees = tune(),
  min_n = tune(),
  learn_rate = tune(),
  tree_depth = tune(),
  stop_iter = 20
) %>%
  set_engine("xgboost", validation = 0.2) %>%
  set_mode("regression"))

Boosted Tree Model Specification (regression)

Main Arguments:
  trees = tune()
  min_n = tune()
  tree_depth = tune()
  learn_rate = tune()
  stop_iter = 20

Engine-Specific Arguments:
  validation = 0.2

Computational engine: xgboost

Tuning and Performance

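The grid here is only 7 candidate combinations, drawn from the default ranges of the tuned parameters. Each xgboost fit is also set up to stop early: it holds out 20% of its training rows (validation = 0.2) and stops adding trees once the validation error has not improved for 20 rounds (stop_iter = 20).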
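A hypothetical helper sketching that early-stopping rule in base R. It is a simplification for illustration, not parsnip’s or xgboost’s actual code:

```r
# Hypothetical helper: given the validation loss after each boosting round,
# report where training would stop with a patience of `stop_iter` rounds
early_stop <- function(val_loss, stop_iter = 20) {
  best_loss <- Inf
  best_iter <- NA_integer_
  rounds_without_improvement <- 0L
  for (i in seq_along(val_loss)) {
    if (val_loss[i] < best_loss) {
      best_loss <- val_loss[i]
      best_iter <- i
      rounds_without_improvement <- 0L
    } else {
      rounds_without_improvement <- rounds_without_improvement + 1L
      if (rounds_without_improvement >= stop_iter) {
        return(list(stopped_at = i, best_iter = best_iter))
      }
    }
  }
  list(stopped_at = length(val_loss), best_iter = best_iter)
}

# Made-up validation RMSE by round, with a patience of 3 rounds:
# training stops at round 6, and the best round was 3
early_stop(c(10, 9, 8, 8.5, 8.2, 8.1, 8.3), stop_iter = 3)
```

Early stopping is why a generous upper range for trees costs less than it seems: unpromising fits end well before the requested number of trees.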

set.seed(2021)

cv_res_xgboost <-
  workflow() %>%
  add_recipe(basic_rec) %>%
  add_model(xgboost_spec) %>%
  tune_grid(
    resamples = folds,
    grid = 7,
    metrics = mset
  )

autoplot(cv_res_xgboost)

collect_metrics(cv_res_xgboost) %>%
  arrange(mean)

The best learning rates are very small, around 0.013. Setting trees at 753 and depth at 2 appears to give stable results.

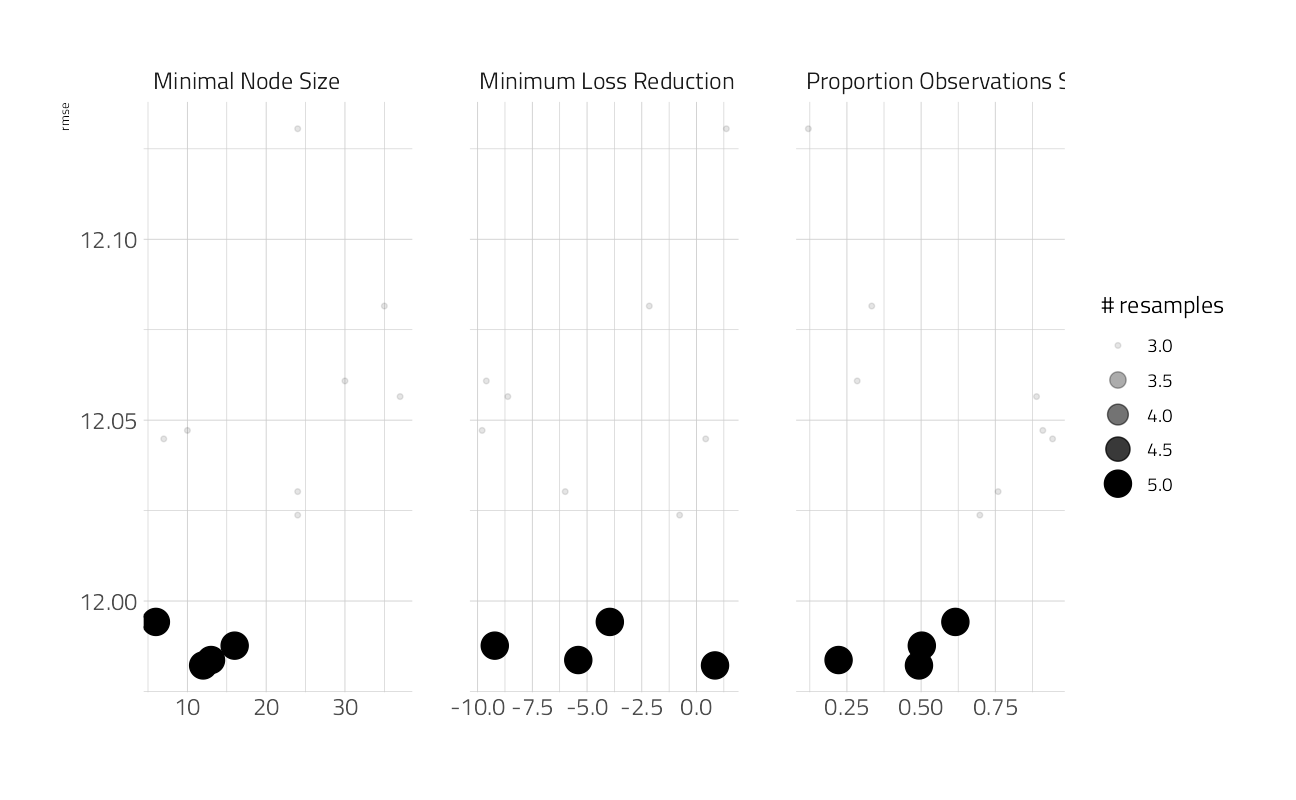

Machine Learning: XGBoost Model 2

Let’s use what we learned above to fix the learning rate, the number of trees, and the tree depth, and to tune other hyperparameters instead. This time, let’s also try the tune_race_anova technique, which stops evaluating parameter combinations that are clearly performing poorly after the first few resamples, rather than running every combination on every fold.
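The racing idea can be sketched as: after a few resamples (finetune’s burn-in), compare every candidate with the current leader and drop those that are reliably worse. finetune fits a repeated-measures ANOVA model across resamples for this; the simplified version below instead uses one-sided paired t-tests on hypothetical per-fold RMSE values (race_filter() and the candidate names are made up), so treat it as an illustration of the logic rather than the package’s exact rule.

```r
# Hypothetical RMSE per fold (rows) for four candidate parameter sets (columns)
rmse_by_fold <- cbind(
  cand_1 = c(12.0, 12.2, 11.9),
  cand_2 = c(12.3, 11.9, 12.1),
  cand_3 = c(14.0, 14.5, 13.8),
  cand_4 = c(12.05, 12.1, 12.0)
)

race_filter <- function(res, alpha = 0.05) {
  # The current leader has the lowest mean RMSE
  best <- names(which.min(colMeans(res)))
  keep <- vapply(colnames(res), function(cand) {
    if (cand == best) {
      return(TRUE)
    }
    # Paired differences: the same folds are used for every candidate
    diffs <- res[, cand] - res[, best]
    # One-sided test: is this candidate's RMSE reliably higher than the leader's?
    p_value <- t.test(diffs, alternative = "greater")$p.value
    p_value >= alpha
  }, logical(1))
  colnames(res)[keep]
}

# cand_3 is dropped; the others stay in the race
race_filter(rmse_by_fold)
```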

Model Specification

(xgboost_spec <- boost_tree(
  trees = 753,
  min_n = tune(),
  learn_rate = 0.013,
  tree_depth = 2,
  sample_size = tune(),
  loss_reduction = tune(),
  stop_iter = 20
) %>%
  set_engine("xgboost", validation = 0.1) %>%
  set_mode("regression"))

Boosted Tree Model Specification (regression)

Main Arguments:
  trees = 753
  min_n = tune()
  tree_depth = 2
  learn_rate = 0.013
  loss_reduction = tune()
  sample_size = tune()
  stop_iter = 20

Engine-Specific Arguments:
  validation = 0.1

Computational engine: xgboost

xgboost_param <- parameters(xgboost_spec) %>%
  update(
    min_n = min_n(c(4, 39))
  )

Tuning and Performance

cv_res_xgboost <-

workflow() %>%

add_recipe(basic_rec) %>%

add_model(xgboost_spec) %>%

tune_race_anova(

resamples = folds,

grid = xgboost_param %>% grid_max_entropy(size = 12),

control = control_race(

verbose = FALSE,

save_pred = TRUE,

save_workflow = TRUE,

extract = extract_model,

parallel_over = "resamples"

),

metrics = mset

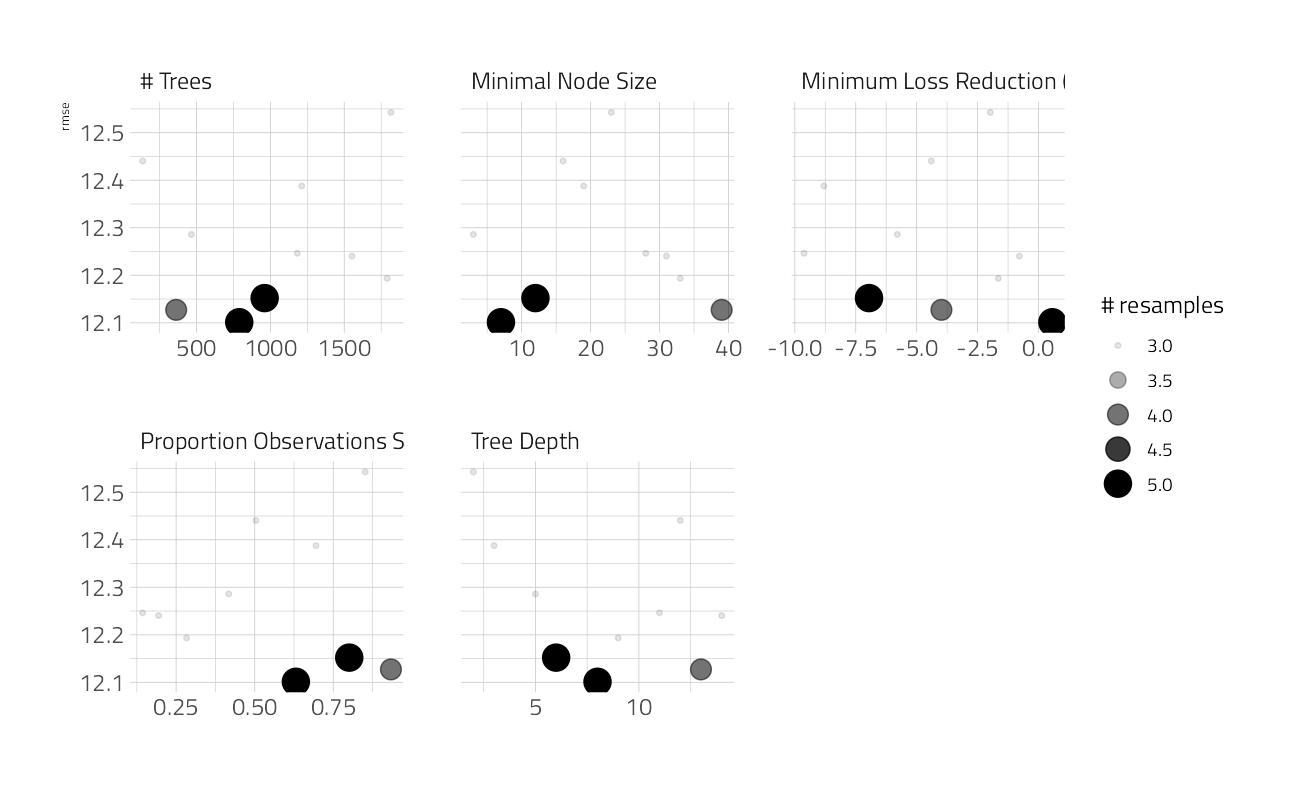

)autoplot(cv_res_xgboost)

collect_metrics(cv_res_xgboost) %>%

arrange(mean)The best mean rmse across folds is about 12, which is discouraging. This figure is likely more robust and a better estimate of performance on holdout data. Let’s fit on the entire training set at these hyperparameters to get a single RMSE performance estimate on the best model so far.

xgb_wf_best <-
  workflow() %>%
  add_recipe(basic_rec) %>%
  add_model(xgboost_spec) %>%
  finalize_workflow(select_best(cv_res_xgboost))

fit_best <- xgb_wf_best %>%
  parsnip::fit(data = train_df)

augment(fit_best, train_df) %>%
  select(id, popularity, .pred) %>%
  rmse(truth = popularity, estimate = .pred)

Let’s bank this second submission to Kaggle, and work even more with xgboost to do better.

augment(fit_best, test_df) %>%
  select(id, popularity = .pred) %>%
  write_csv(file = path_export)

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "Second model"'))

SHAP Variable Importance

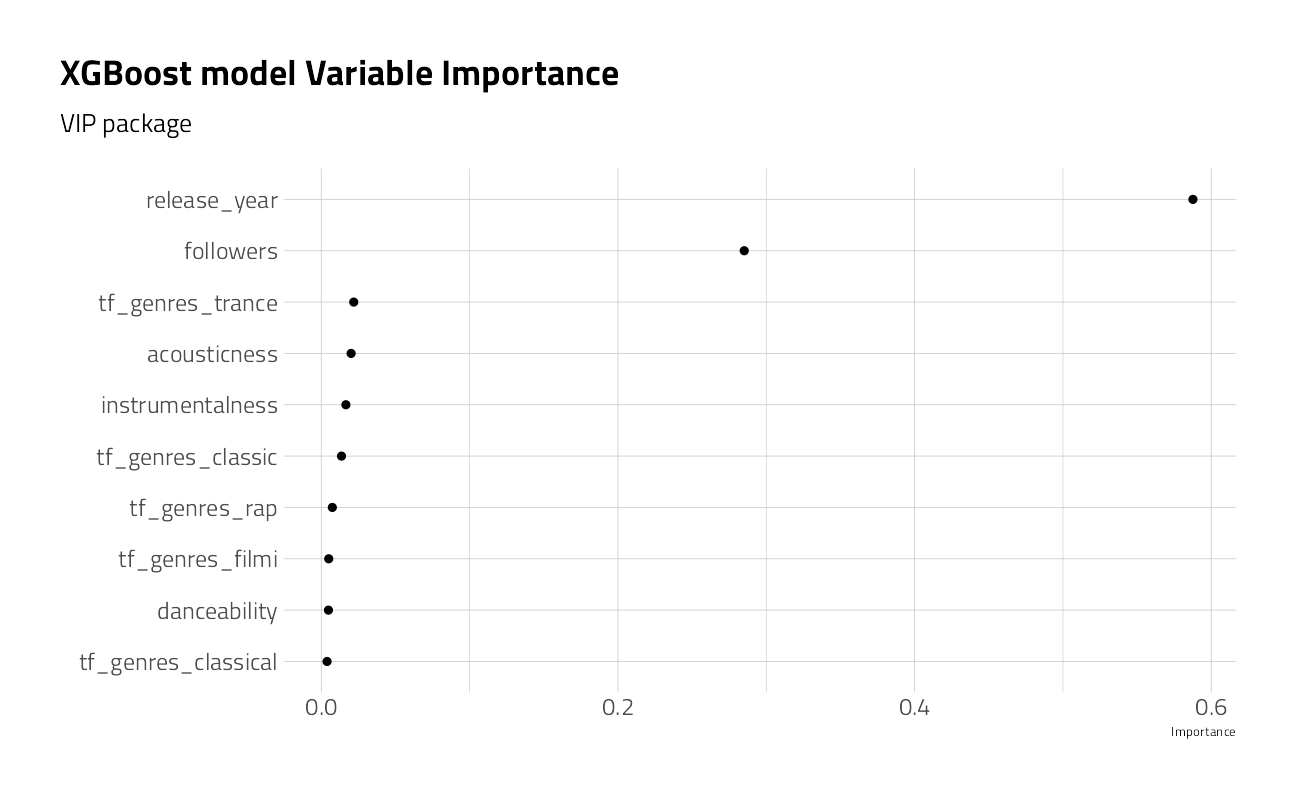

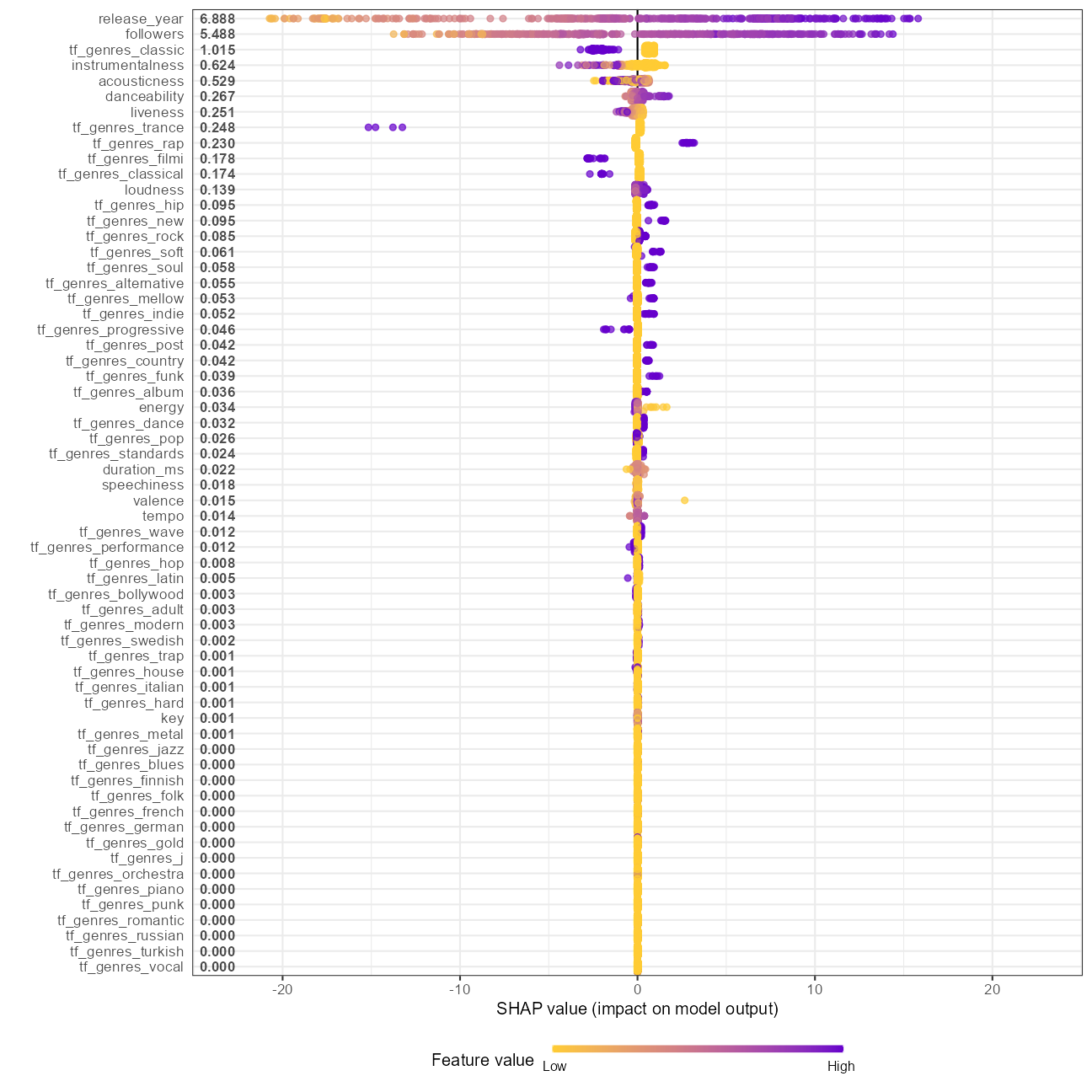

Let’s take a deeper dive into the XGBoost variable importance.

fit_best %>%
  extract_fit_parsnip() %>%
  vip(geom = "point") +
  labs(
    title = "XGBoost model Variable Importance",
    subtitle = "VIP package"
  )

For inference, the XGBoost model suggests that after release_year and followers, the underlying features that drive popularity are somewhat different from those ranked highly by the bagged tree model. Let’s look closer to understand what is going on, so that we can improve the model further.
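SHAPforxgboost obtains per-feature contributions from xgboost’s built-in tree SHAP computation. To make the underlying idea concrete, here is a brute-force sketch of exact Shapley values for a tiny hypothetical model with three features, averaging over a simulated background sample for the features left out of each coalition. It is exponential in the number of features and handles left-out features differently from tree SHAP, so it only illustrates the definition; it is not how the package computes them.

```r
# Hypothetical model: additive in x1, with an x2-by-x3 interaction
f <- function(X) 2 * X[, 1] + X[, 2] * X[, 3]

set.seed(1)
background <- matrix(rnorm(300), ncol = 3) # simulated reference data
x <- c(1, 2, 3) # the observation to explain
p <- length(x)

# Value of a coalition S: average prediction with the features in S fixed at x
value <- function(S) {
  Z <- background
  if (length(S) > 0) Z[, S] <- matrix(x[S], nrow(Z), length(S), byrow = TRUE)
  mean(f(Z))
}

shapley <- numeric(p)
for (j in seq_len(p)) {
  others <- setdiff(seq_len(p), j)
  for (k in 0:length(others)) {
    # All coalitions of size k drawn from the other features
    subsets <- if (k == 0) {
      list(integer(0))
    } else {
      lapply(combn(length(others), k, simplify = FALSE), function(i) others[i])
    }
    weight <- factorial(k) * factorial(p - k - 1) / factorial(p)
    for (S in subsets) {
      shapley[j] <- shapley[j] + weight * (value(c(S, j)) - value(S))
    }
  }
}
shapley
```

The contributions add up to the difference between this observation’s prediction and the average prediction, which is the property that makes SHAP summary and dependence plots readable on the model’s output scale.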

some_training_data <- basic_rec %>%
  prep() %>%
  # keep only the predictors, so the outcome is not passed to shap.prep()
  bake(
    new_data = slice_sample(train_df, n = 500),
    all_predictors(),
    composition = "matrix"
  )

parsnip_fit <- extract_fit_parsnip(fit_best)

shap <- shap.prep(parsnip_fit$fit, X_train = some_training_data)

# shap.importance(shap, names_only = TRUE)

shap.plot.summary(shap)

As mentioned before, release_year and the artist’s followers (log scale) have a large influence on popularity in the model output. Let’s look at individual features.

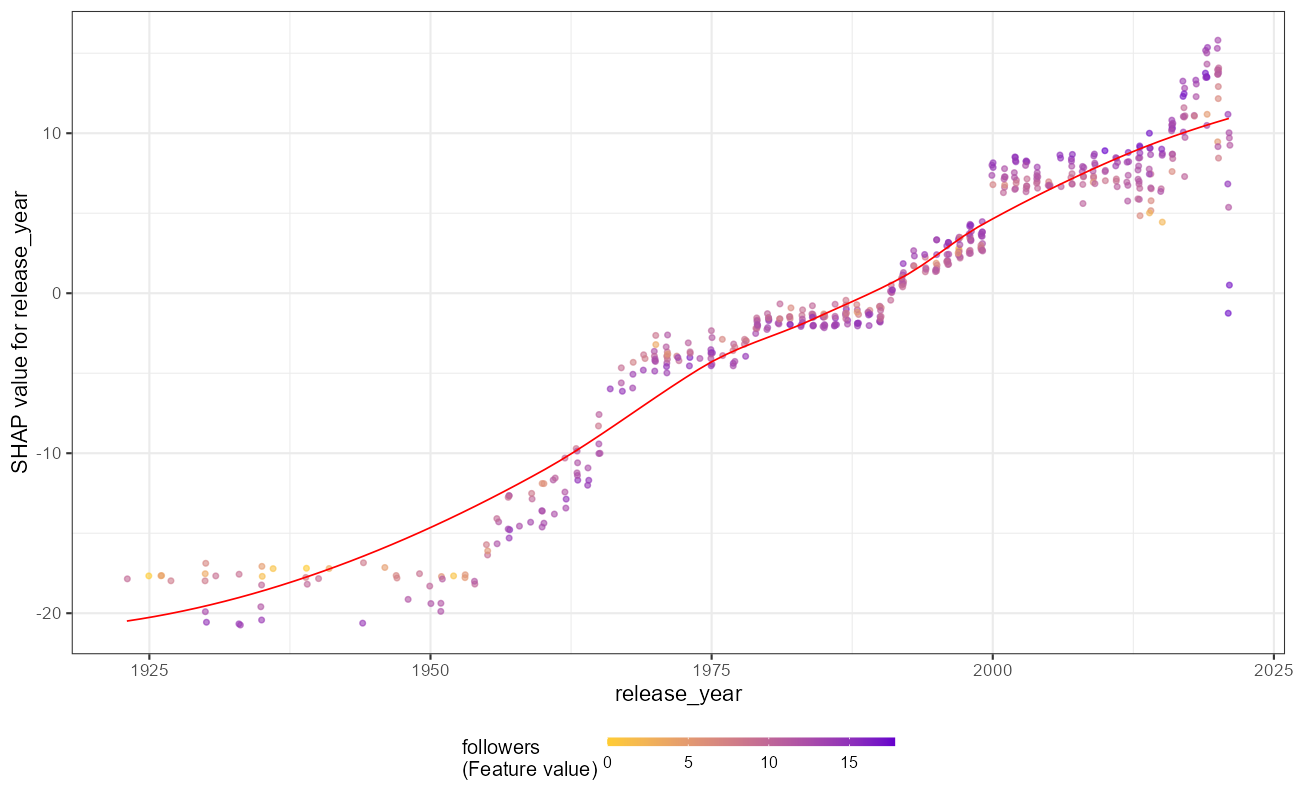

shap.plot.dependence(shap, "release_year",
  color_feature = "auto", alpha = 0.6,
  jitter_width = 0.1
)

The release year SHAP values are almost linear. There may be an interaction with energy that would improve the model.

shap.plot.dependence(shap, "followers",
  color_feature = "auto", alpha = 0.6,
  jitter_width = 0.1
)

The log transformation of followers looks justified, though something unusual appears to happen near 12 on the log scale. There may be an interaction with release_year that could improve the model.
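Because step_log() uses the natural log by default and the recipe adds an offset of 1, a transformed value of 12 can be mapped back to a raw follower count. A small worked check:

```r
# Invert log(followers + 1) = 12
expm1(12) # exp(12) - 1, roughly 162,754 followers
```

So the unusual region in the dependence plot corresponds to artists with on the order of 160,000 followers.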

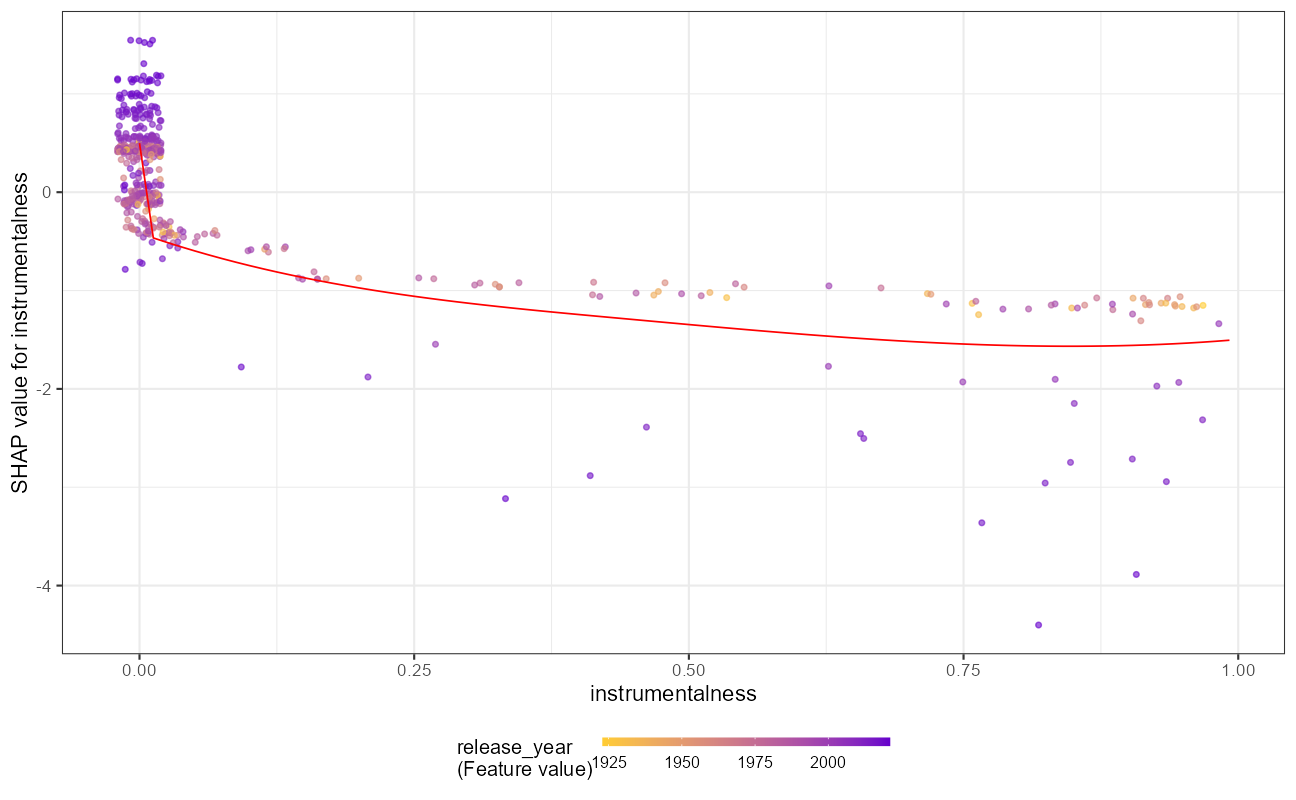

And for the feature instrumentalness:

shap.plot.dependence(shap, "instrumentalness",
  color_feature = "auto", alpha = 0.6,
  jitter_width = 0.02
)

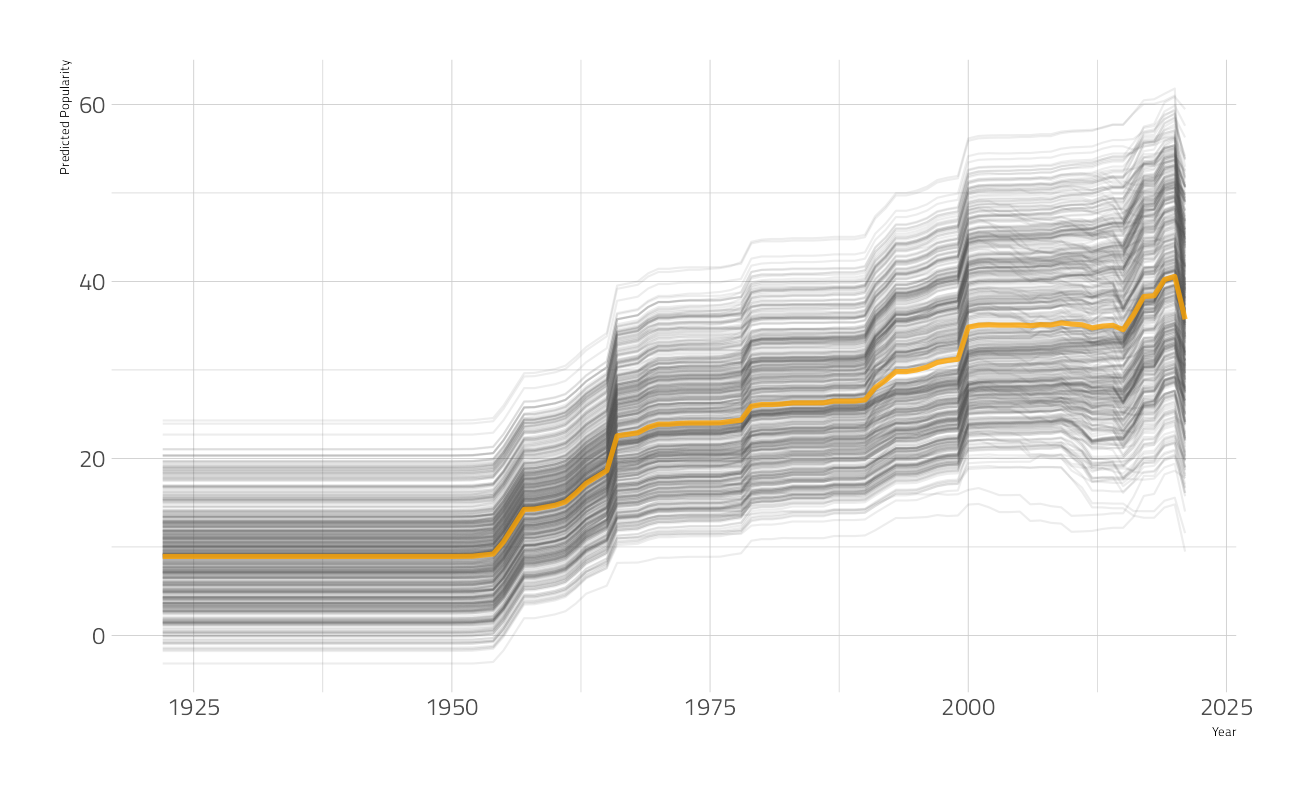

DALEX Partial Dependence Plots

What is the aggregated effect of the release_year feature over 500 examples?

explainer_xgb <- explain_tidymodels(
  fit_best,
  train_df %>% select(-popularity),
  train_df$popularity
)

Preparation of a new explainer is initiated
  -> model label : workflow ( default )
  -> data : 21000 rows 17 cols
  -> data : tibble converted into a data.frame
  -> target variable : 21000 values
  -> predict function : yhat.workflow will be used ( default )
  -> predicted values : No value for predict function target column. ( default )
  -> model_info : package tidymodels , ver. 0.1.4 , task regression ( default )
  -> predicted values : numerical, min = -3.274729 , mean = 27.55155 , max = 63.13488
  -> residual function : difference between y and yhat ( default )
  -> residuals : numerical, min = -55.70597 , mean = 0.02830947 , max = 51.18548
  A new explainer has been created!

pdp_year <- model_profile(explainer_xgb,
  N = 500,
  variables = "release_year"
)

as_tibble(pdp_year$agr_profiles) %>%
  ggplot(aes(`_x_`, `_yhat_`)) +
  geom_line(
    data = as_tibble(pdp_year$cp_profiles),
    aes(release_year, group = `_ids_`),
    size = 0.5, alpha = 0.1, color = "gray30"
  ) +
  geom_line(size = 1.2, alpha = 0.8, color = "orange") +
  labs(x = "Year", y = "Predicted Popularity")

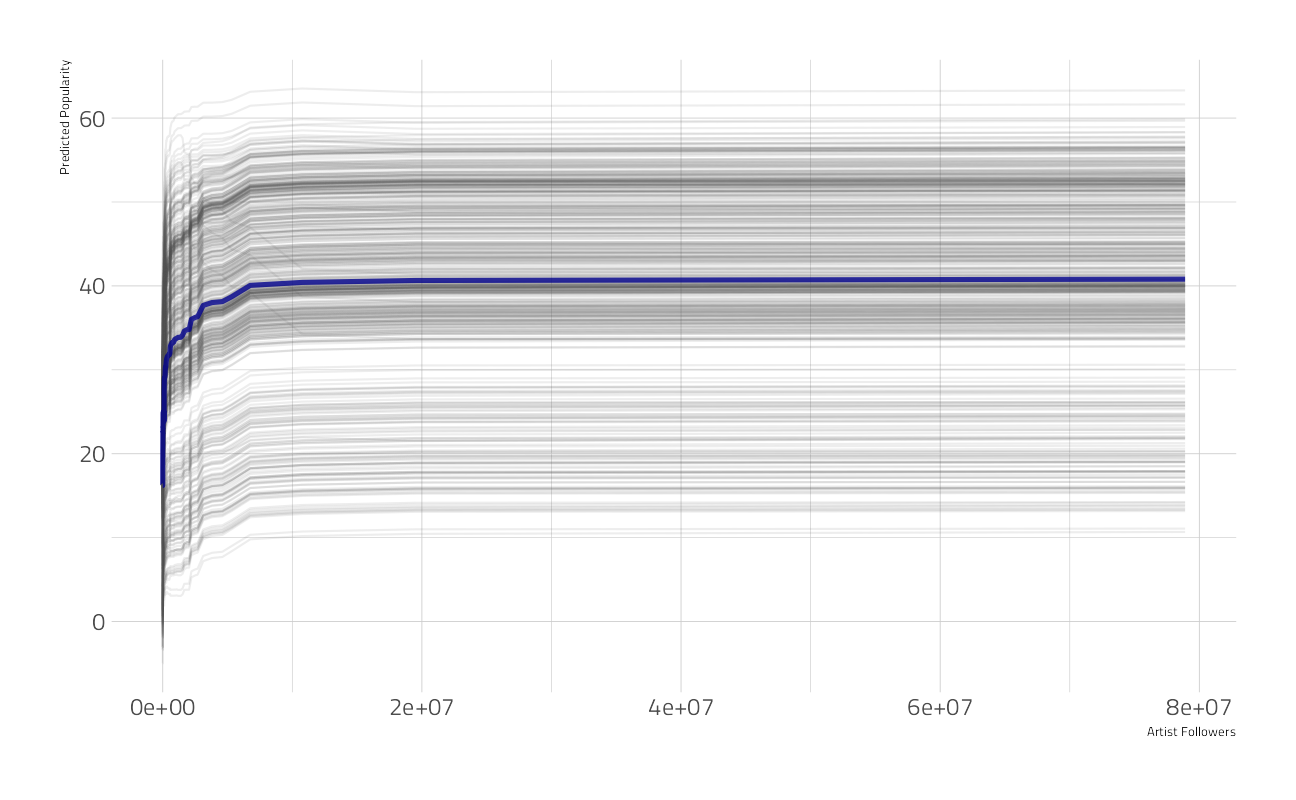
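The aggregated (orange) curve is the average of the individual ceteris-paribus (gray) curves: for each sampled observation, vary one feature over a grid while holding the others fixed, predict, then average across observations. A minimal base R sketch with a linear model on mtcars, standing in for the tuned workflow and DALEX’s model_profile():

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)

# Grid of values for the feature being profiled
grid <- seq(min(mtcars$wt), max(mtcars$wt), length.out = 10)

# Sample observations to profile (model_profile(N = 500) samples 500 rows)
set.seed(2021)
obs <- mtcars[sample.int(nrow(mtcars), 20), ]

# Ceteris-paribus profiles: rows are observations, columns are grid values
cp <- sapply(grid, function(g) {
  d <- obs
  d$wt <- g
  predict(fit, newdata = d)
})

# Partial dependence: the average profile
pdp <- colMeans(cp)
data.frame(wt = grid, pdp = pdp)
```

For a linear model without interactions every gray curve is parallel, so the partial dependence slope equals the wt coefficient; for the boosted trees here, the spread of the gray curves is what hints at interactions.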

What is the aggregated effect of the followers feature over 500 examples?

pdp_followers <- model_profile(explainer_xgb,
  N = 500,
  variables = "followers"
)

as_tibble(pdp_followers$agr_profiles) %>%
  ggplot(aes(`_x_`, `_yhat_`)) +
  geom_line(
    data = as_tibble(pdp_followers$cp_profiles),
    aes(followers, group = `_ids_`),
    size = 0.5, alpha = 0.1, color = "gray30"
  ) +
  geom_line(size = 1.2, alpha = 0.8, color = "darkblue") +
  labs(x = "Artist Followers", y = "Predicted Popularity")

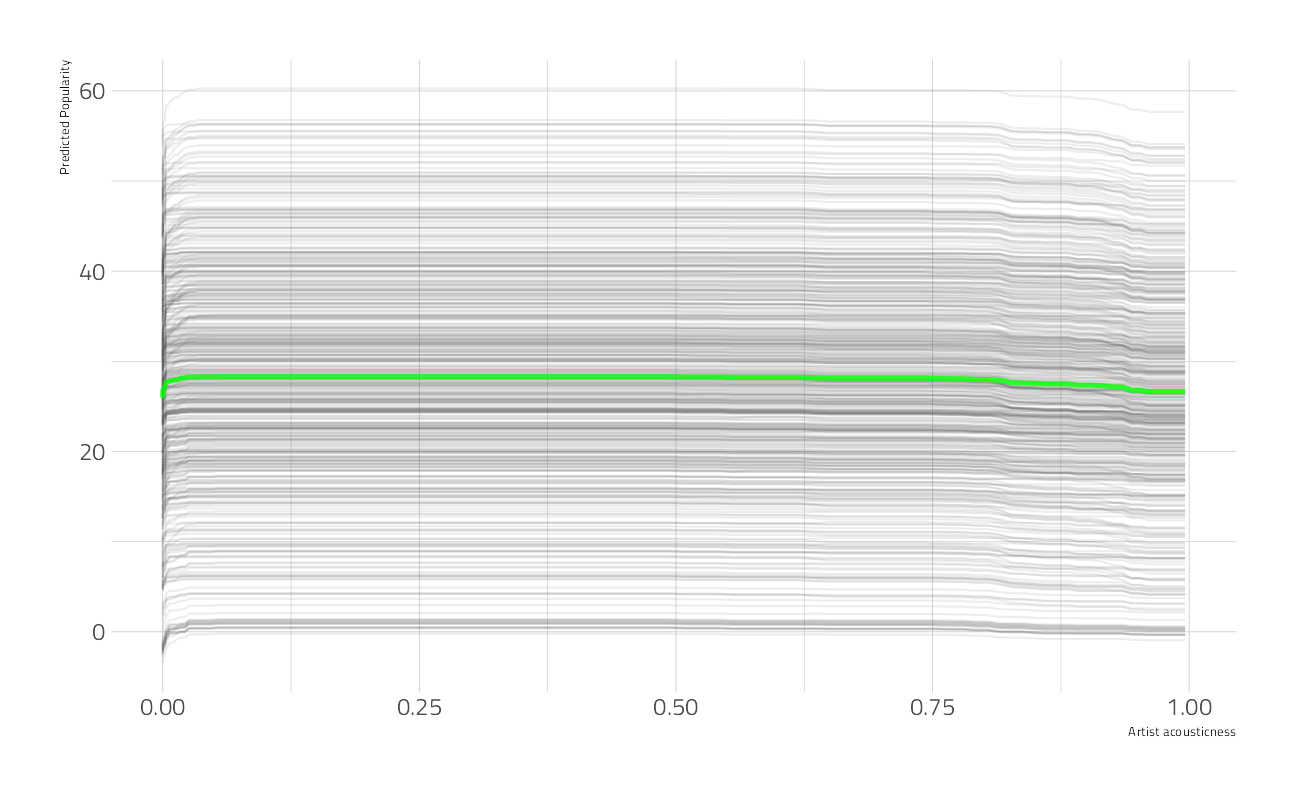

What is the aggregated effect of the acousticness feature over 500 examples?

pdp_acousticness <- model_profile(explainer_xgb,
  N = 500,
  variables = "acousticness"
)

as_tibble(pdp_acousticness$agr_profiles) %>%
  ggplot(aes(`_x_`, `_yhat_`)) +
  geom_line(
    data = as_tibble(pdp_acousticness$cp_profiles),
    aes(acousticness, group = `_ids_`),
    size = 0.5, alpha = 0.1, color = "gray30"
  ) +
  geom_line(size = 1.2, alpha = 0.8, color = "green") +
  labs(x = "Track acousticness", y = "Predicted Popularity")

What is the aggregated effect of the duration feature over 500 examples?

pdp_duration <- model_profile(explainer_xgb,
  N = 500,
  variables = "duration_ms"
)

as_tibble(pdp_duration$agr_profiles) %>%
  ggplot(aes(`_x_`, `_yhat_`)) +
  geom_line(
    data = as_tibble(pdp_duration$cp_profiles),
    aes(duration_ms, group = `_ids_`),
    size = 0.5, alpha = 0.1, color = "gray30"
  ) +
  geom_line(size = 1.2, alpha = 0.8, color = "darkgreen") +
  labs(x = "Song Duration (ms)", y = "Predicted Popularity")

Machine Learning: XGBoost Model 3

Let’s add our interactions from above and introduce dimensionality reduction.

Recipe

Principal component analysis (PCA) is a transformation of a group of variables that produces a new set of artificial features, or components. These components are designed to capture the maximum amount of information (i.e., variance) in the original variables. The components are also uncorrelated with one another, which means they can be used to combat large inter-variable correlations in a data set.
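A base R sketch of the same idea with prcomp() on a few correlated mtcars columns: standardize, rotate, keep the smallest number of components that reaches 90% of the total variance (as threshold = 0.9 does in step_pca()), and check that the resulting scores are uncorrelated:

```r
# Standardize first, as step_normalize() does before step_pca()
X <- scale(mtcars[, c("mpg", "disp", "hp", "wt", "qsec")])

pca <- prcomp(X, center = FALSE, scale. = FALSE)

# Proportion of total variance captured by each component
var_explained <- pca$sdev^2 / sum(pca$sdev^2)

# Smallest number of components reaching 90% of the variance
n_comp <- which(cumsum(var_explained) >= 0.9)[1]
scores <- pca$x[, seq_len(n_comp), drop = FALSE]

n_comp
round(cor(scores), 10) # off-diagonal correlations are zero
```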

(advanced_rec <-

basic_rec %>%

step_interact(terms = ~ release_year:energy) %>%

step_interact(terms = ~ release_year:followers) %>%

step_normalize(all_numeric_predictors()) %>%

step_pca(all_numeric_predictors(), threshold = 0.9))Recipe

Inputs:

role #variables

outcome 1

predictor 14

Operations:

Tokenization for genres

Text filtering for genres

Term frequency with genres

Variable mutation for contains("tf_genres")

Median Imputation for followers

Log transformation on followers, duration_ms

Interactions with release_year:energy

Interactions with release_year:followers

Centering and scaling for all_numeric_predictors()

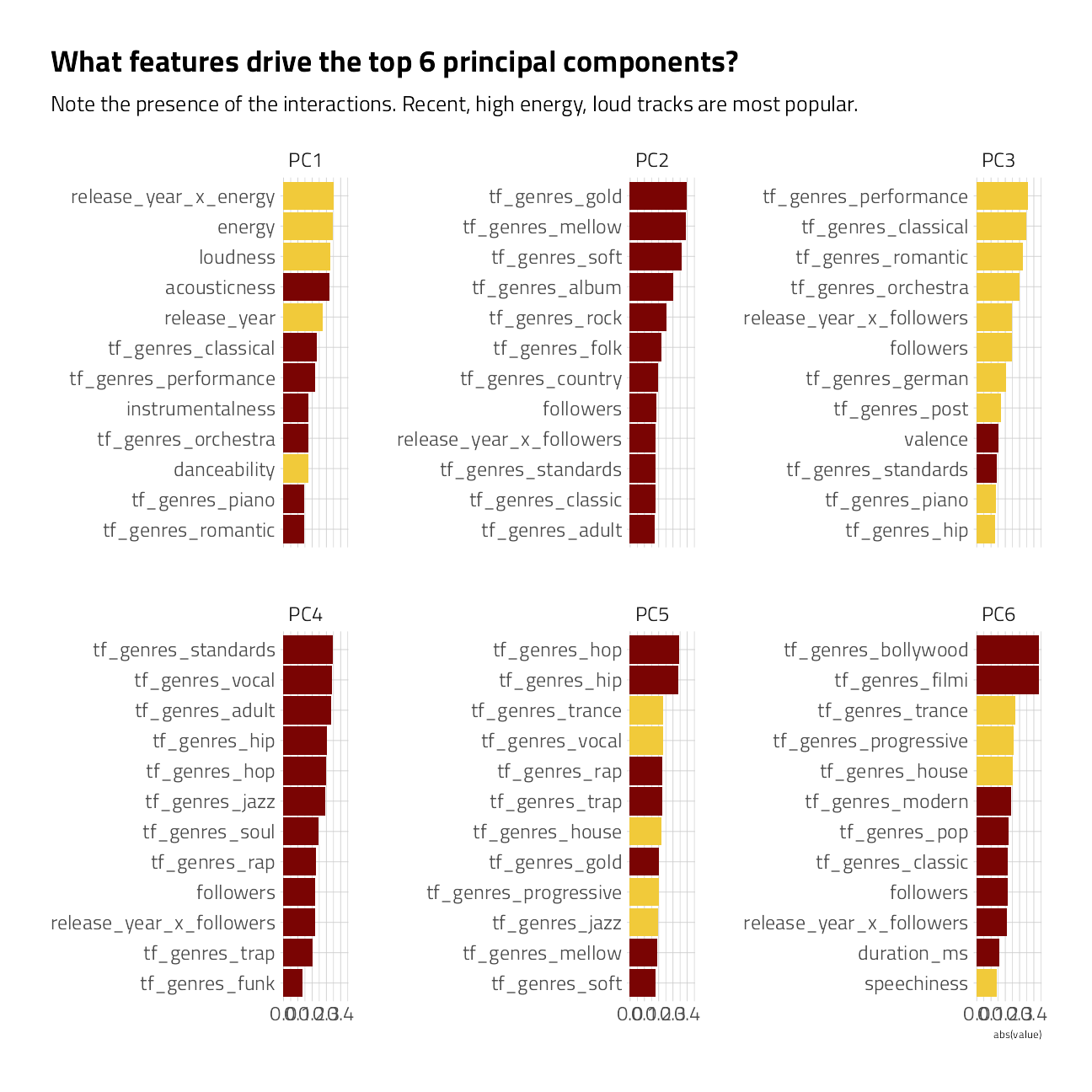

No PCA components were extracted.tidy_coef <- tidy(prep(advanced_rec), 10, type = "coef")

tidy_coef %>%

filter(component %in% paste0("PC", 1:6)) %>%

group_by(component) %>%

slice_max(abs(value), n = 12) %>%

ungroup() %>%

mutate(terms = tidytext::reorder_within(terms, abs(value), component)) %>%

ggplot(aes(abs(value), terms, fill = value > 0)) +

geom_col(show.legend = FALSE) +

facet_wrap(~component, scales = "free_y") +

tidytext::scale_y_reordered() +

labs(

title = "What features drive the top 6 principal components?",

subtitle = "Note the presence of the interactions. Recent, high energy, loud tracks are most popular.",

y = NULL

)

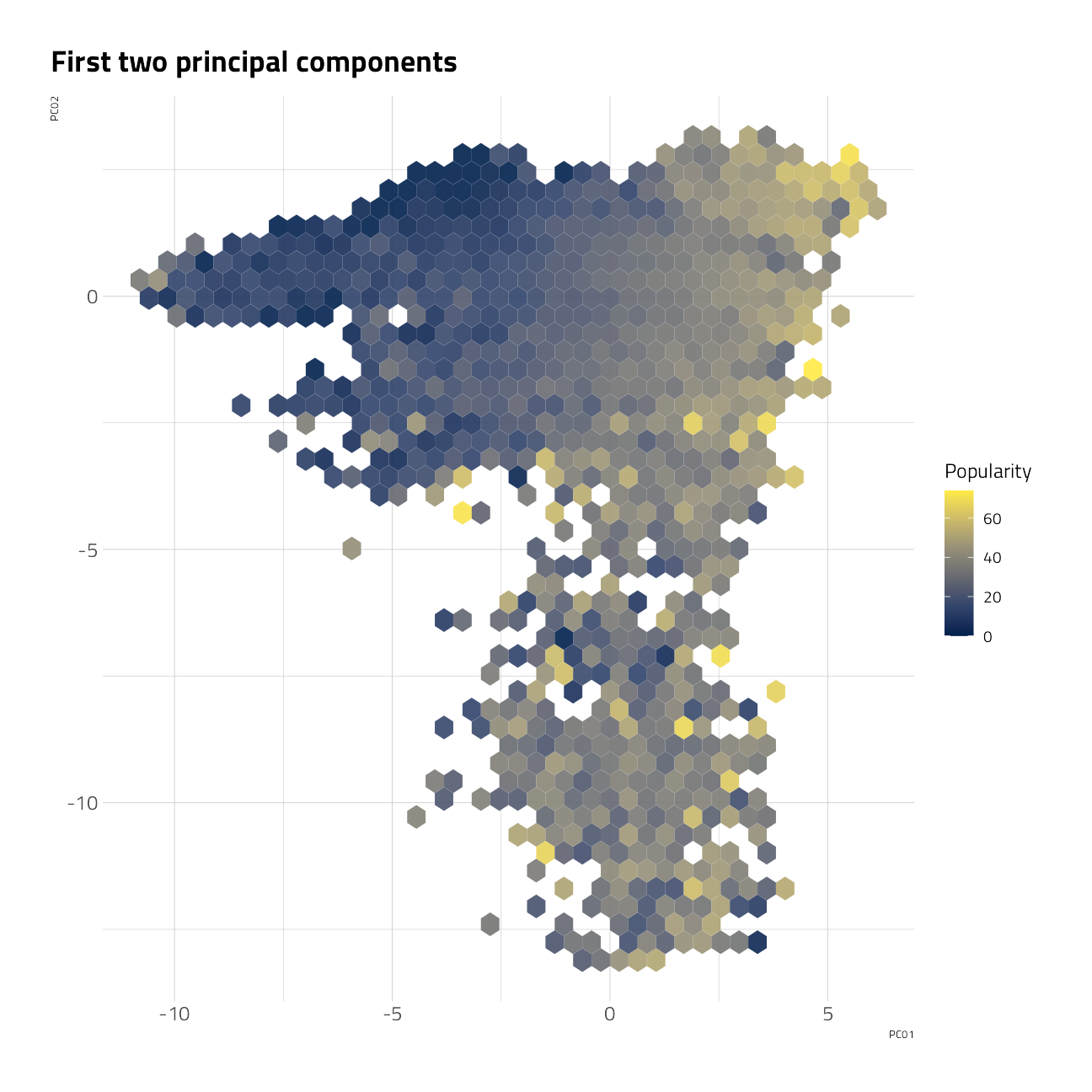

bake(prep(advanced_rec), new_data = NULL) %>%
  ggplot(aes(PC01, PC02, z = popularity)) +
  stat_summary_hex(alpha = 0.9, bins = 40) +
  scale_fill_viridis_c(option = "E") +
  labs(
    fill = "Popularity",
    title = "First two principal components"
  )

Tuning and Performance

The clean advanced preprocessor with interactions should yield a better model. Let’s see.

(xgboost_spec <- boost_tree(
  trees = tune(),
  min_n = tune(),
  learn_rate = 0.02,
  tree_depth = tune(),
  sample_size = tune(),
  loss_reduction = tune()
) %>%
  set_engine("xgboost") %>%
  set_mode("regression"))

Boosted Tree Model Specification (regression)

Main Arguments:
  trees = tune()
  min_n = tune()
  tree_depth = tune()
  learn_rate = 0.02
  loss_reduction = tune()
  sample_size = tune()

Computational engine: xgboost

cv_res_xgboost <-
  workflow() %>%
  add_recipe(advanced_rec) %>%
  add_model(xgboost_spec) %>%
  tune_race_anova(
    resamples = folds,
    grid = 10,
    control = control_race(
      verbose = FALSE,
      save_pred = TRUE,
      save_workflow = TRUE,
      extract = extract_model,
      parallel_over = "resamples"
    ),
    metrics = mset
  )

autoplot(cv_res_xgboost)

collect_metrics(cv_res_xgboost) %>%
  arrange(mean)

The best mean RMSE averaged across folds is about 12.1.

xgb_wf_best <-
  workflow() %>%
  add_recipe(advanced_rec) %>%
  add_model(xgboost_spec) %>%
  finalize_workflow(select_best(cv_res_xgboost))

fit_best <- xgb_wf_best %>%
  parsnip::fit(data = train_df)

augment(fit_best, train_df) %>%
  select(id, popularity, .pred) %>%
  rmse(truth = popularity, estimate = .pred)

We’re out of time. This will be as good as it gets. Our final submission:

augment(fit_best, test_df) %>%
  select(id, popularity = .pred) %>%
  write_csv(file = path_export)

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "Third model"'))

It turns out that an RMSE in the neighborhood of 10 is competitive with the others on the leaderboard.

sessionInfo()

R version 4.1.1 (2021-08-10)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 10 x64 (build 22000)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252

[2] LC_CTYPE=English_United States.1252

[3] LC_MONETARY=English_United States.1252

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] xgboost_1.4.1.1 vctrs_0.3.8 rlang_0.4.11

[4] DALEXtra_2.1.1 DALEX_2.3.0 SHAPforxgboost_0.1.1

[7] vip_0.3.2 baguette_0.1.1 themis_0.1.4

[10] textrecipes_0.4.1 finetune_0.1.0 yardstick_0.0.8

[13] workflowsets_0.1.0 workflows_0.2.3 tune_0.1.6

[16] rsample_0.1.0 recipes_0.1.17 parsnip_0.1.7.900

[19] modeldata_0.1.1 infer_1.0.0 dials_0.0.10

[22] scales_1.1.1 broom_0.7.9 tidymodels_0.1.4

[25] corrr_0.4.3 hrbrthemes_0.8.0 forcats_0.5.1

[28] stringr_1.4.0 dplyr_1.0.7 purrr_0.3.4

[31] readr_2.0.2 tidyr_1.1.4 tibble_3.1.4

[34] ggplot2_3.3.5 tidyverse_1.3.1 workflowr_1.6.2

loaded via a namespace (and not attached):

[1] rappdirs_0.3.3 SnowballC_0.7.0 R.methodsS3_1.8.1

[4] earth_5.3.1 ragg_1.1.3 bit64_4.0.5

[7] knitr_1.36 R.utils_2.11.0 styler_1.6.2

[10] data.table_1.14.2 rpart_4.1-15 hardhat_0.1.6

[13] doParallel_1.0.16 generics_0.1.0 GPfit_1.0-8

[16] usethis_2.0.1 RANN_2.6.1 future_1.22.1

[19] conflicted_1.0.4 bit_4.0.4 tzdb_0.1.2

[22] tokenizers_0.2.1 xml2_1.3.2 lubridate_1.7.10

[25] httpuv_1.6.3 assertthat_0.2.1 viridis_0.6.1

[28] gower_0.2.2 xfun_0.26 hms_1.1.1

[31] jquerylib_0.1.4 TSP_1.1-10 evaluate_0.14

[34] promises_1.2.0.1 fansi_0.5.0 dbplyr_2.1.1

[37] readxl_1.3.1 DBI_1.1.1 ellipsis_0.3.2

[40] ggpubr_0.4.0 backports_1.2.1 libcoin_1.0-9

[43] here_1.0.1 abind_1.4-5 cachem_1.0.6

[46] withr_2.4.2 ggforce_0.3.3 checkmate_2.0.0

[49] vroom_1.5.5 lime_0.5.2 crayon_1.4.1

[52] glmnet_4.1-2 pkgconfig_2.0.3 labeling_0.4.2

[55] tweenr_1.0.2 nlme_3.1-152 seriation_1.3.0

[58] nnet_7.3-16 globals_0.14.0 lifecycle_1.0.1

[61] registry_0.5-1 extrafontdb_1.0 ingredients_2.2.0

[64] unbalanced_2.0 modelr_0.1.8 tidytext_0.3.2

[67] cellranger_1.1.0 Cubist_0.3.0 rprojroot_2.0.2

[70] polyclip_1.10-0 partykit_1.2-15 Matrix_1.3-4

[73] carData_3.0-4 reprex_2.0.1 whisker_0.4

[76] png_0.1-7 viridisLite_0.4.0 ROSE_0.0-4

[79] R.oo_1.24.0 pROC_1.18.0 mlr_2.19.0

[82] shape_1.4.6 parallelly_1.28.1 R.cache_0.15.0

[85] rstatix_0.7.0 ggsignif_0.6.3 hexbin_1.28.2

[88] magrittr_2.0.1 ParamHelpers_1.14 plyr_1.8.6

[91] compiler_4.1.1 RColorBrewer_1.1-2 plotrix_3.8-2

[94] cli_3.0.1 DiceDesign_1.9 listenv_0.8.0

[97] janeaustenr_0.1.5 Formula_1.2-4 mgcv_1.8-36

[100] MASS_7.3-54 tidyselect_1.1.1 stringi_1.7.5

[103] textshaping_0.3.5 butcher_0.1.5 highr_0.9

[106] yaml_2.2.1 grid_4.1.1 sass_0.4.0

[109] fastmatch_1.1-3 tools_4.1.1 future.apply_1.8.1

[112] parallel_4.1.1 rio_0.5.27 rstudioapi_0.13

[115] foreach_1.5.1 foreign_0.8-81 inum_1.0-4

[118] git2r_0.28.0 gridExtra_2.3 prodlim_2019.11.13

[121] C50_0.1.5 farver_2.1.0 digest_0.6.28

[124] FNN_1.1.3 ggtext_0.1.1 lava_1.6.10

[127] TeachingDemos_2.12 gridtext_0.1.4 Rcpp_1.0.7

[130] car_3.0-11 later_1.3.0 httr_1.4.2

[133] gdtools_0.2.3 colorspace_2.0-2 rvest_1.0.1

[136] fs_1.5.0 reticulate_1.22 splines_4.1.1

[139] rematch2_2.1.2 systemfonts_1.0.2 jsonlite_1.7.2

[142] BBmisc_1.11 timeDate_3043.102 plotmo_3.6.1

[145] ipred_0.9-12 R6_2.5.1 lhs_1.1.3

[148] pillar_1.6.3 htmltools_0.5.2 glue_1.4.2

[151] fastmap_1.1.0 class_7.3-19 codetools_0.2-18

[154] mvtnorm_1.1-2 furrr_0.2.3 utf8_1.2.2

[157] lattice_0.20-44 bslib_0.3.0 curl_4.3.2

[160] zip_2.2.0 openxlsx_4.2.4 Rttf2pt1_1.3.8

[163] survival_3.2-11 parallelMap_1.5.1 rmarkdown_2.11

[166] munsell_0.5.0 iterators_1.0.13 haven_2.4.3

[169] reshape2_1.4.4 gtable_0.3.0 extrafont_0.17