Last updated: 2021-10-13

Checks:

Knit directory: myTidyTuesday/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it’s best to always run the code in an empty environment.

The command set.seed(20210907) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version bb48ad1. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: .Rproj.user/

Ignored: catboost_info/

Ignored: data/2021-10-12/

Ignored: data/2021-10-13/

Ignored: data/CNHI_Excel_Chart.xlsx

Ignored: data/CommunityTreemap.jpeg

Ignored: data/Community_Roles.jpeg

Ignored: data/YammerDigitalDataScienceMembership.xlsx

Ignored: data/accountchurn.rds

Ignored: data/acs_poverty.rds

Ignored: data/advancedaccountchurn.rds

Ignored: data/airbnbcatboost.rds

Ignored: data/austinHomeValue.rds

Ignored: data/austinHomeValue2.rds

Ignored: data/australiaweather.rds

Ignored: data/baseballHRxgboost.rds

Ignored: data/baseballHRxgboost2.rds

Ignored: data/fmhpi.rds

Ignored: data/grainstocks.rds

Ignored: data/hike_data.rds

Ignored: data/nber_rs.rmd

Ignored: data/netflixTitles2.rds

Ignored: data/pets.rds

Ignored: data/pets2.rds

Ignored: data/spotifyxgboost.rds

Ignored: data/spotifyxgboostadvanced.rds

Ignored: data/us_states.rds

Ignored: data/us_states_hexgrid.geojson

Ignored: data/weatherstats_toronto_daily.csv

Untracked files:

Untracked: code/YammerReach.R

Untracked: code/work list batch targets.R

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/2021_08_10_sliced.Rmd) and HTML (docs/2021_08_10_sliced.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |
| --- | --- | --- | --- | --- |
| Rmd | bb48ad1 | opus1993 | 2021-10-13 | adopt common color palette |

Season 1 Episode 11 of #SLICED features a multi-class challenge with Zillow data on Austin, TX area property listings. Contestants are to use a variety of features to predict which pricing category houses are listed in. Each row represents a unique property valuation. The evaluation metric for submissions in this competition is classification mean logloss.
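For reference, the competition metric can be written out by hand. The following is a minimal base R sketch, not the yardstick implementation; the function name `mean_log_loss` and the clipping constant `eps` are assumptions made for illustration.

```r
# Multiclass mean log loss: the average negative log of the probability
# that the model assigned to the true class of each row.
# `truth` is a factor; `probs` is a numeric matrix with one column per
# class, with column names equal to levels(truth).
mean_log_loss <- function(truth, probs, eps = 1e-15) {
  stopifnot(is.factor(truth), nrow(probs) == length(truth))
  # put the columns in the same order as the factor levels
  probs <- probs[, levels(truth), drop = FALSE]
  # clip to avoid log(0) when a class was given zero probability
  probs <- pmin(pmax(probs, eps), 1 - eps)
  # pick, for each row, the probability of its true class
  idx <- cbind(seq_along(truth), as.integer(truth))
  -mean(log(probs[idx]))
}

# hypothetical toy data: three rows, three classes, uniform predictions
truth <- factor(c("a", "b", "c"), levels = c("a", "b", "c"))
probs <- matrix(1 / 3, nrow = 3, ncol = 3,
                dimnames = list(NULL, c("a", "b", "c")))
mean_log_loss(truth, probs)
```

A useful baseline follows from this: a model that assigns equal probability to all five price classes scores log(5), about 1.61, so any useful submission should come in below that.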

SLICED is like the TV show Chopped but for data science. The four competitors get a never-before-seen dataset and two hours to code a solution to a prediction challenge. Contestants get points for the best model plus bonus points for data visualization, votes from the audience, and more.

The audience is invited to participate as well. This file consists of my submissions with cleanup and commentary added.

To make the best use of the resources that we have, we will explore the data set for features to select those with the most predictive power, build a random forest to confirm the recipe, and then build one or more ensemble models. If there is time, we will craft some visuals for model explainability.

Let’s load up packages:

suppressPackageStartupMessages({

library(tidyverse) # clean and transform rectangular data

library(hrbrthemes) # plot theming

library(lubridate) # date and time transformations

library(patchwork)

library(tidymodels) # machine learning tools

library(finetune) # racing methods for accelerating hyperparameter tuning

library(textrecipes)

library(tidylo)

library(tidytext)

library(themis) # ml prep tools for handling unbalanced datasets

library(baguette) # ml tools for bagged decision tree models

library(vip) # interpret model performance

})

source(here::here("code","_common.R"),

verbose = FALSE,

local = knitr::knit_global())

ggplot2::theme_set(theme_jim(base_size = 12))

#create a data directory

data_dir <- here::here("data",Sys.Date())

if (!file.exists(data_dir)) dir.create(data_dir)

# set a competition metric

mset <- metric_set(mn_log_loss, accuracy, roc_auc)

# set the competition name from the web address

competition_name <- "sliced-s01e11-semifinals"

zipfile <- paste0(data_dir,"/", competition_name, ".zip")

path_export <- here::here("data",Sys.Date(),paste0(competition_name,".csv"))

Get the Data

A quick reminder before downloading the dataset: Go to the web site and accept the competition terms!!!

We have basic shell commands available to interact with Kaggle here:

# from the Kaggle api https://github.com/Kaggle/kaggle-api

# the leaderboard

shell(glue::glue("kaggle competitions leaderboard { competition_name } -s"))

# the files to download

shell(glue::glue("kaggle competitions files -c { competition_name }"))

# the command to download files

shell(glue::glue("kaggle competitions download -c { competition_name } -p { data_dir }"))

# unzip the files received

shell(glue::glue("unzip { zipfile } -d { data_dir }"))

We are reading in the contents of the datafiles here.

train_df <- arrow::read_csv_arrow(file = glue::glue("{ data_dir }/train.csv")) %>%

mutate(zip_code = str_extract(description, "[:digit:]{5}")) %>%

mutate(priceRange = fct_reorder(priceRange, parse_number(priceRange))) %>%

mutate(description = str_to_lower(description)) %>%

mutate(across(c("city", "homeType", "zip_code", "hasSpa"), as_factor))

holdout_df <- arrow::read_csv_arrow(file = glue::glue("{ data_dir }/test.csv")) %>%

mutate(zip_code = str_extract(description, "[:digit:]{5}")) %>%

mutate(description = str_to_lower(description)) %>%

mutate(across(c("city", "homeType", "zip_code", "hasSpa"), as_factor))

Some questions to answer here: Which features have missing data and may require imputation? What does the outcome variable look like, in terms of imbalance?

skimr::skim(train_df)

The zip_code parsing was not perfect here. If we choose to use zip codes as features, we could easily impute the missing ones with k-nearest neighbors on latitude and longitude.
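To make the imputation idea concrete, here is a minimal base R sketch that fills each missing zip code with the zip code of the single nearest property that has one. The helper name `impute_zip_nn` and the toy data are hypothetical, and squared distance in raw degrees is only a rough stand-in for true distance. In a recipe, `step_impute_knn()` would be the more usual route.

```r
# Fill a missing zip_code with the zip_code of the nearest property
# (1-nearest-neighbor on latitude and longitude).
impute_zip_nn <- function(df) {
  known <- !is.na(df$zip_code)   # donors are fixed before the loop starts
  for (i in which(!known)) {
    d2 <- (df$latitude[known] - df$latitude[i])^2 +
      (df$longitude[known] - df$longitude[i])^2
    df$zip_code[i] <- df$zip_code[known][which.min(d2)]
  }
  df
}

# hypothetical toy data with two unparsed zip codes
toy <- data.frame(
  latitude = c(30.20, 30.21, 30.40, 30.41),
  longitude = c(-97.80, -97.81, -97.70, -97.71),
  zip_code = c("78745", NA, "78758", NA),
  stringsAsFactors = FALSE
)
filled <- impute_zip_nn(toy)
filled$zip_code
```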

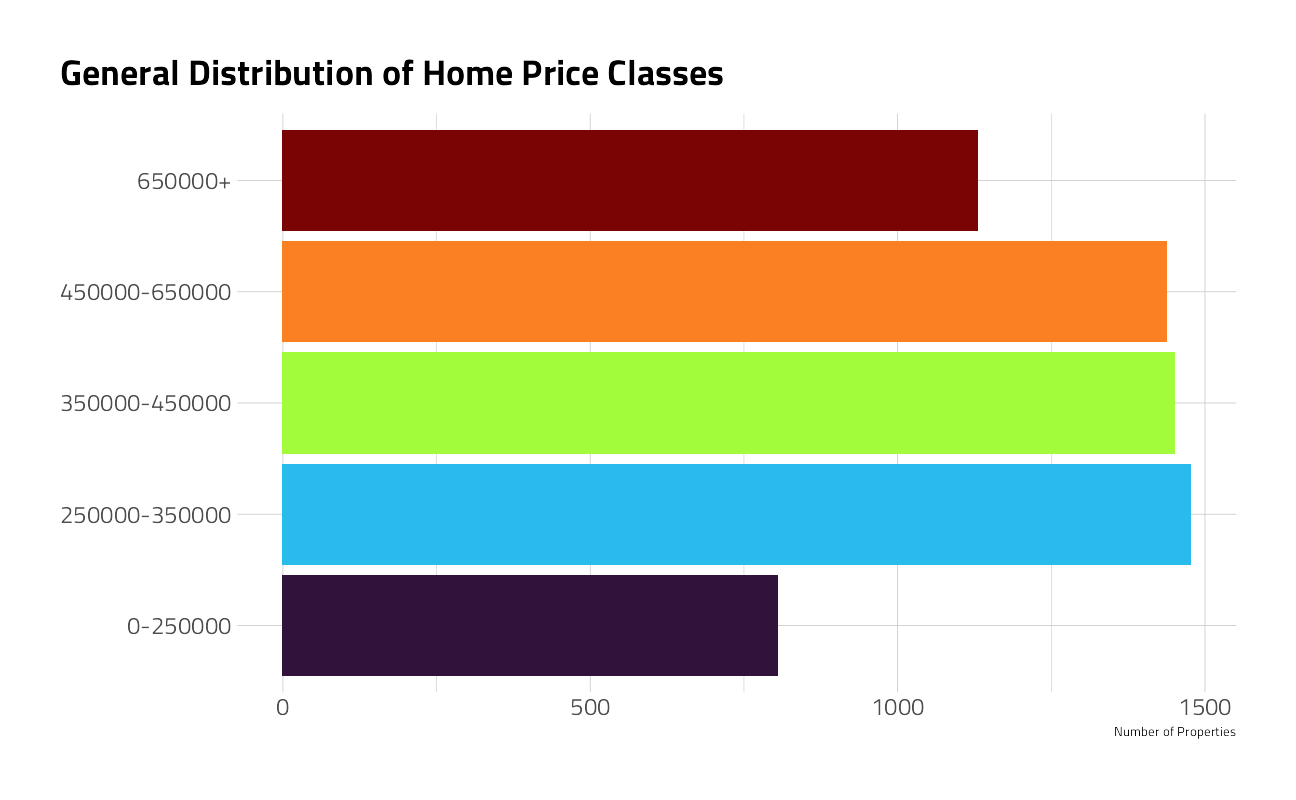

Outcome variable priceRange has five classes.

Outcome Variable Distribution

The price range class

train_df %>%

count(priceRange) %>%

mutate(priceRange = fct_reorder(priceRange, parse_number(as.character(priceRange)))) %>%

ggplot(aes(n, priceRange, fill = priceRange)) +

geom_col(show.legend = FALSE) +

scale_fill_viridis_d(option = "H") +

labs(title = "General Distribution of Home Price Classes", fill = NULL, y = NULL, x = "Number of Properties")

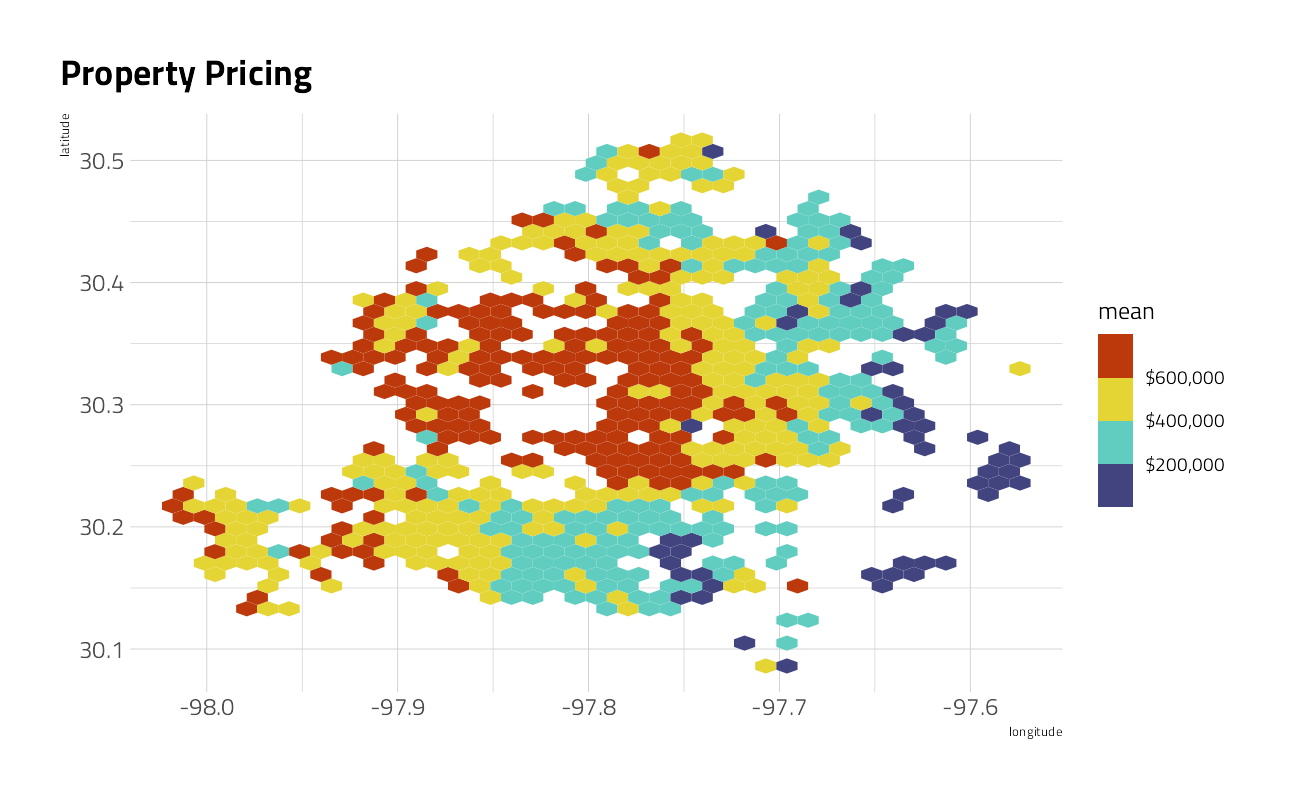

train_df %>%

mutate(priceRange = parse_number(as.character(priceRange)) + 100000) %>%

ggplot(aes(longitude, latitude, z = priceRange)) +

stat_summary_hex(bins = 40) +

scale_fill_viridis_b(option = "H", labels = scales::dollar) +

labs(fill = "mean", title = "Property Pricing")

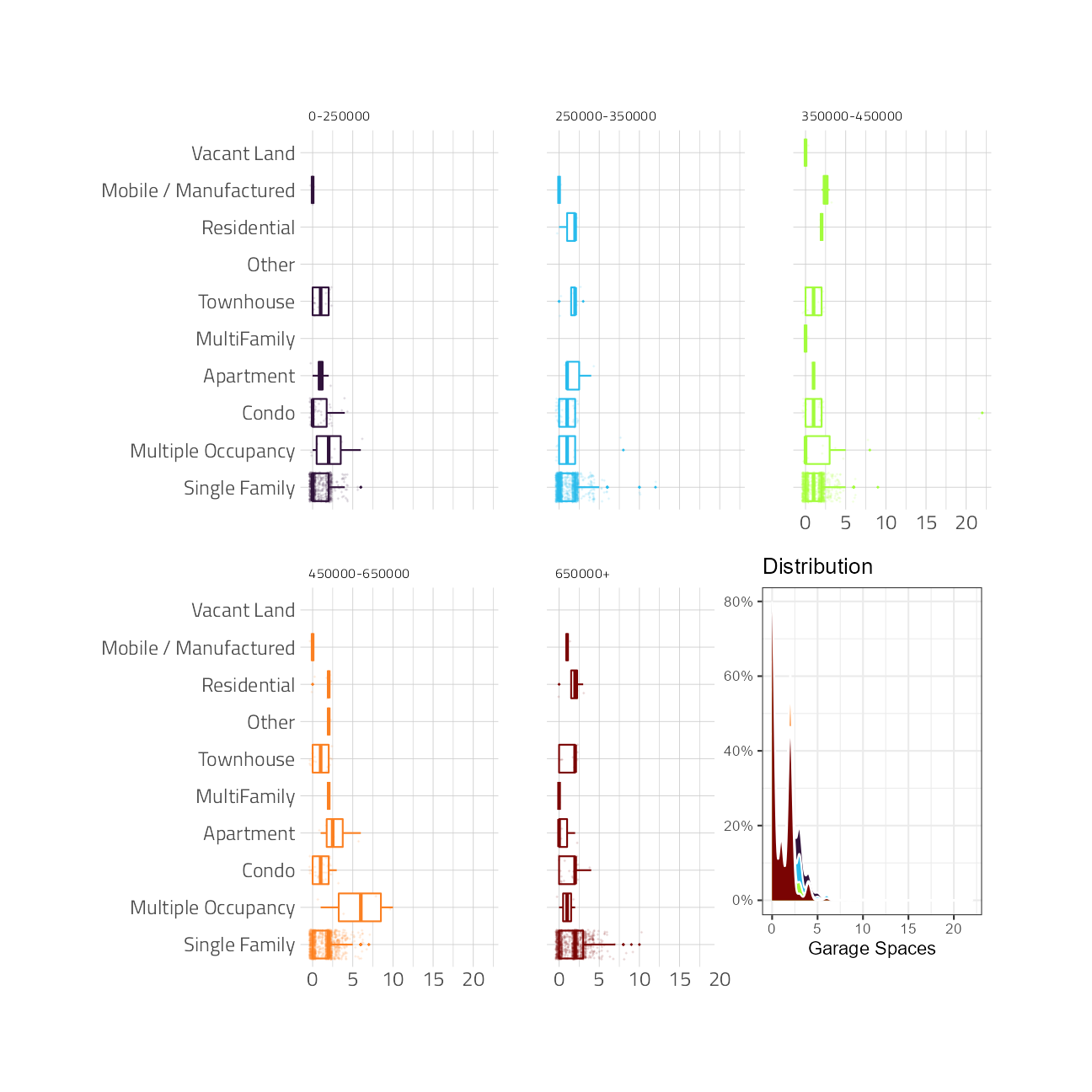

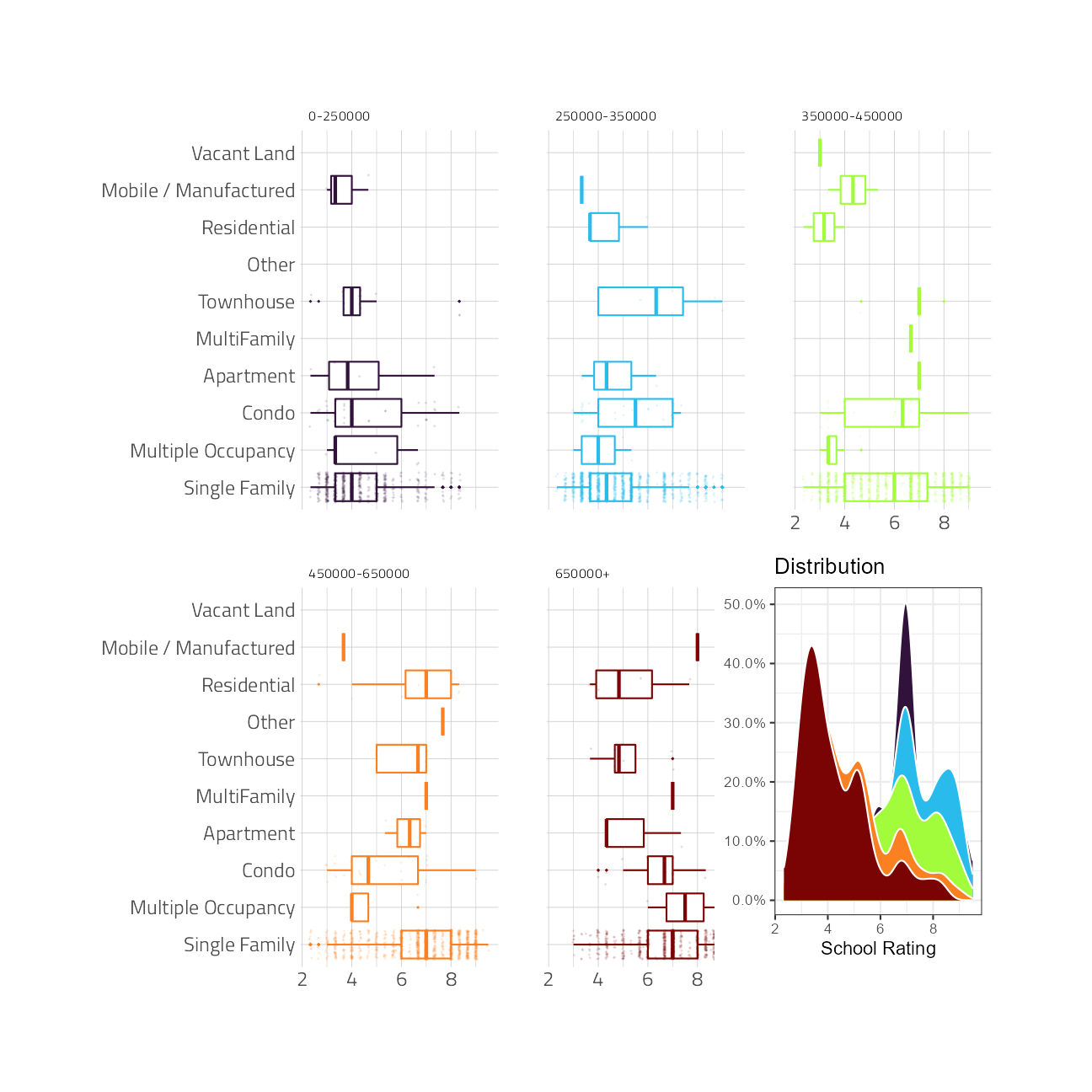

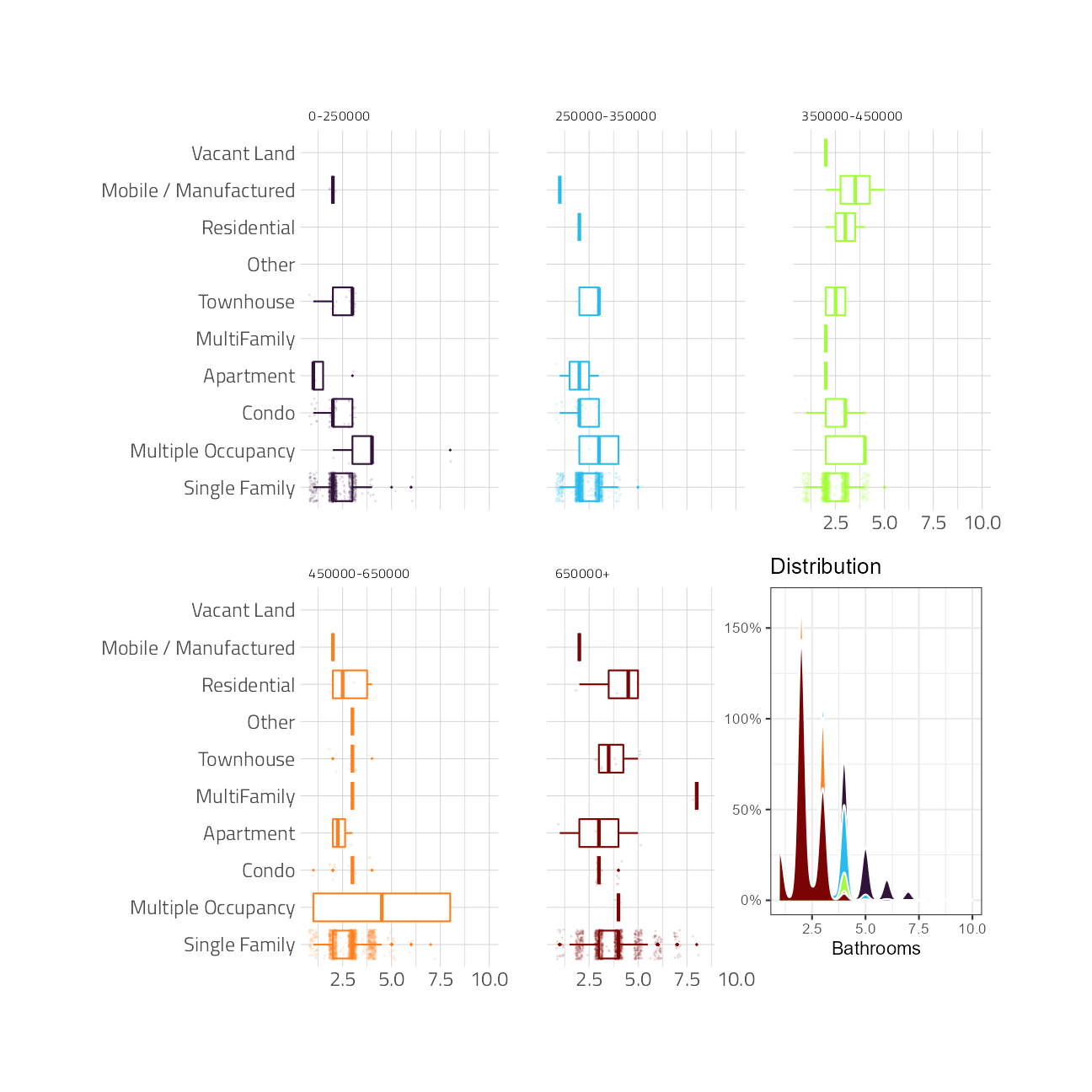

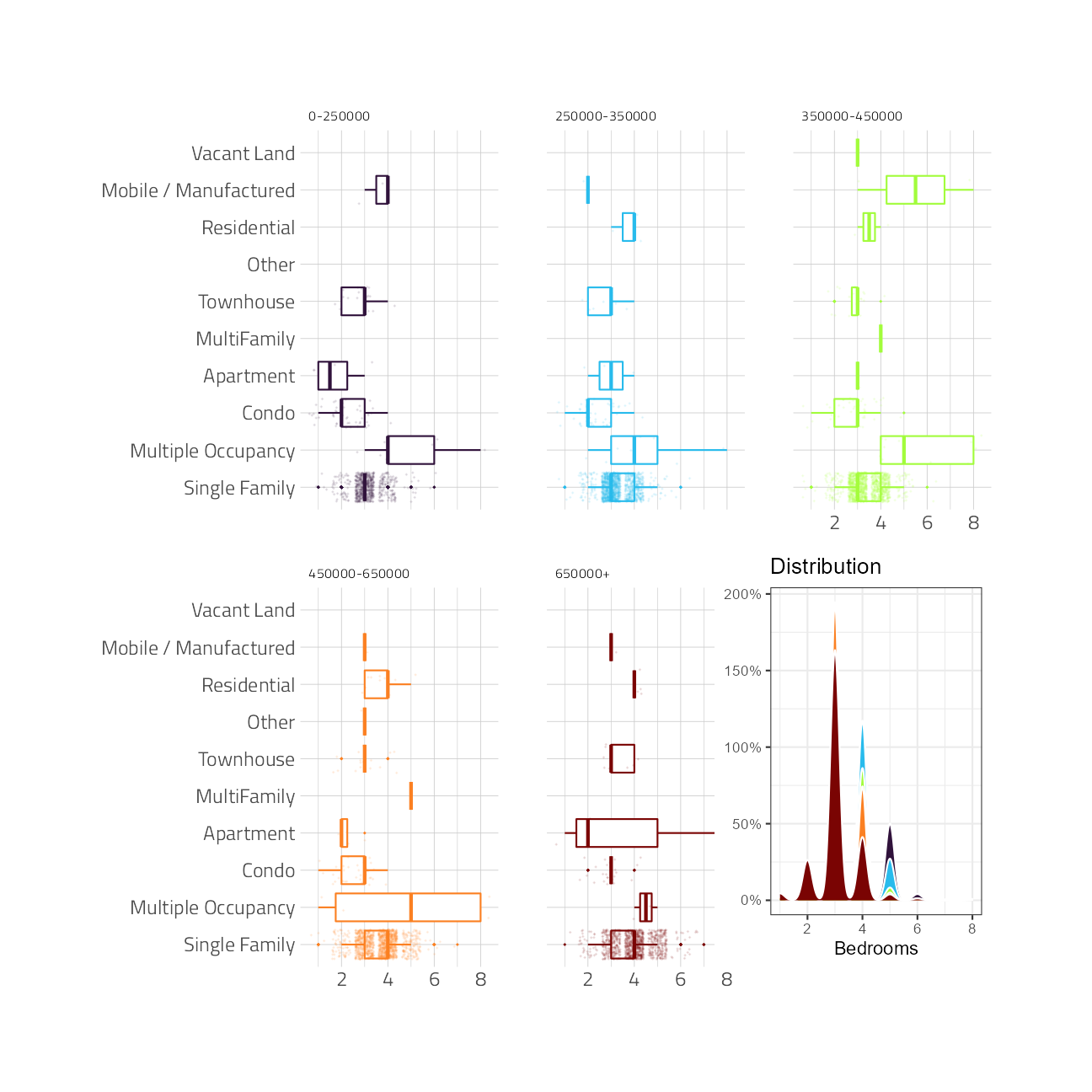

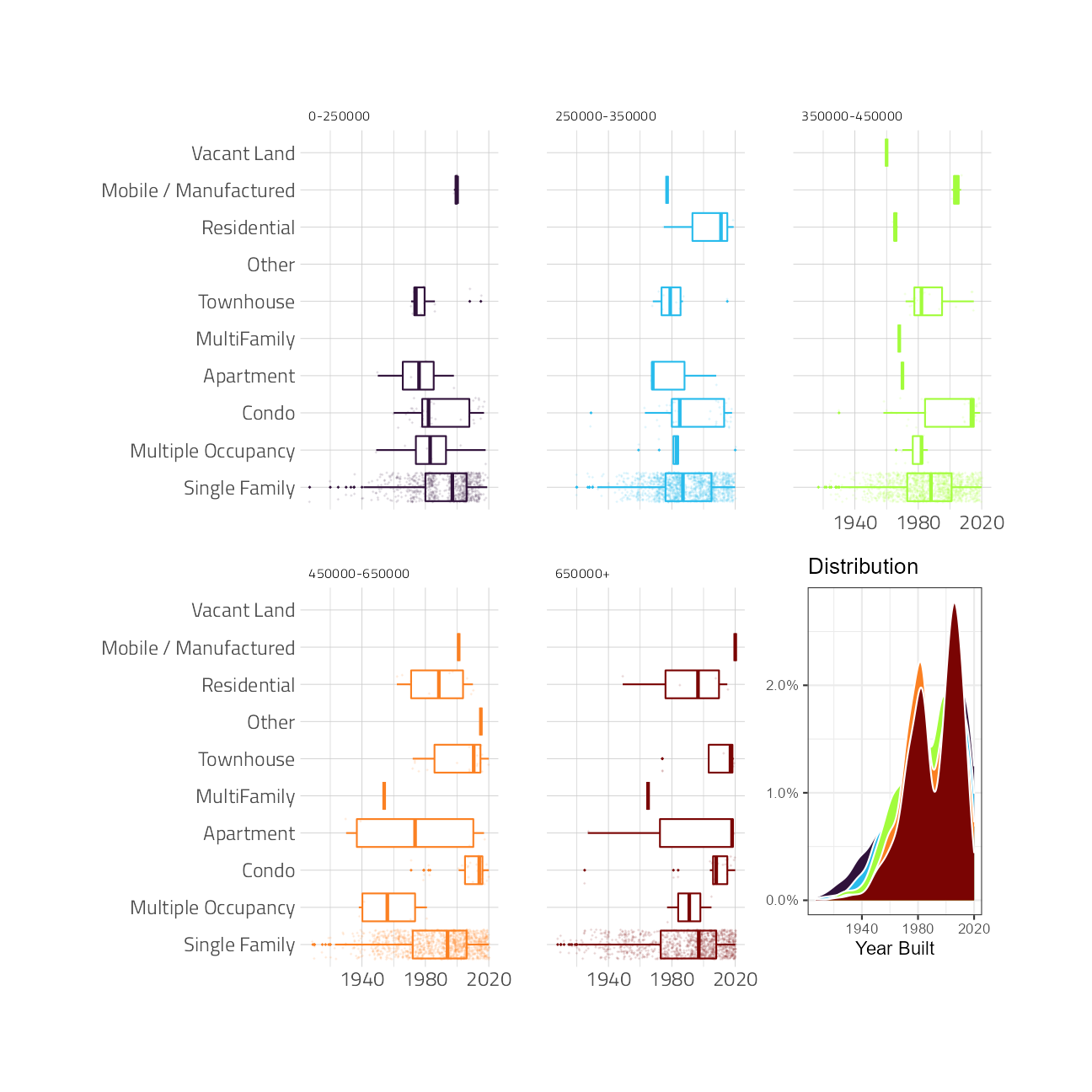

Numeric features

numeric_plot <- function(tbl, numeric_var, factor_var = priceRange, color_var = priceRange) {

tbl %>%

ggplot(aes({{ numeric_var }}, {{ factor_var }},

color = {{ color_var }}

)) +

geom_boxplot(show.legend = FALSE, outlier.size = 0.1) +

geom_jitter(size = 0.1, alpha = 0.1, show.legend = FALSE) +

scale_color_viridis_d(option = "H") +

facet_wrap(vars(priceRange)) +

theme(

legend.position = "top",

strip.text.x = element_text(size = 24 / .pt)

) +

labs(x = NULL, y = NULL)

}

numeric_histogram <- function(tbl, numeric_var) {

tbl %>%

ggplot(aes({{ numeric_var }},

after_stat(density),

fill = fct_rev(priceRange)

)) +

geom_density(

position = "identity",

color = "white",

show.legend = FALSE

) +

scale_fill_viridis_d(option = "H") +

scale_y_continuous(labels = scales::percent) +

labs(y = NULL, x = NULL, title = "Distribution") +

theme_bw()

}

numeric_plot(train_df, garageSpaces, factor_var = homeType) +

inset_element(numeric_histogram(train_df, garageSpaces),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Garage Spaces")

numeric_plot(train_df, numOfPatioAndPorchFeatures, factor_var = homeType) +

inset_element(numeric_histogram(train_df, numOfPatioAndPorchFeatures),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Patio and Porch Features")

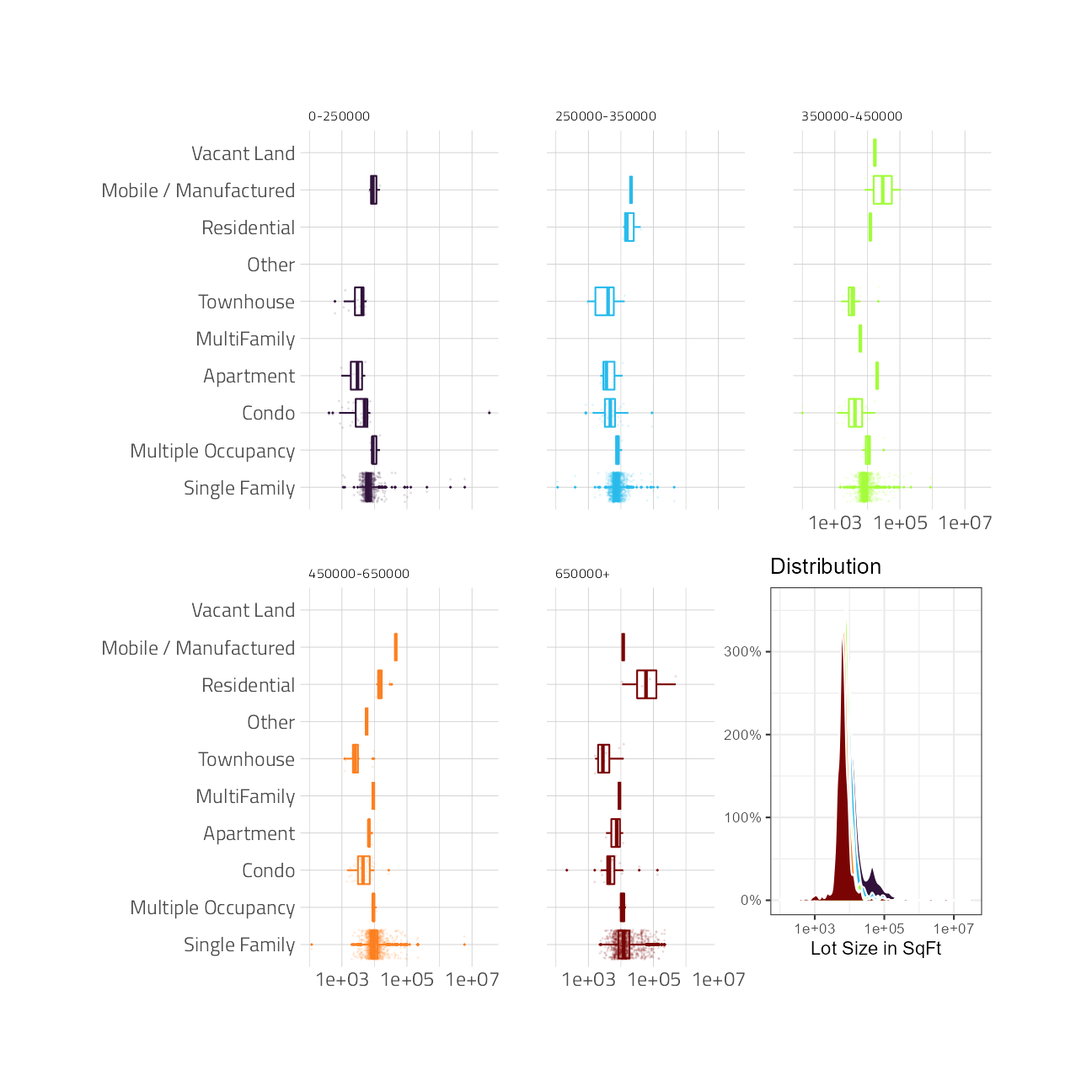

numeric_plot(train_df, lotSizeSqFt, factor_var = homeType) +

scale_x_log10() +

inset_element(numeric_histogram(train_df, lotSizeSqFt) +

scale_x_log10(),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Lot Size in SqFt")

numeric_plot(train_df, avgSchoolRating, factor_var = homeType) +

inset_element(numeric_histogram(train_df, avgSchoolRating),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "School Rating")

numeric_plot(train_df, MedianStudentsPerTeacher, factor_var = homeType) +

inset_element(numeric_histogram(train_df, MedianStudentsPerTeacher),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Median Students\nPer Teacher")

numeric_plot(train_df, numOfBathrooms, factor_var = homeType) +

inset_element(numeric_histogram(train_df, numOfBathrooms),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Bathrooms")

numeric_plot(train_df, numOfBedrooms, factor_var = homeType) +

inset_element(numeric_histogram(train_df, numOfBedrooms),

left = 0.6, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Bedrooms")

numeric_plot(train_df, yearBuilt, factor_var = homeType) +

scale_x_continuous(n.breaks = 4) +

inset_element(numeric_histogram(train_df, yearBuilt) +

scale_x_continuous(n.breaks = 4),

left = 0.66, bottom = 0, right = 1, top = 0.5

) +

labs(x = "Year Built")

timeline_plot <- function(tbl, numeric_var, factor_var = priceRange) {

tbl %>%

group_by({{ factor_var }}, decade = 10 * yearBuilt %/% 10) %>%

summarize(

n = n(),

mean = mean({{ numeric_var }}, na.rm = TRUE),

high = quantile({{ numeric_var }}, probs = 0.9, na.rm = TRUE),

low = quantile({{ numeric_var }}, probs = 0.1, na.rm = TRUE),

.groups = "drop"

) %>%

ggplot(aes(decade, mean, color = priceRange)) +

geom_line() +

geom_ribbon(aes(ymin = low, ymax = high, fill = priceRange),

alpha = .1, size = 0.1

) +

scale_y_log10(labels = scales::comma) +

scale_fill_viridis_d(option = "H")

}

timeline_plot(train_df, lotSizeSqFt) +

scale_x_continuous(breaks = seq(1900, 2020, 20)) +

theme(

legend.position = c(0.15, 0.8),

legend.background = element_rect(color = "white")

) +

labs(

y = "Mean Lot Size in Square Feet",

title = "There must be an outlier lot size transaction in the 1980s"

)

Maps

map_plot <- function(tbl, numeric_var) {

tbl %>%

group_by(

latitude = round(latitude, digits = 2),

longitude = round(longitude, digits = 2)

) %>%

summarize(

n = n(),

mean = mean({{ numeric_var }}, na.rm = TRUE),

.groups = "drop"

) %>%

filter(n < 10) %>%

ggplot(aes(longitude, latitude, z = mean)) +

stat_summary_hex(alpha = 0.9, bins = 20) +

scale_fill_viridis_b(option = "H", labels = scales::comma) +

coord_cartesian()

}

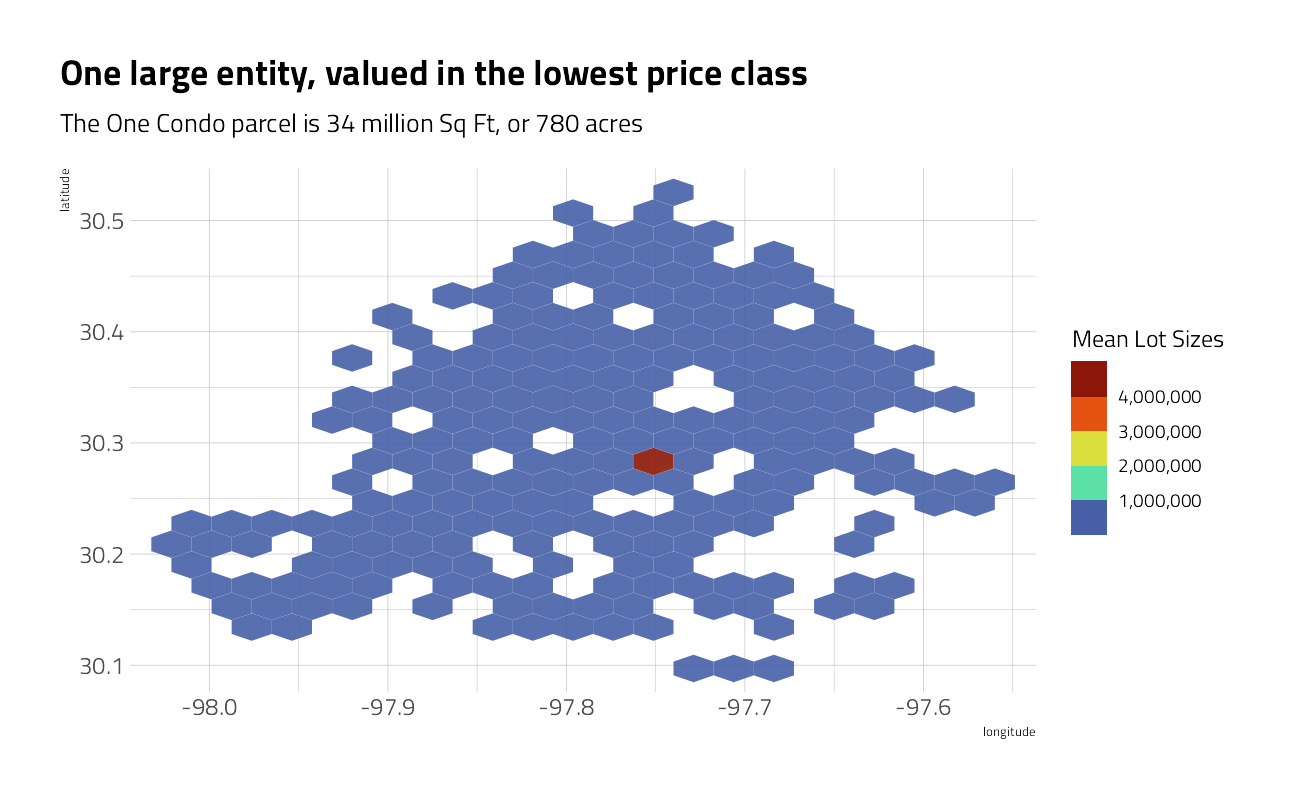

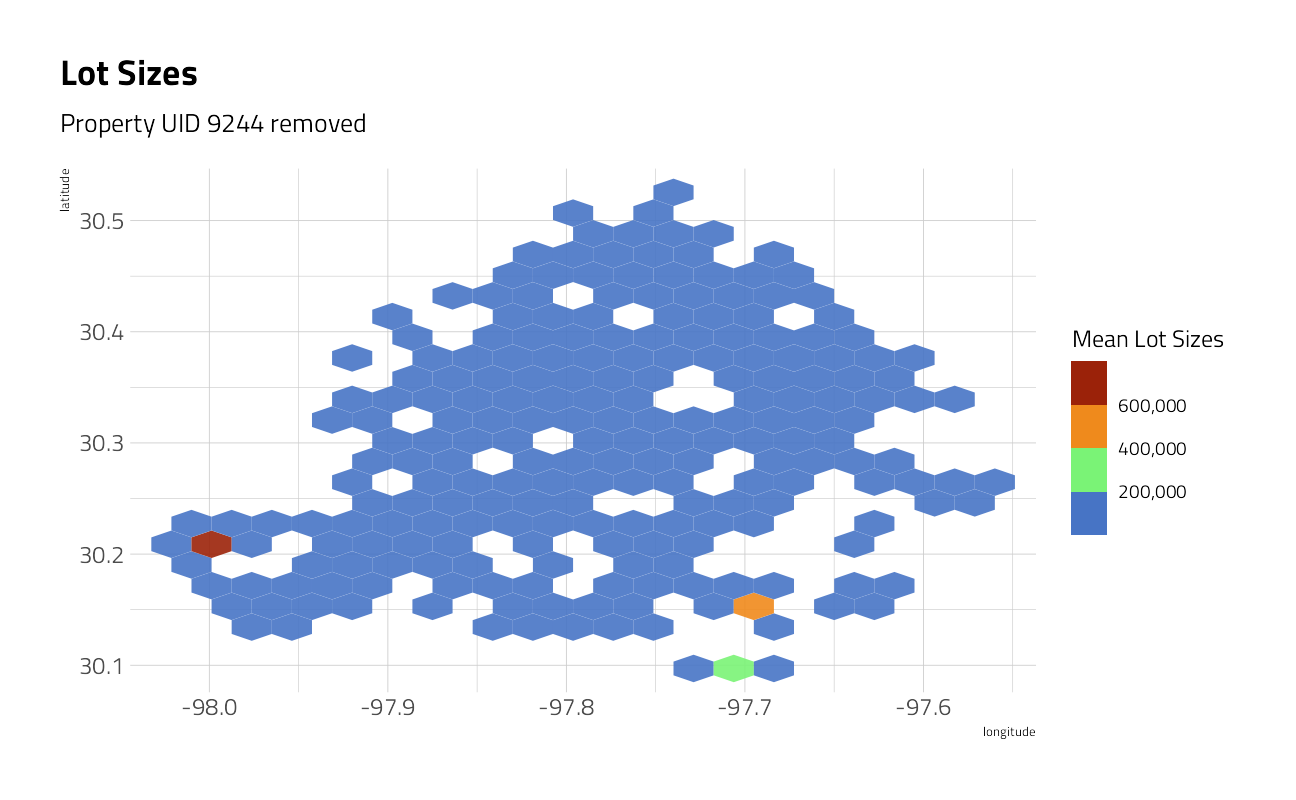

map_plot(train_df, lotSizeSqFt) +

labs(

fill = "Mean Lot Sizes",

title = "One large entity, valued in the lowest price class",

subtitle = "The One Condo parcel is 34 million Sq Ft, or 780 acres"

)

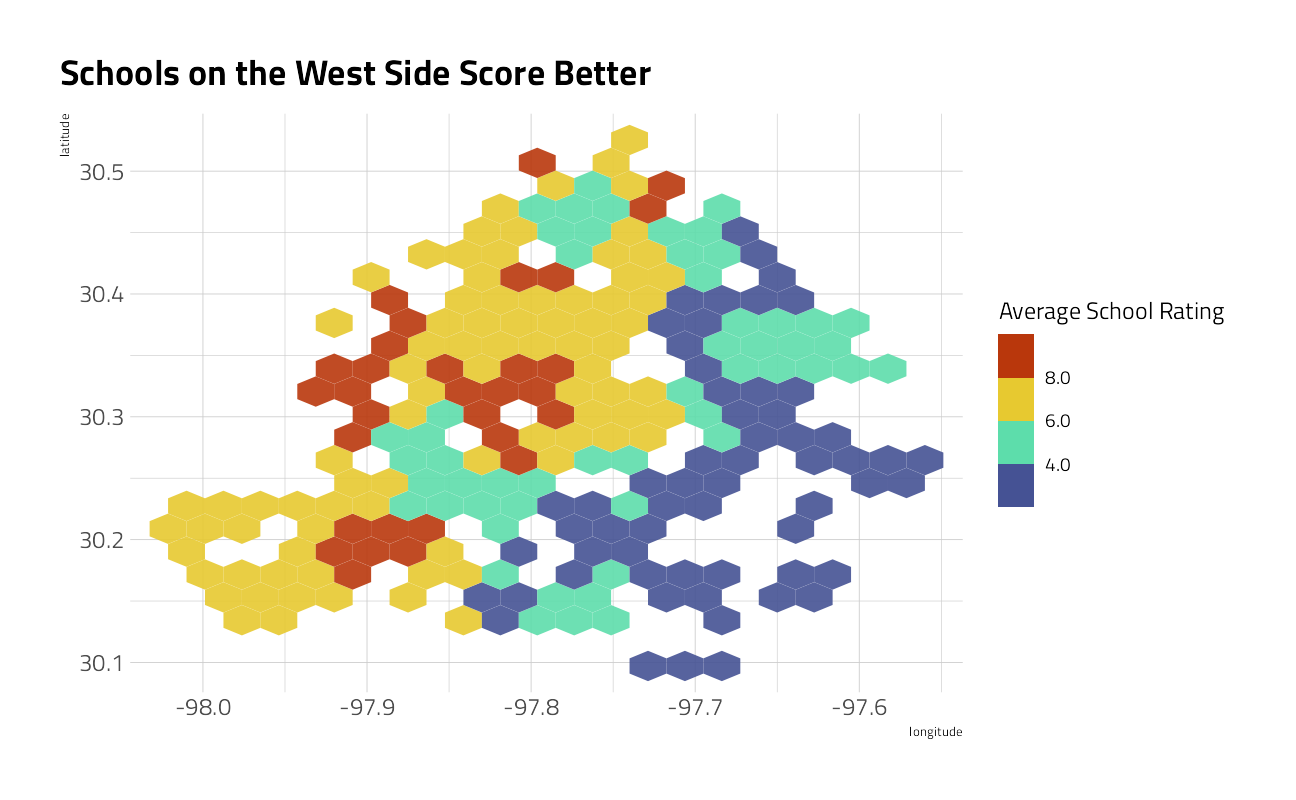

map_plot(train_df, avgSchoolRating) +

labs(

fill = "Average School Rating",

title = "Schools on the West Side Score Better"

)

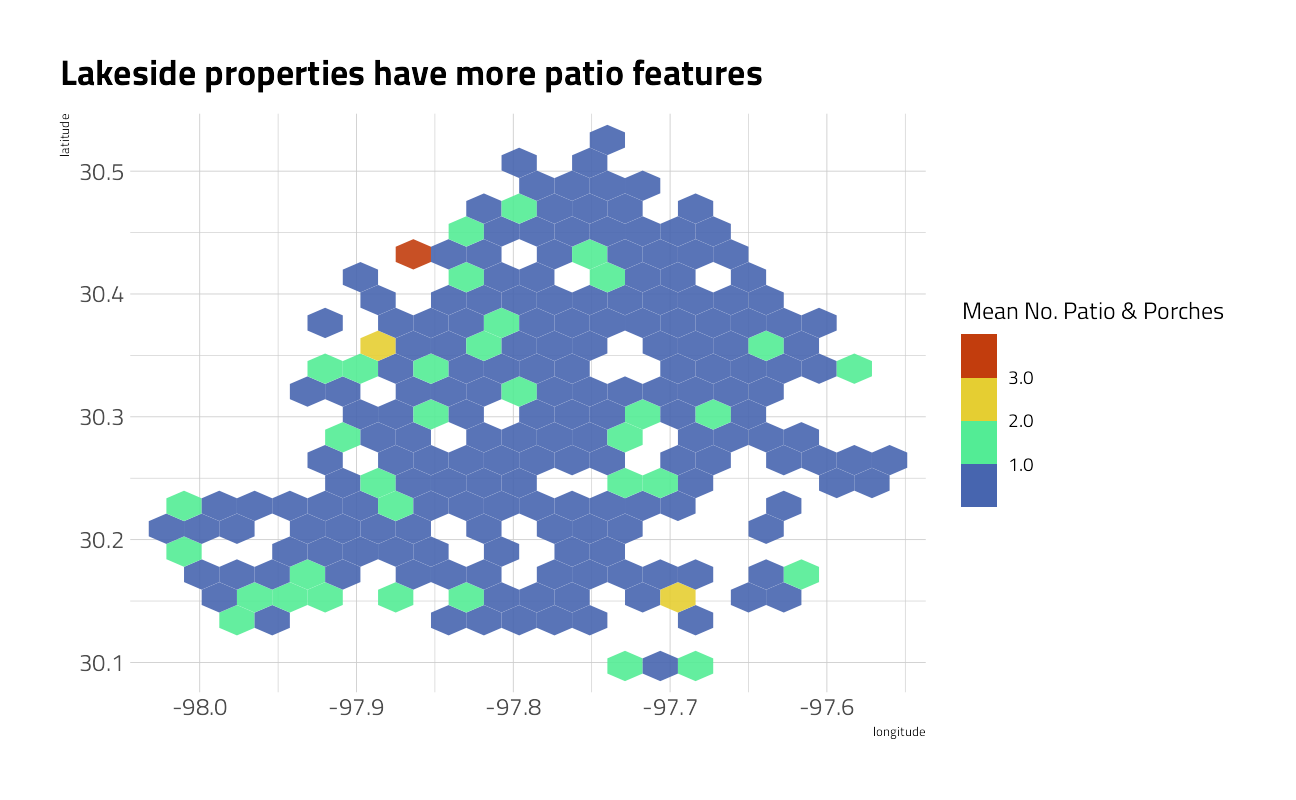

map_plot(train_df, numOfPatioAndPorchFeatures) +

labs(

fill = "Mean No. Patio & Porches",

title = "Lakeside properties have more patio features"

)

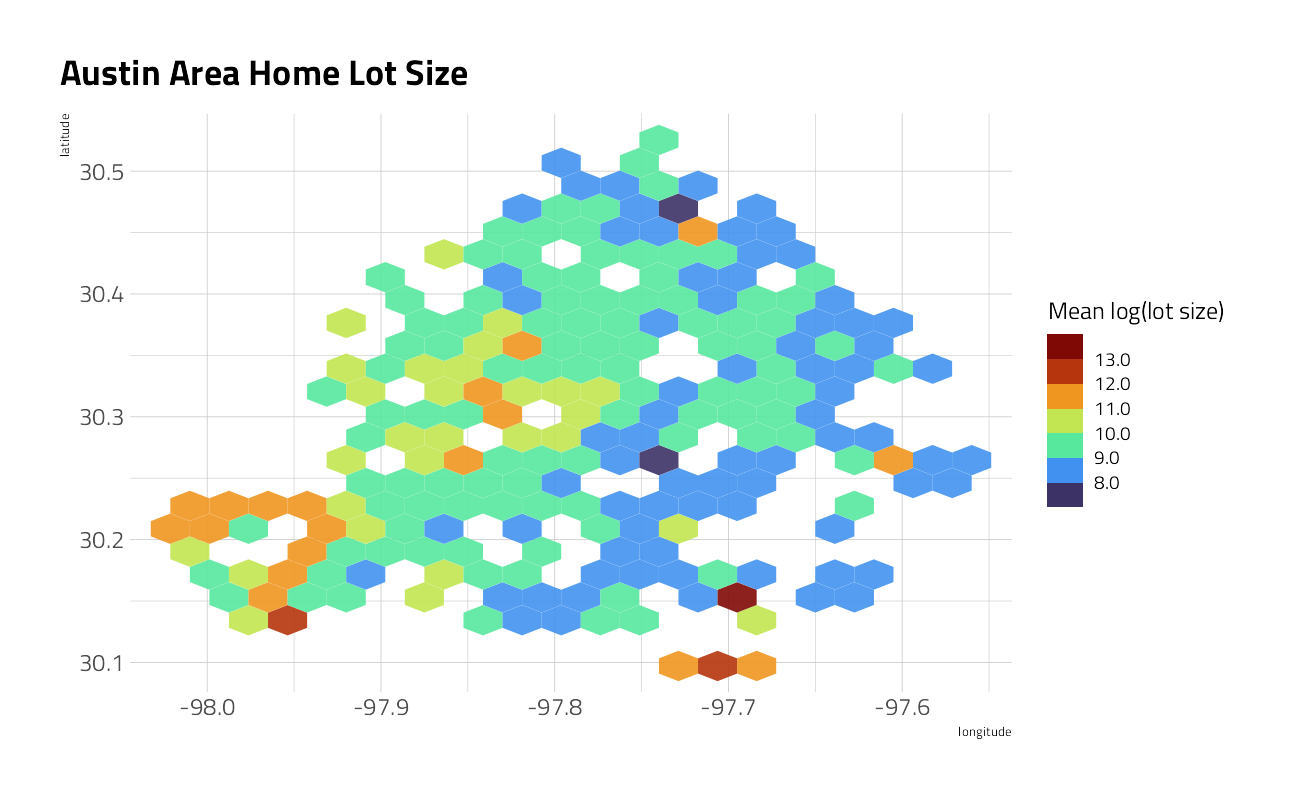

map_plot(train_df, log(lotSizeSqFt)) +

labs(

fill = "Mean log(lot size)",

title = "Austin Area Home Lot Size"

)

I am going to take the unusual step of removing the uid 9244 condo from the training dataset, as an exceptional outlier. The histograms of condo prices and of lot sizes are more consistent, and I suspect more predictive of price class, with the outlier removed.

train_df %>%

filter(uid != 9244L) %>%

map_plot(lotSizeSqFt) +

labs(

fill = "Mean Lot Sizes",

title = "Lot Sizes",

subtitle = "Property UID 9244 removed"

)

Text analysis

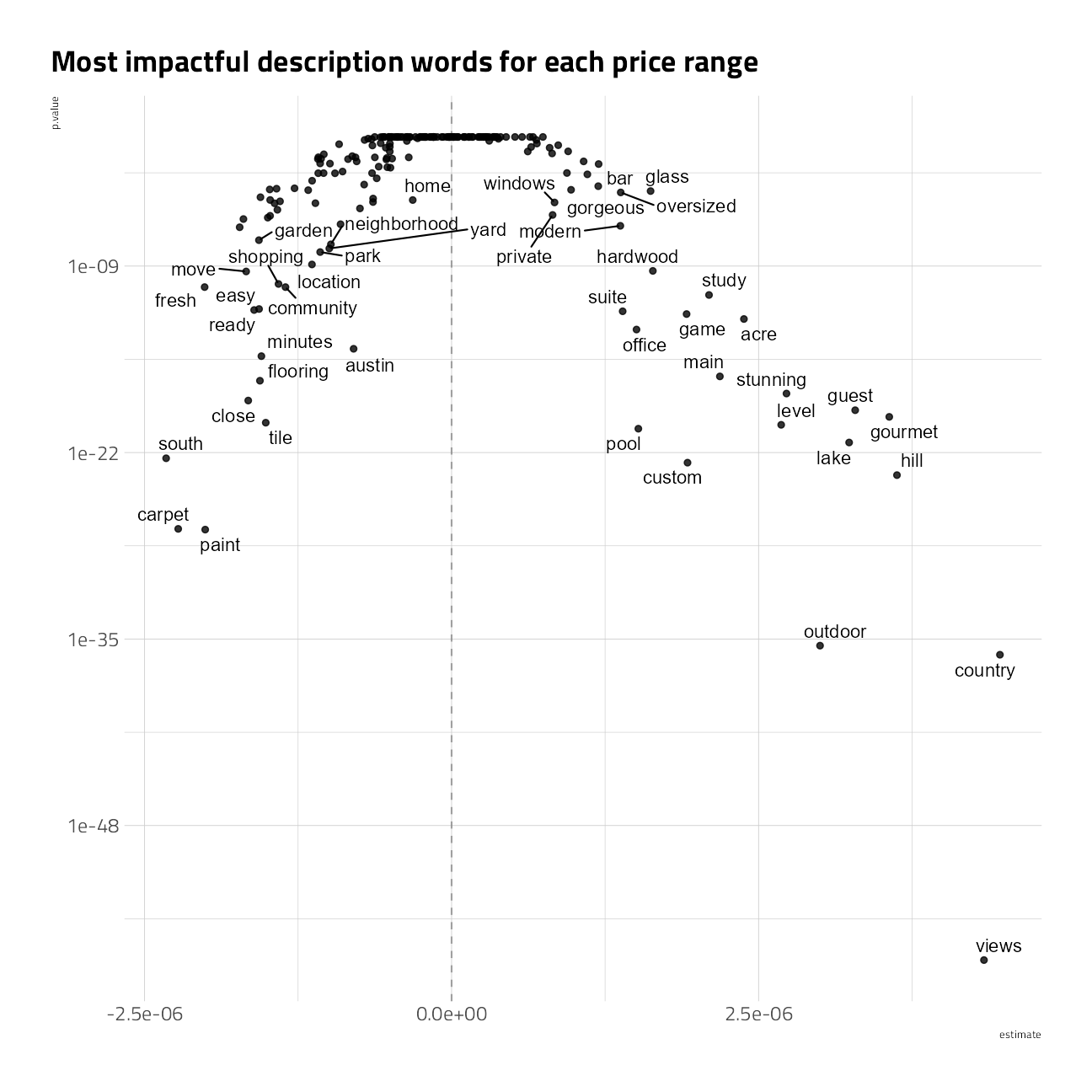

Julia Silge and team deserve a huge amount of credit for both the package development and the many demonstrations of text analysis on their blogs and YouTube.

description_tidy <- train_df %>%

mutate(priceRange = parse_number(as.character(priceRange)) + 100000) %>%

unnest_tokens(word, description) %>%

filter(!str_detect(word, "[:digit:]")) %>%

anti_join(stop_words)

top_description <- description_tidy %>%

count(word, sort = TRUE) %>%

slice_max(n, n = 200) %>%

pull(word)

word_freqs <- description_tidy %>%

count(word, priceRange) %>%

complete(word, priceRange, fill = list(n = 0)) %>%

group_by(priceRange) %>%

mutate(

price_total = sum(n),

proportion = n / price_total

) %>%

ungroup() %>%

filter(word %in% top_description)

word_models <- word_freqs %>%

nest(data = -word) %>%

mutate(

model = map(data, ~ glm(cbind(n, price_total) ~ priceRange, ., family = "binomial")),

model = map(model, tidy)

) %>%

unnest(model) %>%

filter(term == "priceRange") %>%

mutate(p.value = p.adjust(p.value)) %>%

arrange(-estimate)

word_models %>%

ggplot(aes(estimate, p.value)) +

geom_vline(xintercept = 0, lty = 2, alpha = 0.7, color = "gray50") +

geom_point(alpha = 0.8, size = 1.5) +

scale_y_log10() +

ggrepel::geom_text_repel(aes(label = word)) +

labs(title = "Most impactful description words for each price range")

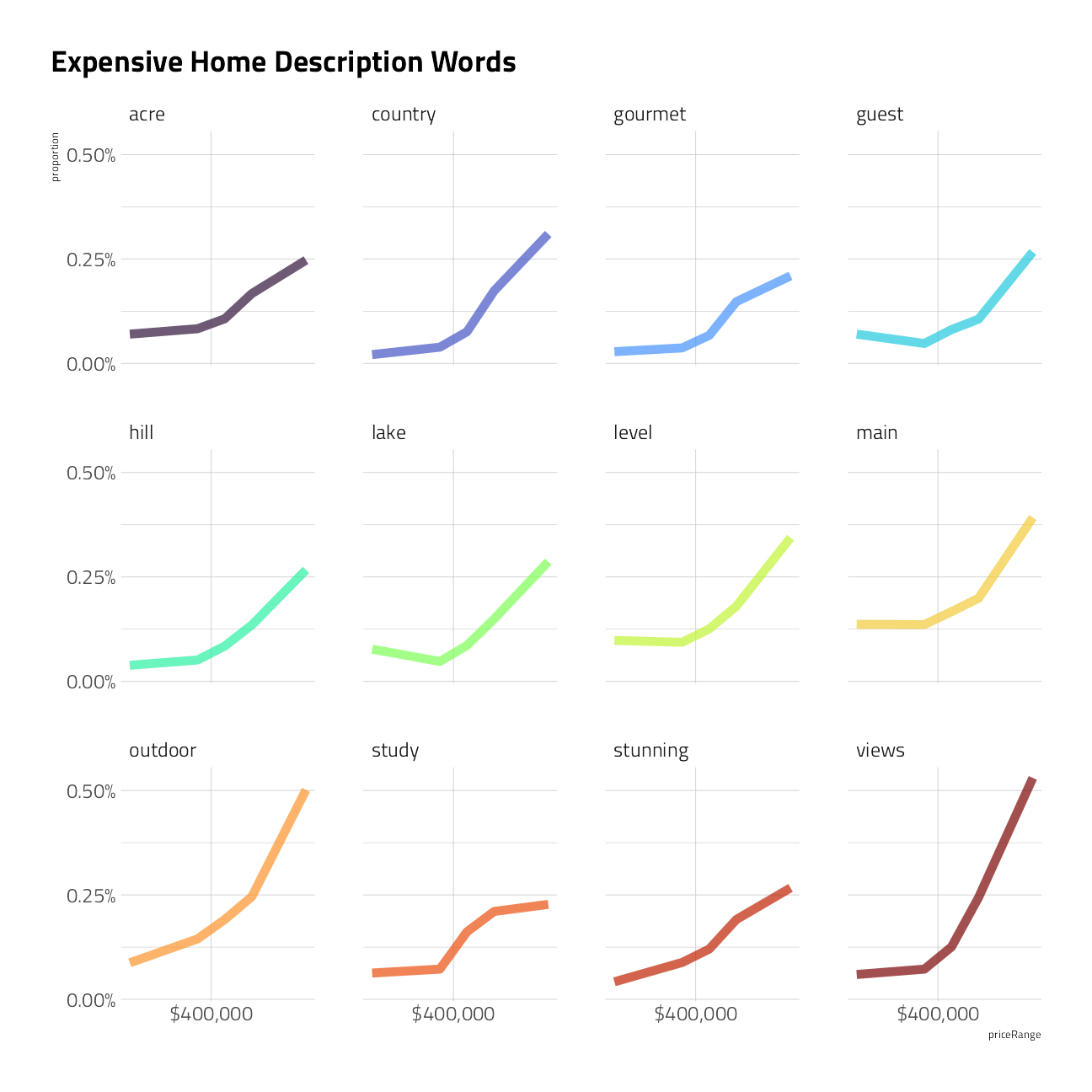

highest_words <-

word_models %>%

filter(p.value < 0.05) %>%

slice_max(estimate, n = 12) %>%

pull(word)

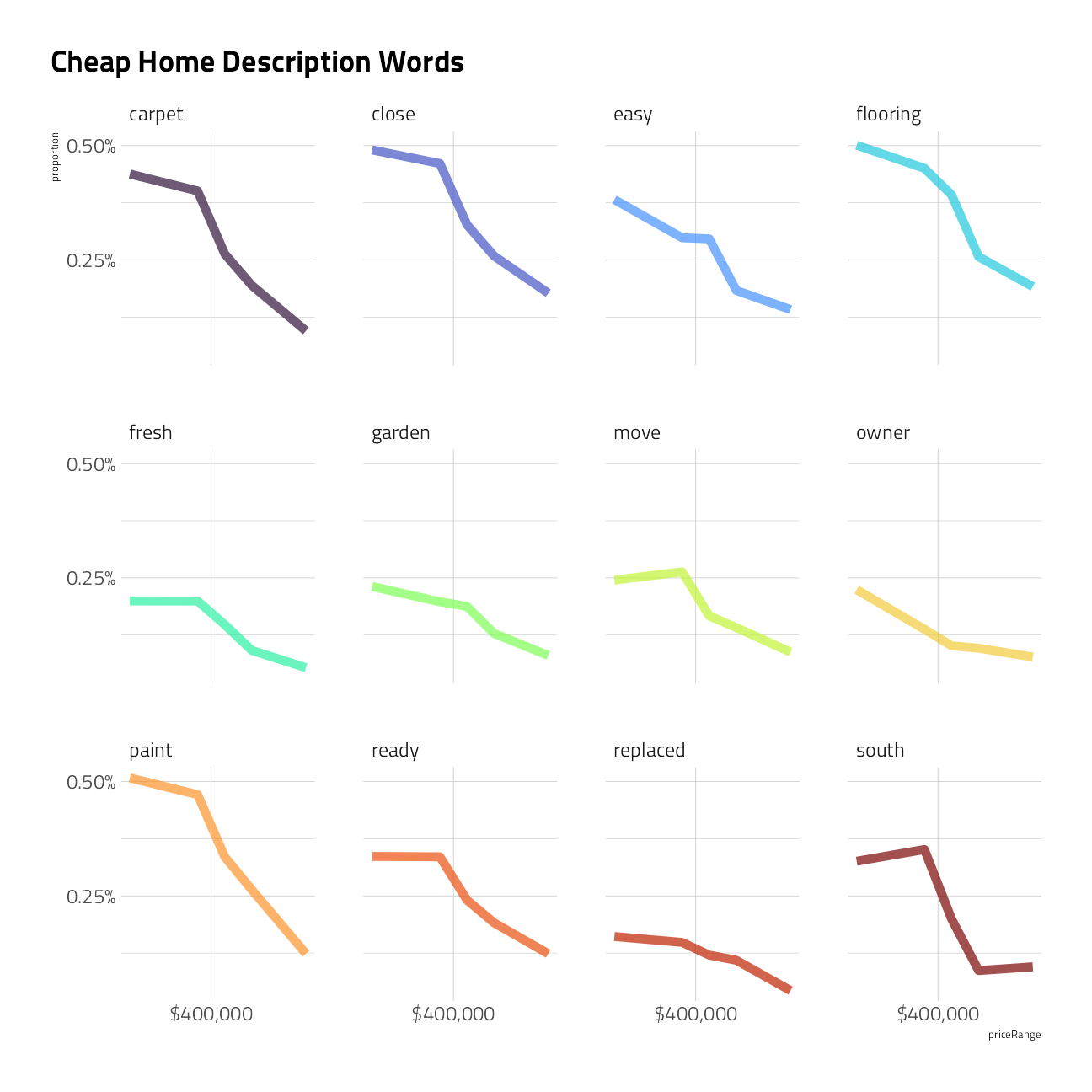

lowest_words <-

word_models %>%

filter(p.value < 0.05) %>%

slice_max(-estimate, n = 12) %>%

pull(word)

word_freqs %>%

filter(word %in% highest_words) %>%

ggplot(aes(priceRange, proportion, color = word)) +

geom_line(size = 2.5, alpha = 0.7, show.legend = FALSE) +

facet_wrap(~word) +

scale_color_viridis_d(option = "H") +

scale_x_continuous(labels = scales::dollar, n.breaks = 3) +

scale_y_continuous(labels = scales::percent, n.breaks = 3) +

labs(title = "Expensive Home Description Words")

word_freqs %>%

filter(word %in% lowest_words) %>%

ggplot(aes(priceRange, proportion, color = word)) +

geom_line(size = 2.5, alpha = 0.7, show.legend = FALSE) +

facet_wrap(~word) +

scale_color_viridis_d(option = "H") +

scale_x_continuous(labels = scales::dollar, n.breaks = 3) +

scale_y_continuous(labels = scales::percent, n.breaks = 3) +

labs(title = "Cheap Home Description Words")

Machine Learning: Random Forest

Let’s run models in two steps. The first is a simple, fast shallow random forest, to confirm that the model will run and observe feature importance scores. The second will use xgboost. Both use the basic recipe preprocessor for now.

Cross Validation

We will use 5-fold cross validation and stratify on the outcome to build models that are less likely to over-fit the training data. As a sound modeling practice, I am going to hold 10% of the training data out to better assess the model performance prior to submission.

set.seed(2021)

split <- initial_split(train_df, prop = 0.9)

training <- training(split)

testing <- testing(split)

(folds <- vfold_cv(training, v = 5, strata = priceRange))
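As a rough illustration of what stratifying on the outcome means, the sketch below assigns fold numbers separately within each class, so every fold sees roughly the same class mix. It is a simplified base R stand-in, not how rsample implements `vfold_cv()`, and the function name `stratified_folds` is an assumption.

```r
# Assign each observation to one of v folds, class by class, so that the
# class proportions are approximately equal across folds.
stratified_folds <- function(y, v = 5, seed = 2021) {
  set.seed(seed)
  fold <- integer(length(y))
  for (idx in split(seq_along(y), y)) {
    # deal fold labels 1..v round-robin within the class, then shuffle them
    fold[idx] <- sample(rep_len(seq_len(v), length(idx)))
  }
  fold
}

# hypothetical imbalanced outcome: 10 of one class, 20 of another
y <- factor(rep(c("a", "b"), times = c(10, 20)))
fold_id <- stratified_folds(y)
table(y, fold_id)
```

With this toy outcome each fold receives two rows of class a and four of class b, which is the balance an unstratified split cannot guarantee.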

The recipe

To move quickly I start with this basic recipe.

basic_rec <-

recipe(

priceRange ~ uid + latitude + longitude + garageSpaces + hasSpa + yearBuilt + numOfPatioAndPorchFeatures + lotSizeSqFt + avgSchoolRating + MedianStudentsPerTeacher + numOfBathrooms + numOfBedrooms + homeType,

data = training

) %>%

update_role(uid, new_role = "ID") %>%

step_filter(uid != 9244L) %>%

step_log(lotSizeSqFt) %>%

step_novel(all_nominal_predictors()) %>%

step_ns(latitude, longitude, deg_free = 5)

Dataset for modeling

basic_rec %>%

# finalize_recipe(list(num_comp = 2)) %>%

prep() %>%

juice()

Model Specification

This first model is a bagged tree, where the number of predictors to consider for each split of a tree (i.e., mtry) equals the number of all available predictors. The min_n of 10 means that, in each of the 50 decision trees built, a node must contain at least 10 observations before it can be split further. As a result, the decision trees in the ensemble are all relatively shallow.

(bag_spec <-

bag_tree(min_n = 10) %>%

set_engine("rpart", times = 50) %>%

set_mode("classification"))

Bagged Decision Tree Model Specification (classification)

Main Arguments:

cost_complexity = 0

min_n = 10

Engine-Specific Arguments:

times = 50

Computational engine: rpart
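The idea behind this specification can be sketched directly with rpart: fit one tree per bootstrap sample and average the predicted class probabilities. This is a simplified illustration on the built-in iris data, not the baguette implementation; the helper name `bag_predict` is hypothetical, and mapping min_n to rpart's `minsplit` with `cp = 0` is my assumption about how the settings above translate.

```r
library(rpart)

# Bootstrap aggregation: `times` trees, each fit to a resample drawn with
# replacement, with class probabilities averaged over the trees.
bag_predict <- function(formula, data, newdata,
                        times = 50, min_n = 10, seed = 2021) {
  set.seed(seed)
  probs <- lapply(seq_len(times), function(i) {
    boot <- data[sample(nrow(data), replace = TRUE), , drop = FALSE]
    fit <- rpart(formula,
      data = boot, method = "class",
      control = rpart.control(minsplit = min_n, cp = 0)
    )
    predict(fit, newdata = newdata, type = "prob")
  })
  Reduce(`+`, probs) / times
}

# one example row from each iris species
avg_probs <- bag_predict(Species ~ ., iris, iris[c(1, 51, 101), ])
avg_probs
```

Averaging probabilities rather than taking a majority vote matters for this competition, because log loss is computed on the probabilities themselves.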

Parallel backend

To speed up computation we will use a parallel backend.

all_cores <- parallelly::availableCores(omit = 1)

all_cores

system

11

future::plan("multisession", workers = all_cores) # on Windows

Fit and Variable Importance

Let’s make a cursory check of the recipe and variable importance, which comes out of rpart for free. This workflow also handles factors without dummies.

bag_wf <-

workflow() %>%

add_recipe(basic_rec) %>%

add_model(bag_spec)

system.time(

bag_fit_rs <- fit_resamples(

bag_wf,

resamples = folds,

metrics = mset,

control = control_resamples(save_pred = TRUE)

)

) user system elapsed

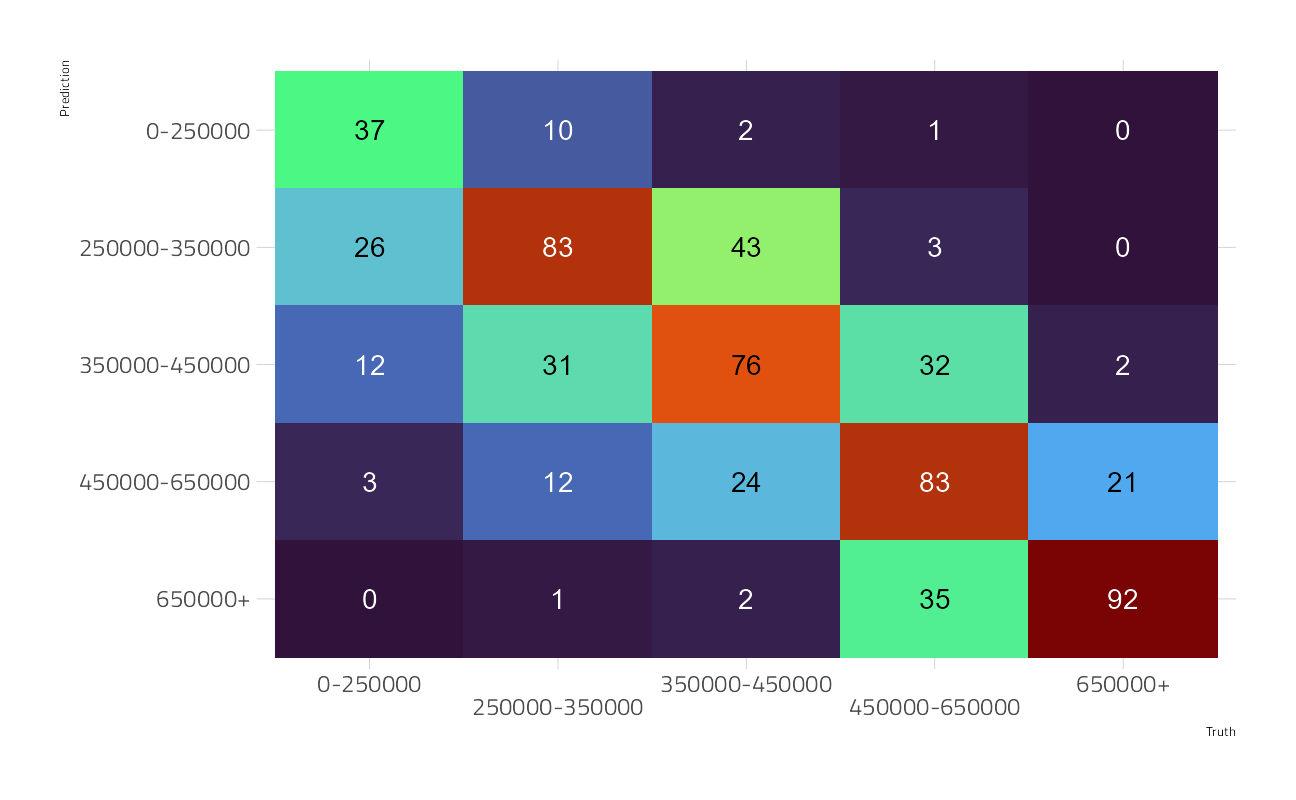

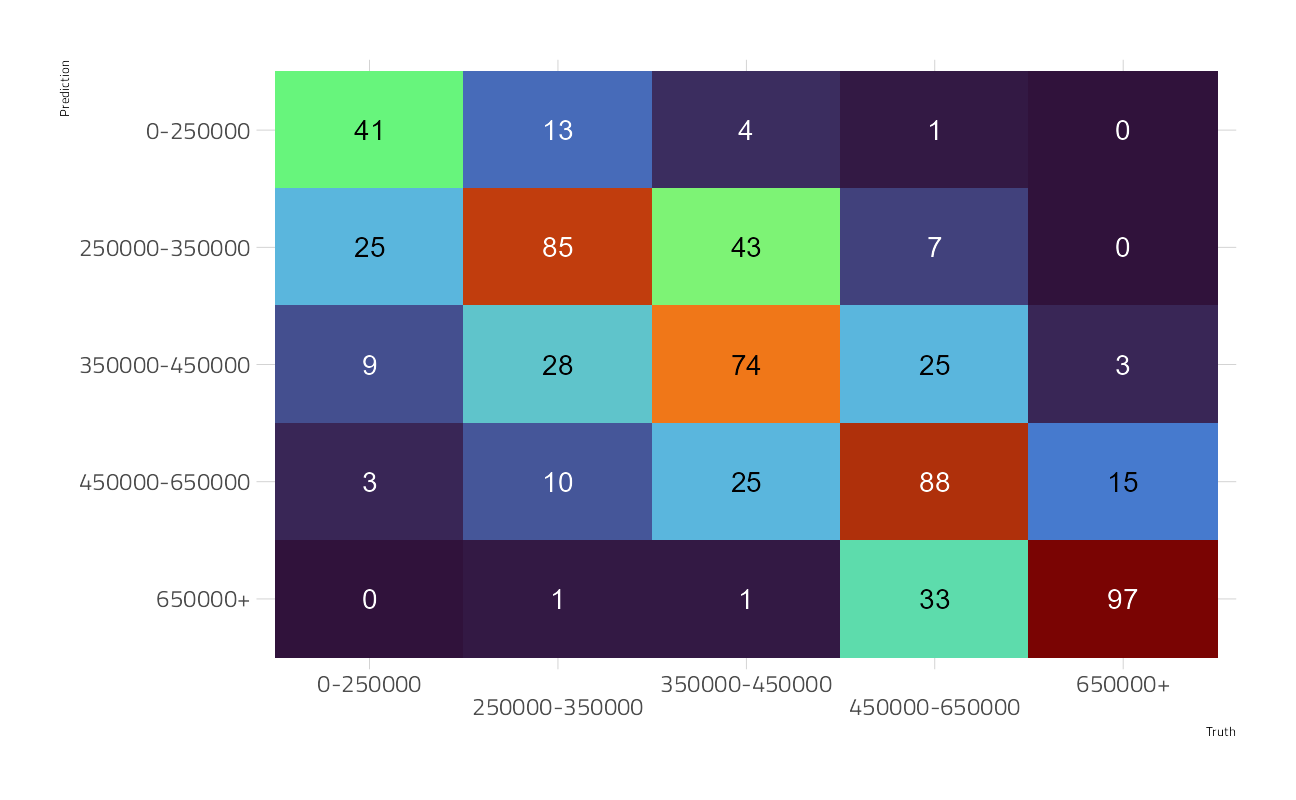

14.69 3.95 52.06 How did these results turn out? The metrics are across the cross validation holdouts, and the confidence matrix here is on the training data.

collect_metrics(bag_fit_rs)

That’s not great. What happened?

bag_fit_best <-

workflow() %>%

add_recipe(basic_rec) %>%

add_model(bag_spec) %>%

finalize_workflow(select_best(bag_fit_rs, metric = "mn_log_loss"))

bag_last_fit <- last_fit(bag_fit_best, split)

collect_metrics(bag_last_fit)

collect_predictions(bag_last_fit) %>%

conf_mat(priceRange, .pred_class) %>%

autoplot(type = "heatmap")

Although 59% accuracy on the cross validation holdouts is not great, the performance seems to make sense, where the errors are mostly one class off of the truth.
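Because the price classes are ordered, the claim that errors are mostly one class off can be checked numerically. The sketch below is a hypothetical helper (`within_one_class` is not part of yardstick) that compares the integer positions of the true and predicted levels; the five toy predictions are made up for illustration.

```r
# Share of predictions that are exactly right, and share that land within
# one ordered class of the truth. Both factors must share the same levels.
within_one_class <- function(truth, estimate) {
  stopifnot(identical(levels(truth), levels(estimate)))
  off_by <- abs(as.integer(truth) - as.integer(estimate))
  c(exact = mean(off_by == 0), within_one = mean(off_by <= 1))
}

# hypothetical predictions over the five price classes
lv <- c("0-250000", "250000-350000", "350000-450000", "450000-650000", "650000+")
truth <- factor(lv[c(1, 2, 3, 4, 5)], levels = lv)
estimate <- factor(lv[c(1, 3, 3, 2, 5)], levels = lv)
res <- within_one_class(truth, estimate)
res
```

Applied to `collect_predictions(bag_last_fit)`, the same function could take the `priceRange` and `.pred_class` columns as its two arguments.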

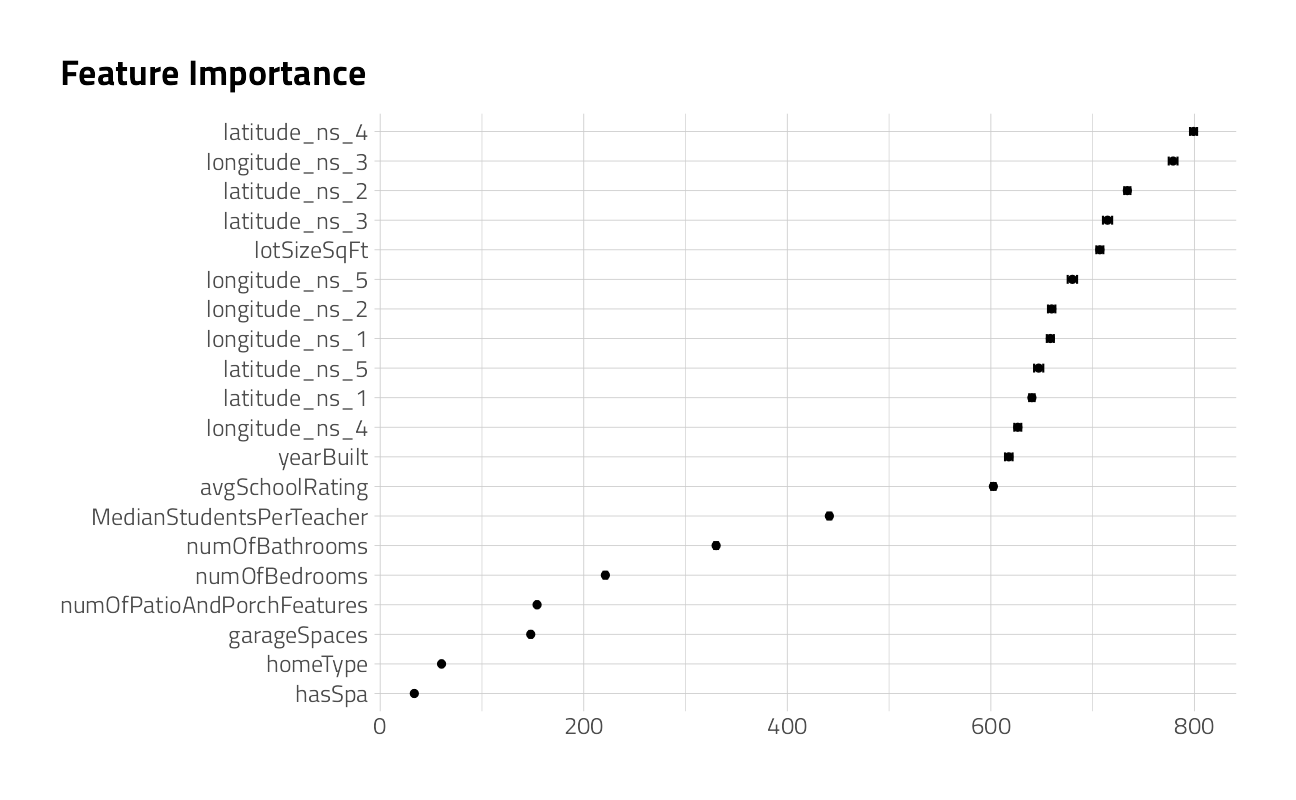

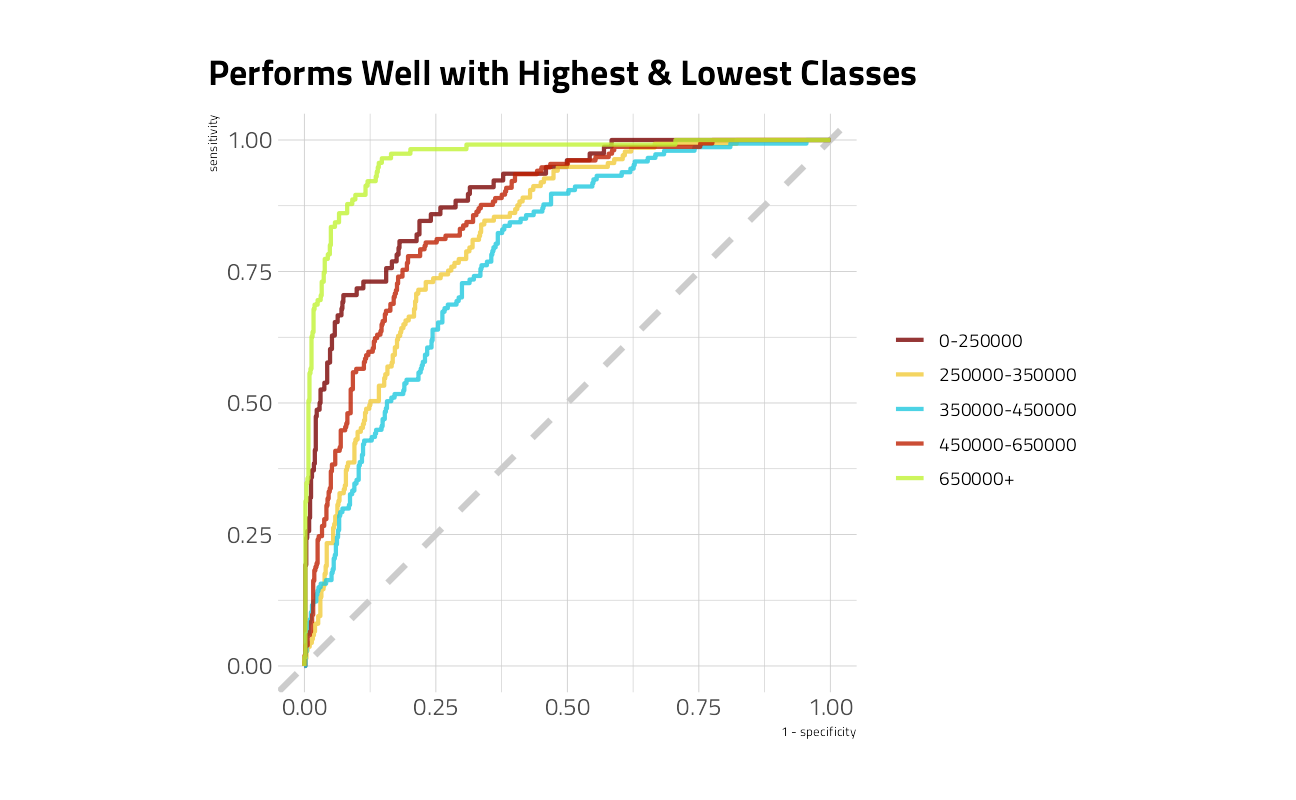

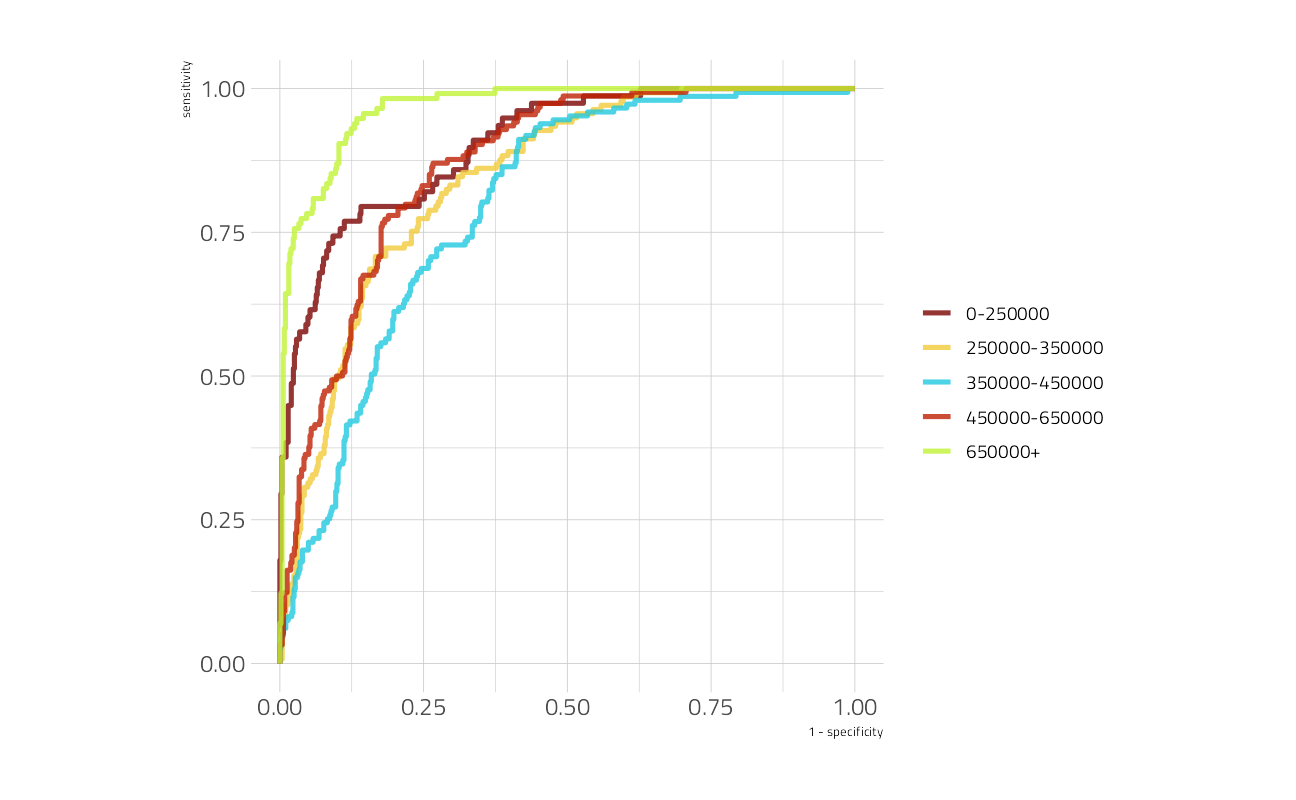

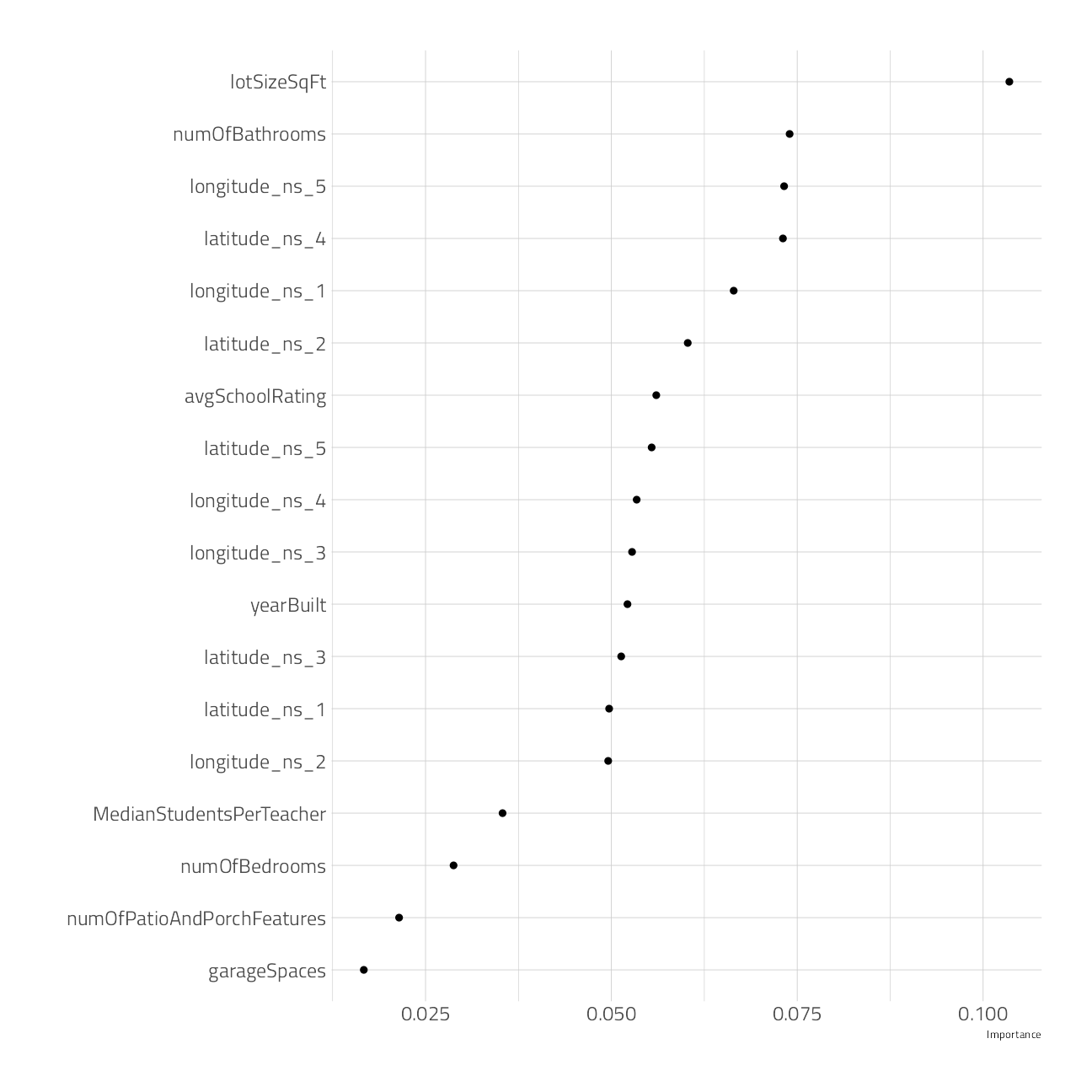

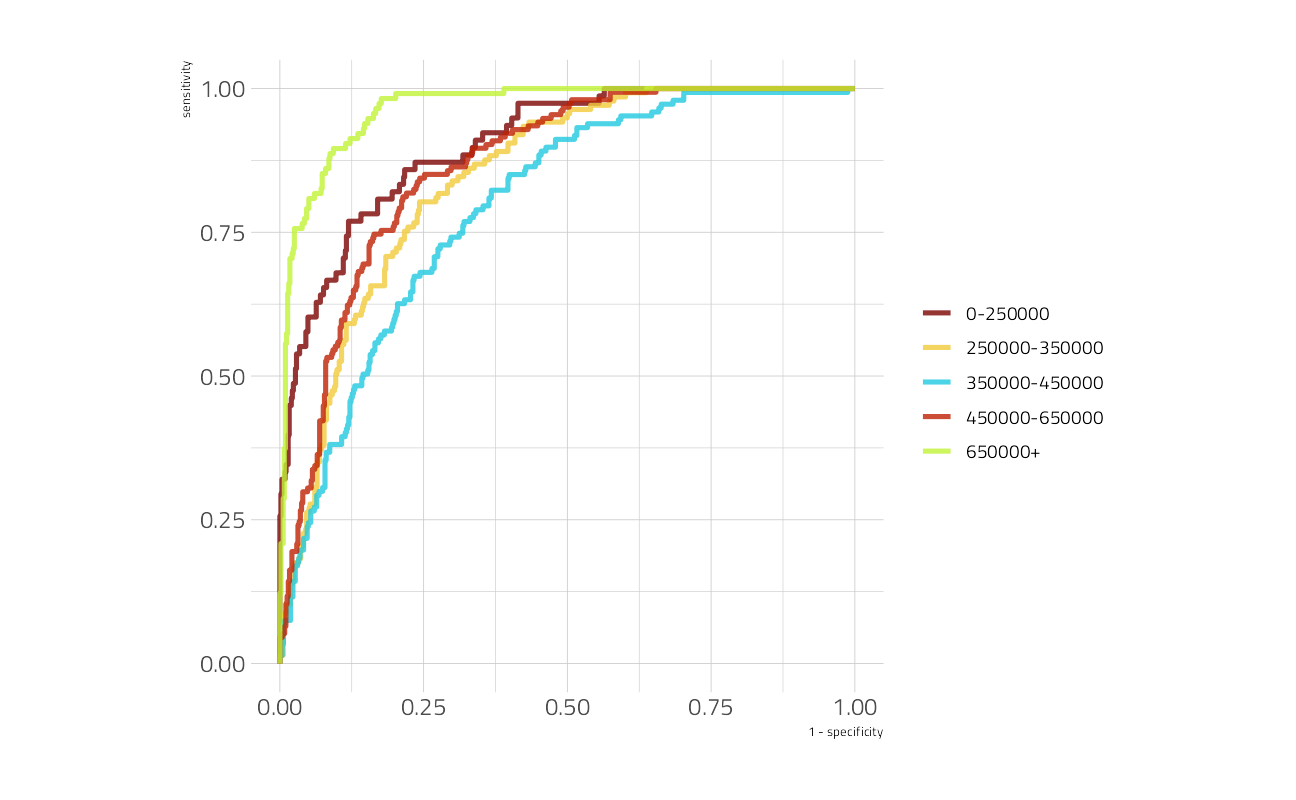

Let’s take a look at variable importance to explore additional feature engineering possibilities. Also, which price ranges does this model predict the best?

extract_fit_parsnip(bag_last_fit)$fit$imp %>%

mutate(term = fct_reorder(term, value)) %>%

ggplot(aes(value, term)) +

geom_point() +

geom_errorbarh(aes(

xmin = value - `std.error` / 2,

xmax = value + `std.error` / 2

),

height = .3

) +

labs(

title = "Feature Importance",

x = NULL, y = NULL

)

collect_predictions(bag_last_fit) %>%

roc_curve(priceRange, `.pred_0-250000`:`.pred_650000+`) %>%

ggplot(aes(1 - specificity, sensitivity, color = .level)) +

geom_abline(lty = 2, color = "gray80", size = 1.5) +

geom_path(alpha = 0.8, size = 1) +

coord_equal() +

labs(

color = NULL,

title = "Performs Well with Highest & Lowest Classes"

)

best_fit <- fit(bag_fit_best, data = train_df)

holdout_result <- augment(best_fit, holdout_df)

submission <- holdout_result %>%

select(uid,

`0-250000` = `.pred_0-250000`,

`250000-350000` = `.pred_250000-350000`,

`350000-450000` = `.pred_350000-450000`,

`450000-650000` = `.pred_450000-650000`,

`650000+` = `.pred_650000+`

)

write_csv(submission, file = path_export)

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "Simple random forest model 1"'))

Machine Learning: XGBoost Model 1

Model Specification

Let’s start with a boosted early stopping XGBoost model that runs fast and gives an early indication of which hyperparameters make the most difference in model performance.

(

xgboost_spec <- boost_tree(

trees = 500,

tree_depth = tune(),

min_n = tune(),

loss_reduction = tune(), ## first three: model complexity

sample_size = tune(),

mtry = tune(), ## randomness

learn_rate = tune(), ## step size

stop_iter = tune()

) %>%

set_engine("xgboost", validation = 0.2) %>%

set_mode("classification")

)

Boosted Tree Model Specification (classification)

Main Arguments:

mtry = tune()

trees = 500

min_n = tune()

tree_depth = tune()

learn_rate = tune()

loss_reduction = tune()

sample_size = tune()

stop_iter = tune()

Engine-Specific Arguments:

validation = 0.2

Computational engine: xgboost

Tuning and Performance

We will start with the basic recipe from above. The tuning grid will evaluate hyperparameter combinations across our resample folds and report on the best average.
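The tuning code in this section builds its grid as a Latin hypercube, which spreads a small number of candidates evenly over each hyperparameter's range. The sketch below shows the basic construction in base R; it is an illustration, not the dials implementation. The function name `latin_hypercube` is an assumption, and it returns continuous values, so integer parameters such as tree depth would still need rounding.

```r
# Latin hypercube sample: split each parameter's range into n equal strata,
# draw one point inside every stratum, and shuffle the strata independently
# for each parameter.
latin_hypercube <- function(n, ranges, seed = 2021) {
  set.seed(seed)
  cols <- lapply(ranges, function(r) {
    u <- (sample(n) - runif(n)) / n   # one uniform draw per stratum, in (0, 1)
    r[1] + u * (r[2] - r[1])          # rescale to the parameter's range
  })
  as.data.frame(cols)
}

# ranges borrowed from the tuning grid in this section
lhs_grid <- latin_hypercube(
  n = 30,
  ranges = list(
    tree_depth = c(5, 10),
    min_n = c(10, 40),
    sample_size = c(0.5, 1)
  )
)
head(lhs_grid)
```

Compared with a regular grid, 30 candidates here still cover 30 distinct values of every parameter, which is why the approach suits a two-hour time limit.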

xgboost_rec <-

recipe(

priceRange ~ uid + latitude + longitude + garageSpaces + hasSpa + yearBuilt + numOfPatioAndPorchFeatures + lotSizeSqFt + avgSchoolRating + MedianStudentsPerTeacher + numOfBathrooms + numOfBedrooms + description + homeType,

data = training

) %>%

step_filter(uid != 9244L) %>%

update_role(uid, new_role = "ID") %>%

step_log(lotSizeSqFt) %>%

step_ns(latitude, longitude, deg_free = 5) %>%

step_regex(description,

pattern = str_flatten(highest_words, collapse = "|"),

result = "high_price_words"

) %>%

step_regex(description,

pattern = str_flatten(lowest_words, collapse = "|"),

result = "low_price_words"

) %>%

step_rm(description) %>%

step_novel(all_nominal_predictors()) %>%

step_unknown(all_nominal_predictors()) %>%

step_other(homeType, threshold = 0.02) %>%

step_dummy(all_nominal_predictors(), one_hot = TRUE) %>%

step_nzv(all_predictors())

stopping_grid <-

grid_latin_hypercube(

finalize(mtry(), xgboost_rec %>% prep() %>% juice()), ## for the ~68 columns in the juiced training set

learn_rate(range = c(-2, -1)), ## keep pretty big

stop_iter(range = c(10L, 50L)), ## bigger than default

tree_depth(c(5L, 10L)),

min_n(c(10L, 40L)),

loss_reduction(), ## first three: model complexity

sample_size = sample_prop(c(0.5, 1.0)),

size = 30

)

system.time(

cv_res_xgboost <-

workflow() %>%

add_recipe(xgboost_rec) %>%

add_model(xgboost_spec) %>%

tune_grid(

resamples = folds,

grid = stopping_grid,

metrics = mset,

control = control_grid(save_pred = TRUE)

)

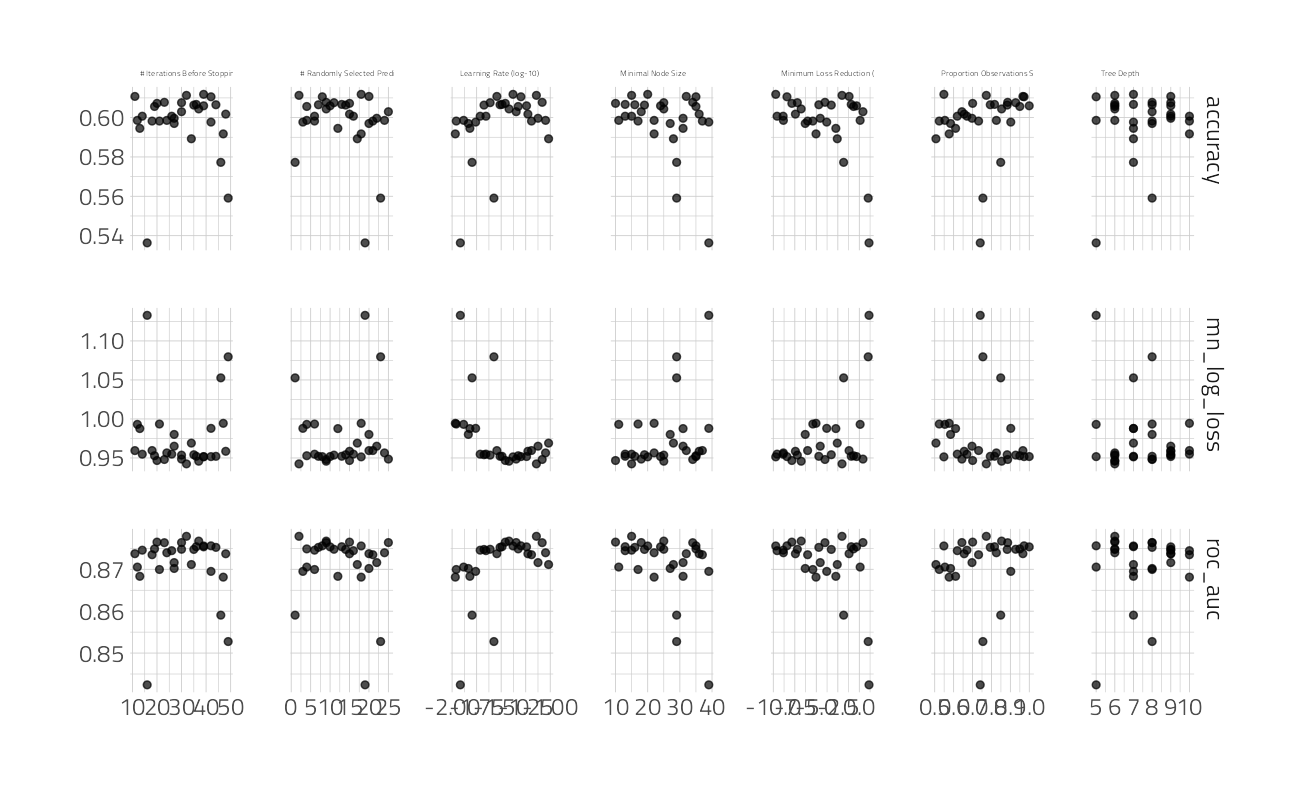

)autoplot(cv_res_xgboost) +

theme(strip.text.x = element_text(size = 12 / .pt))

show_best(cv_res_xgboost, metric = "mn_log_loss")

On the surface, this first XGBoost pass does not quite appear to be as good as the random forest was. Let’s use last_fit() to fit one final time to the training data and evaluate on the testing data, with the numerically optimal result.

best_xg1_wf <- workflow() %>%

add_recipe(xgboost_rec) %>%

add_model(xgboost_spec) %>%

finalize_workflow(select_best(cv_res_xgboost, metric = "mn_log_loss"))

xg1_last_fit <- last_fit(best_xg1_wf, split)

collect_predictions(xg1_last_fit) %>%

conf_mat(priceRange, .pred_class) %>%

autoplot(type = "heatmap")

collect_predictions(xg1_last_fit) %>%

roc_curve(priceRange, `.pred_0-250000`:`.pred_650000+`) %>%

ggplot(aes(1 - specificity, sensitivity, color = .level)) +

geom_path(alpha = 0.8, size = 1.2) +

coord_equal() +

labs(color = NULL)

collect_predictions(xg1_last_fit) %>%

mn_log_loss(priceRange, `.pred_0-250000`:`.pred_650000+`)

And the variable importance

extract_workflow(xg1_last_fit) %>%

extract_fit_parsnip() %>%

vip(geom = "point", num_features = 18)

It’s interesting to me how the lot size is the most important feature in this model, and that the description words are so low in the list.

I will try to submit this to Kaggle, but it’s not likely to take it because the performance is worse.

best_xg1_fit <- fit(best_xg1_wf, data = train_df)

holdout_result <- augment(best_xg1_fit, holdout_df)

submission <- holdout_result %>%

select(uid,

`0-250000` = `.pred_0-250000`,

`250000-350000` = `.pred_250000-350000`,

`350000-450000` = `.pred_350000-450000`,

`450000-650000` = `.pred_450000-650000`,

`650000+` = `.pred_650000+`

)

I am going to attempt to post this second submission to Kaggle, and work more with xgboost and a more advanced recipe to do better.

write_csv(submission, file = path_export)

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "First XGBoost Model"'))

Machine Learning: XGBoost Model 2

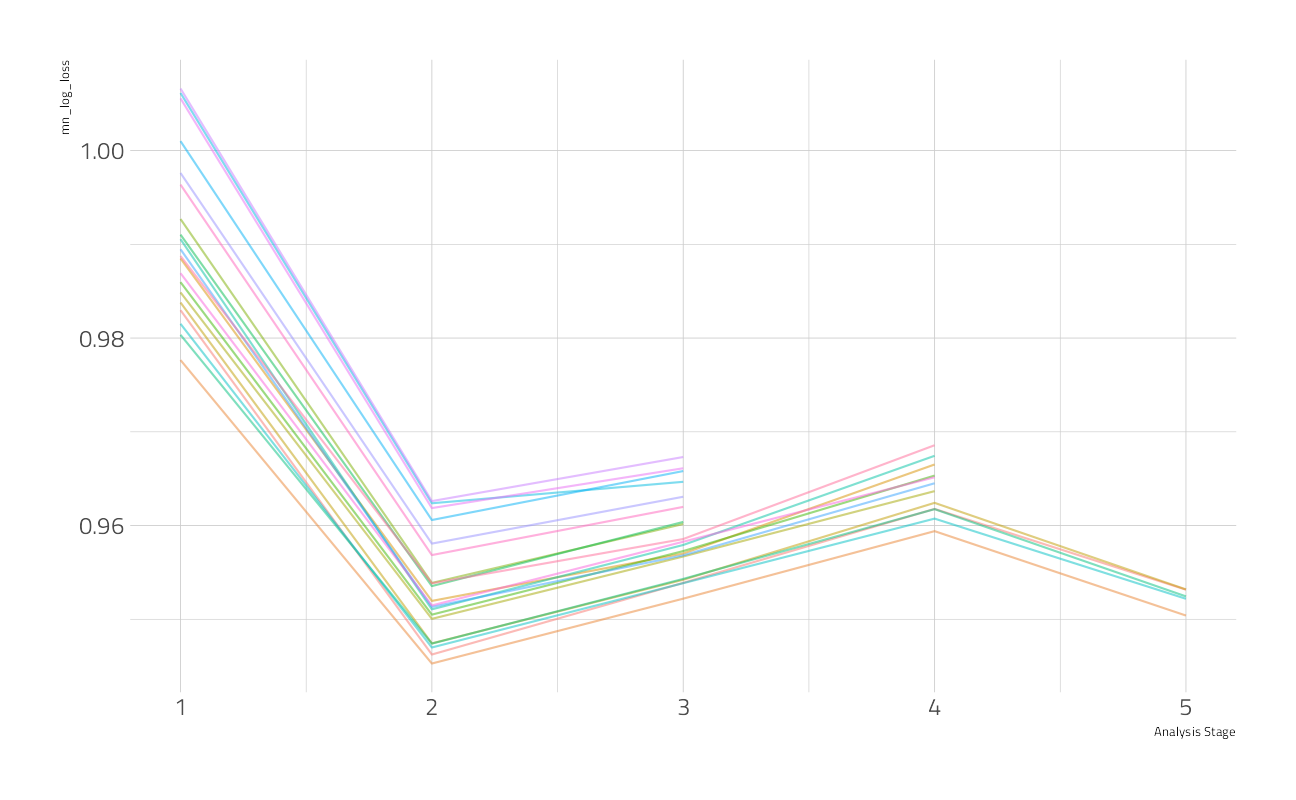

Let’s use what we learned above to choose better hyperparameters. This time, let’s try the tune_race_anova technique for skipping the parts of the grid search that do not perform well.

Model Specification

(xgboost_spec <- boost_tree(

trees = 500,

min_n = tune(),

loss_reduction = 0.337,

mtry = tune(),

sample_size = .970,

tree_depth = 6,

stop_iter = 29,

learn_rate = .05

) %>%

set_engine("xgboost") %>%

set_mode("classification"))

Boosted Tree Model Specification (classification)

Main Arguments:

mtry = tune()

trees = 500

min_n = tune()

tree_depth = 6

learn_rate = 0.05

loss_reduction = 0.337

sample_size = 0.97

stop_iter = 29

Computational engine: xgboost

Tuning and Performance

race_anova_grid <-

grid_latin_hypercube(

mtry(range = c(40, 66)),

min_n(range = c(8, 20)),

size = 20

)

system.time(

cv_res_xgboost <-

workflow() %>%

add_recipe(xgboost_rec) %>%

add_model(xgboost_spec) %>%

tune_race_anova(

resamples = folds,

grid = race_anova_grid,

control = control_race(

verbose_elim = TRUE,

parallel_over = "resamples",

save_pred = TRUE

),

metrics = mset

)

)

We can visualize how the possible parameter combinations we tried did during the “race.” Notice how we saved a TON of time by not evaluating the parameter combinations that were clearly doing poorly on all the resamples; we only kept going with the good parameter combinations.
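The elimination logic of a race can be sketched in a few lines. Note that this is a deliberately simplified stand-in: tune_race_anova fits an ANOVA model across candidates, whereas the sketch below substitutes one-sided paired t-tests against the current leader. The function name `race_sketch`, the burn-in of three resamples, and the toy loss values are all assumptions for illustration.

```r
# loss: matrix with one row per resample and one column per candidate
# (lower is better). After a burn-in, drop any candidate whose loss is
# significantly higher than the current leader's on the resamples seen so far.
race_sketch <- function(loss, burn_in = 3, alpha = 0.05) {
  alive <- seq_len(ncol(loss))
  for (r in burn_in:nrow(loss)) {
    seen <- loss[seq_len(r), alive, drop = FALSE]
    best <- which.min(colMeans(seen))
    keep <- vapply(seq_along(alive), function(j) {
      if (j == best) return(TRUE)
      d <- seen[, j] - seen[, best]
      if (sd(d) == 0) return(mean(d) <= 0)   # t.test() fails on constant data
      t.test(d, alternative = "greater")$p.value > alpha
    }, logical(1))
    alive <- alive[keep]
  }
  alive
}

# hypothetical log loss for three candidates over five resamples
loss <- cbind(
  a = c(0.90, 0.92, 0.91, 0.93, 0.89),
  b = c(0.91, 0.91, 0.92, 0.92, 0.90),
  c = c(1.50, 1.52, 1.49, 1.53, 1.51)
)
survivors <- race_sketch(loss)
survivors
```

In this toy race the third candidate is dropped as soon as the burn-in ends, so the last two resamples are only ever evaluated for the two candidates that are still competitive. That skipped work is where the time savings come from.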

plot_race(cv_res_xgboost)

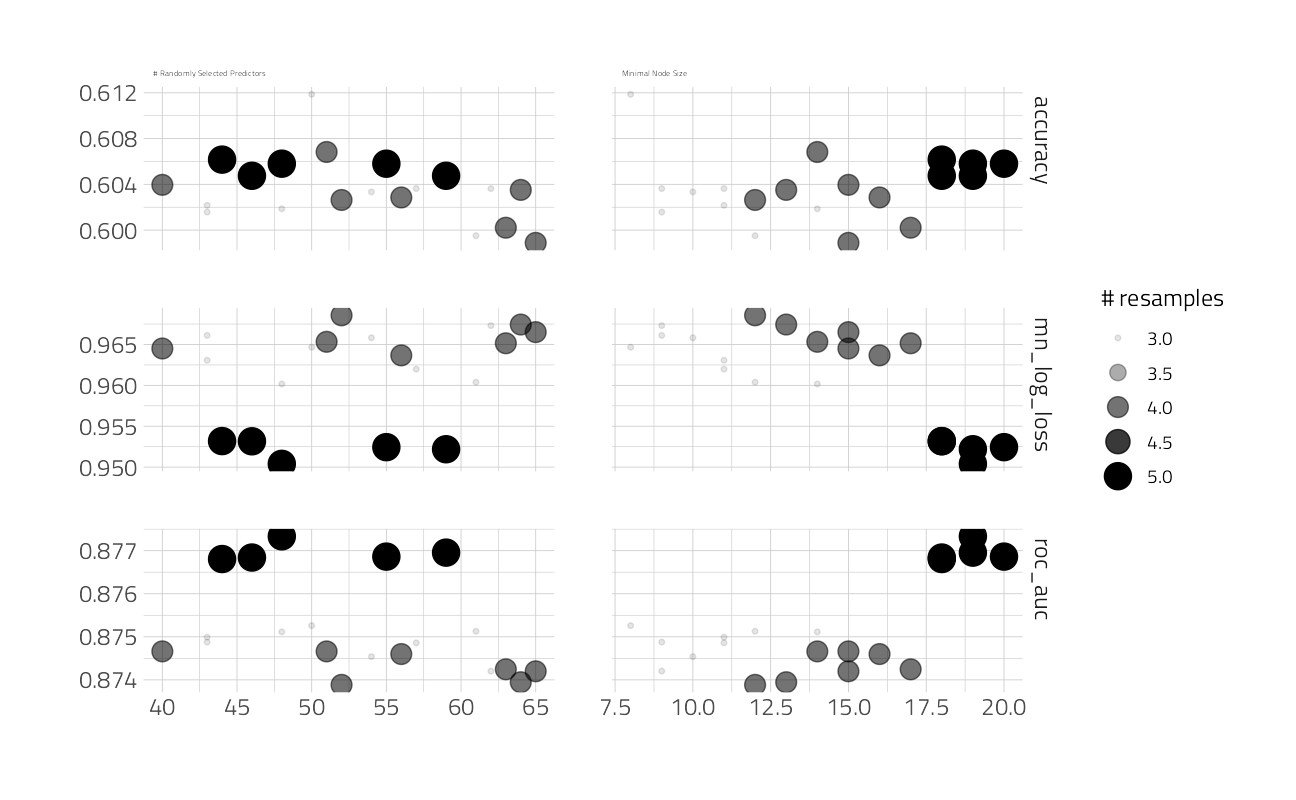

Let’s look at the top results

autoplot(cv_res_xgboost) +

theme(strip.text.x = element_text(size = 12 / .pt))

show_best(cv_res_xgboost,

metric = "mn_log_loss"

)

I’m not making much progress here in improving logloss.

best_xg2_wf <- workflow() %>%

add_recipe(xgboost_rec) %>%

add_model(xgboost_spec) %>%

finalize_workflow(select_best(cv_res_xgboost, metric = "mn_log_loss"))

xg2_last_fit <- last_fit(best_xg2_wf, split)

collect_predictions(xg2_last_fit) %>%

conf_mat(priceRange, .pred_class) %>%

autoplot(type = "heatmap")

collect_predictions(xg2_last_fit) %>%

roc_curve(priceRange, `.pred_0-250000`:`.pred_650000+`) %>%

ggplot(aes(1 - specificity, sensitivity, color = .level)) +

geom_path(alpha = 0.8, size = 1.2) +

coord_equal() +

labs(color = NULL)

collect_predictions(xg2_last_fit) %>%

mn_log_loss(priceRange, `.pred_0-250000`:`.pred_650000+`)

And the variable importance

extract_workflow(xg2_last_fit) %>%

extract_fit_parsnip() %>%

vip(geom = "point", num_features = 15)

best_xg2_fit <- fit(best_xg2_wf, data = train_df)

[15:50:02] WARNING: amalgamation/../src/learner.cc:1095: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'multi:softprob' was changed from 'merror' to 'mlogloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

Strange that this model changes up variable importance yet again. Let’s submit to Kaggle.

holdout_result <- augment(best_xg2_fit, holdout_df)

submission <- holdout_result %>%

select(uid,

`0-250000` = `.pred_0-250000`,

`250000-350000` = `.pred_250000-350000`,

`350000-450000` = `.pred_350000-450000`,

`450000-650000` = `.pred_450000-650000`,

`650000+` = `.pred_650000+`

)

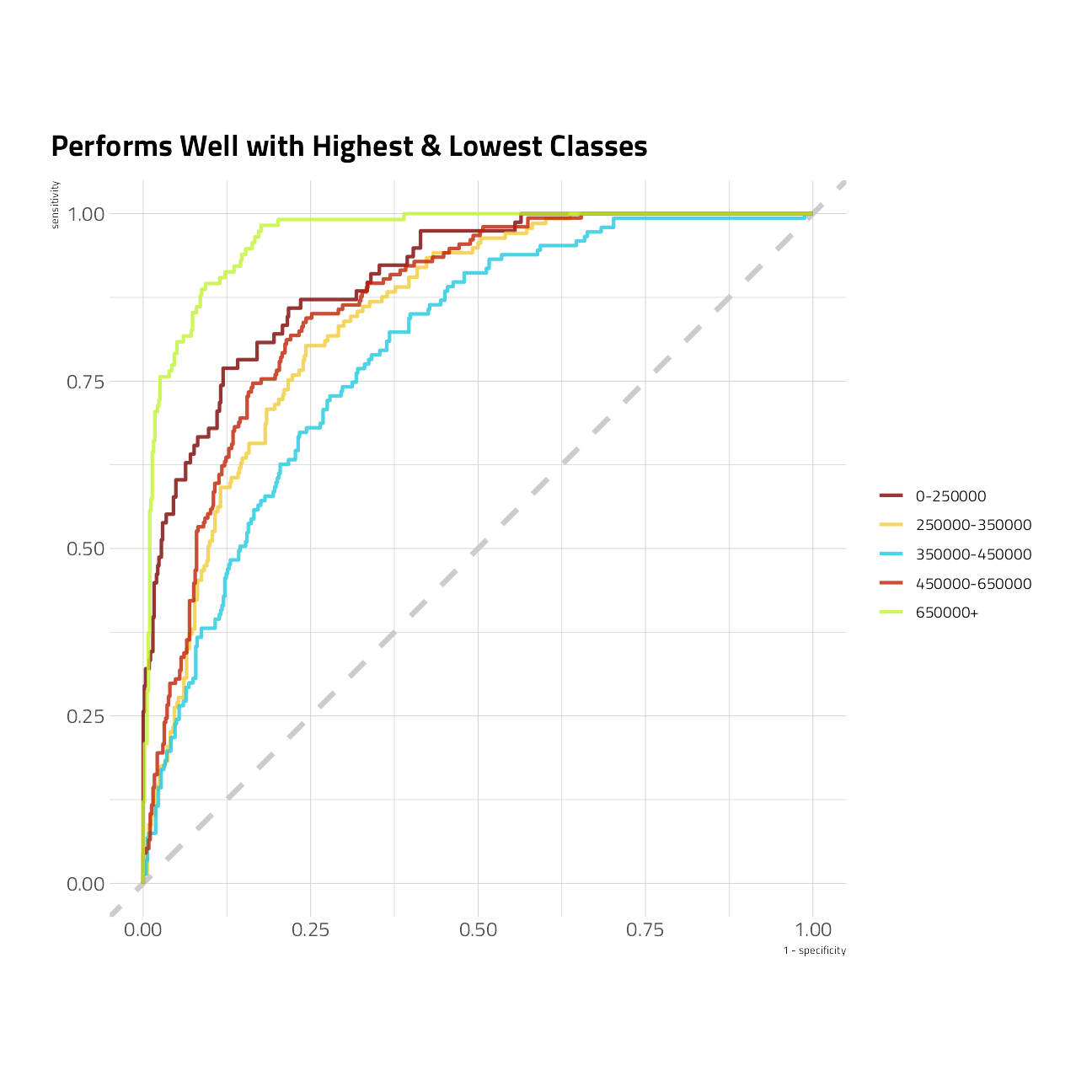

collect_predictions(xg2_last_fit) %>%

roc_curve(priceRange, `.pred_0-250000`:`.pred_650000+`) %>%

ggplot(aes(1 - specificity, sensitivity, color = .level)) +

geom_abline(lty = 2, color = "gray80", size = 1.5) +

geom_path(alpha = 0.8, size = 1) +

coord_equal() +

labs(

color = NULL,

title = "Performs Well with Highest & Lowest Classes"

)

We’re out of time. This will be as good as it gets, and our final submission.

shell(glue::glue('kaggle competitions submit -c { competition_name } -f { path_export } -m "XGboost with advanced preprocessing model 2"'))

sessionInfo()

R version 4.1.1 (2021-08-10)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 10 x64 (build 22000)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252

[2] LC_CTYPE=English_United States.1252

[3] LC_MONETARY=English_United States.1252

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] xgboost_1.4.1.1 rpart_4.1-15 vctrs_0.3.8 rlang_0.4.11

[5] vip_0.3.2 baguette_0.1.1 themis_0.1.4 tidytext_0.3.2

[9] tidylo_0.1.0 textrecipes_0.4.1 finetune_0.1.0 yardstick_0.0.8

[13] workflowsets_0.1.0 workflows_0.2.3 tune_0.1.6 rsample_0.1.0

[17] recipes_0.1.17 parsnip_0.1.7.900 modeldata_0.1.1 infer_1.0.0

[21] dials_0.0.10 scales_1.1.1 broom_0.7.9 tidymodels_0.1.4

[25] patchwork_1.1.1 lubridate_1.8.0 hrbrthemes_0.8.0 forcats_0.5.1

[29] stringr_1.4.0 dplyr_1.0.7 purrr_0.3.4 readr_2.0.2

[33] tidyr_1.1.4 tibble_3.1.4 ggplot2_3.3.5 tidyverse_1.3.1

[37] workflowr_1.6.2

loaded via a namespace (and not attached):

[1] utf8_1.2.2 R.utils_2.11.0 tidyselect_1.1.1

[4] grid_4.1.1 pROC_1.18.0 munsell_0.5.0

[7] codetools_0.2-18 ragg_1.1.3 future_1.22.1

[10] withr_2.4.2 colorspace_2.0-2 highr_0.9

[13] knitr_1.36 rstudioapi_0.13 Rttf2pt1_1.3.9

[16] listenv_0.8.0 labeling_0.4.2 git2r_0.28.0

[19] TeachingDemos_2.12 farver_2.1.0 bit64_4.0.5

[22] DiceDesign_1.9 rprojroot_2.0.2 mlr_2.19.0

[25] parallelly_1.28.1 generics_0.1.0 ipred_0.9-12

[28] xfun_0.26 R6_2.5.1 doParallel_1.0.16

[31] arrow_5.0.0.2 lhs_1.1.3 cachem_1.0.6

[34] assertthat_0.2.1 promises_1.2.0.1 nnet_7.3-16

[37] gtable_0.3.0 Cubist_0.3.0 globals_0.14.0

[40] timeDate_3043.102 BBmisc_1.11 systemfonts_1.0.2

[43] splines_4.1.1 butcher_0.1.5 extrafontdb_1.0

[46] hexbin_1.28.2 earth_5.3.1 prismatic_1.0.0

[49] checkmate_2.0.0 yaml_2.2.1 reshape2_1.4.4

[52] modelr_0.1.8 backports_1.2.1 httpuv_1.6.3

[55] tokenizers_0.2.1 extrafont_0.17 usethis_2.0.1

[58] inum_1.0-4 tools_4.1.1 lava_1.6.10

[61] ellipsis_0.3.2 jquerylib_0.1.4 Rcpp_1.0.7

[64] plyr_1.8.6 parallelMap_1.5.1 ParamHelpers_1.14

[67] viridis_0.6.1 ggrepel_0.9.1 haven_2.4.3

[70] fs_1.5.0 here_1.0.1 furrr_0.2.3

[73] unbalanced_2.0 magrittr_2.0.1 data.table_1.14.2

[76] reprex_2.0.1 RANN_2.6.1 GPfit_1.0-8

[79] mvtnorm_1.1-3 SnowballC_0.7.0 whisker_0.4

[82] ROSE_0.0-4 R.cache_0.15.0 hms_1.1.1

[85] evaluate_0.14 readxl_1.3.1 gridExtra_2.3

[88] compiler_4.1.1 crayon_1.4.1 R.oo_1.24.0

[91] htmltools_0.5.2 later_1.3.0 tzdb_0.1.2

[94] Formula_1.2-4 libcoin_1.0-9 DBI_1.1.1

[97] dbplyr_2.1.1 MASS_7.3-54 Matrix_1.3-4

[100] cli_3.0.1 C50_0.1.5 R.methodsS3_1.8.1

[103] parallel_4.1.1 gower_0.2.2 pkgconfig_2.0.3

[106] xml2_1.3.2 foreach_1.5.1 bslib_0.3.1

[109] hardhat_0.1.6 plotmo_3.6.1 prodlim_2019.11.13

[112] rvest_1.0.1 janeaustenr_0.1.5 digest_0.6.28

[115] rmarkdown_2.11 cellranger_1.1.0 fastmatch_1.1-3

[118] gdtools_0.2.3 lifecycle_1.0.1 jsonlite_1.7.2

[121] viridisLite_0.4.0 fansi_0.5.0 pillar_1.6.3

[124] lattice_0.20-44 fastmap_1.1.0 httr_1.4.2

[127] plotrix_3.8-2 survival_3.2-11 glue_1.4.2

[130] conflicted_1.0.4 FNN_1.1.3 iterators_1.0.13

[133] bit_4.0.4 class_7.3-19 stringi_1.7.5

[136] sass_0.4.0 rematch2_2.1.2 textshaping_0.3.5

[139] partykit_1.2-15 styler_1.6.2 future.apply_1.8.1

dCIpICU+JQ0KICBzZXRfbW9kZSgiY2xhc3NpZmljYXRpb24iKSkNCg0KYGBgDQoNCiMjIFR1bmluZyBhbmQgUGVyZm9ybWFuY2UNCg0KYGBge3IgcmFjZSBncmlkIHhnYm9vc3QgdHdvfQ0KDQpyYWNlX2Fub3ZhX2dyaWQgPC0NCiAgZ3JpZF9sYXRpbl9oeXBlcmN1YmUoDQogICAgbXRyeShyYW5nZSA9IGMoNDAsNjYpKSwNCiAgICBtaW5fbihyYW5nZSA9IGMoOCwgMjApKSwNCiAgICBzaXplID0gMjANCiAgKQ0KYGBgDQoNCmBgYHtyIHJhY2UgZ3JpZCB4Z2Jvb3N0IHR3byBub2V2YWwsIGV2YWw9RkFMU0V9DQpzeXN0ZW0udGltZSgNCg0KY3ZfcmVzX3hnYm9vc3QgPC0NCiAgd29ya2Zsb3coKSAlPiUgDQogIGFkZF9yZWNpcGUoeGdib29zdF9yZWMpICU+JSANCiAgYWRkX21vZGVsKHhnYm9vc3Rfc3BlYykgJT4lIA0KICB0dW5lX3JhY2VfYW5vdmEoICAgIA0KICAgIHJlc2FtcGxlcyA9IGZvbGRzLA0KICAgIGdyaWQgPSByYWNlX2Fub3ZhX2dyaWQsDQogICAgY29udHJvbCA9IGNvbnRyb2xfcmFjZSh2ZXJib3NlX2VsaW0gPSBUUlVFLA0KICAgICAgICAgICAgICAgICAgICAgICAgICAgcGFyYWxsZWxfb3ZlciA9ICJyZXNhbXBsZXMiLA0KICAgICAgICAgICAgICAgICAgICAgICAgICAgc2F2ZV9wcmVkID0gVFJVRSksDQogICAgbWV0cmljcyA9IG1zZXQNCiAgICApDQoNCikNCmBgYA0KDQpgYGB7ciByYWNlIGdyaWQgeGdib29zdCB0d28gbm9pbmNsdWRlLCBpbmNsdWRlPUZBTFNFfQ0KaWYgKGZpbGUuZXhpc3RzKGhlcmU6OmhlcmUoImRhdGEiLCJhdXN0aW5Ib21lVmFsdWUyLnJkcyIpKSkgew0KY3ZfcmVzX3hnYm9vc3QgPC0gcmVhZF9yZHMoaGVyZTo6aGVyZSgiZGF0YSIsImF1c3RpbkhvbWVWYWx1ZTIucmRzIikpDQp9IGVsc2Ugew0Kc3lzdGVtLnRpbWUoDQoNCmN2X3Jlc194Z2Jvb3N0IDwtDQogIHdvcmtmbG93KCkgJT4lIA0KICBhZGRfcmVjaXBlKHhnYm9vc3RfcmVjKSAlPiUgDQogIGFkZF9tb2RlbCh4Z2Jvb3N0X3NwZWMpICU+JSANCiAgdHVuZV9yYWNlX2Fub3ZhKCAgICANCiAgICByZXNhbXBsZXMgPSBmb2xkcywNCiAgICBncmlkID0gcmFjZV9hbm92YV9ncmlkLA0KICAgIGNvbnRyb2wgPSBjb250cm9sX3JhY2UodmVyYm9zZV9lbGltID0gVFJVRSwNCiAgICAgICAgICAgICAgICAgICAgICAgICAgIHBhcmFsbGVsX292ZXIgPSAicmVzYW1wbGVzIiwNCiAgICAgICAgICAgICAgICAgICAgICAgICAgIHNhdmVfcHJlZCA9IFRSVUUpLA0KICAgIG1ldHJpY3MgPSBtc2V0DQogICAgKQ0KDQopDQp3cml0ZV9yZHMoY3ZfcmVzX3hnYm9vc3QsIGhlcmU6OmhlcmUoImRhdGEiLCJhdXN0aW5Ib21lVmFsdWUyLnJkcyIpKQ0KfQ0KYGBgDQoNCldlIGNhbiB2aXN1YWxpemUgaG93IHRoZSBwb3NzaWJsZSBwYXJhbWV0ZXIgY29tYmluYXRpb25zIHdlIHRyaWVkIGRpZCBkdXJpbmcgdGhlIOKAnHJhY2Uu4oCdIE5vdGljZSBob3cgd2Ugc2F2ZWQgYSBUT04gb2YgdGltZSBieSBub3QgZXZhbHVhdGluZyB0aGUgcGFyYW1ldGVyIGNvbWJp
bmF0aW9ucyB0aGF0IHdlcmUgY2xlYXJseSBkb2luZyBwb29ybHkgb24gYWxsIHRoZSByZXNhbXBsZXM7IHdlIG9ubHkga2VwdCBnb2luZyB3aXRoIHRoZSBnb29kIHBhcmFtZXRlciBjb21iaW5hdGlvbnMuDQoNCmBgYHtyfQ0KcGxvdF9yYWNlKGN2X3Jlc194Z2Jvb3N0KQ0KYGBgDQoNCkxldCdzIGxvb2sgYXQgdGhlIHRvcCByZXN1bHRzDQoNCmBgYHtyfQ0KYXV0b3Bsb3QoY3ZfcmVzX3hnYm9vc3QpICsNCiAgdGhlbWUoc3RyaXAudGV4dC54ID0gZWxlbWVudF90ZXh0KHNpemUgPSAxMi8ucHQpKQ0KDQpzaG93X2Jlc3QoY3ZfcmVzX3hnYm9vc3QsDQogICAgICAgICAgbWV0cmljID0gIm1uX2xvZ19sb3NzIikNCg0KYGBgDQoNCkknbSBub3QgbWFraW5nIG11Y2ggcHJvZ3Jlc3MgaGVyZSBpbiBpbXByb3ZpbmcgbG9nbG9zcy4NCg0KYGBge3IgeGdib29zdCB0d28gbGFzdCBmaXR9DQpiZXN0X3hnMl93ZiA8LSAgd29ya2Zsb3coKSAlPiUgDQogIGFkZF9yZWNpcGUoeGdib29zdF9yZWMpICU+JSANCiAgYWRkX21vZGVsKHhnYm9vc3Rfc3BlYykgJT4lIA0KICBmaW5hbGl6ZV93b3JrZmxvdyhzZWxlY3RfYmVzdChjdl9yZXNfeGdib29zdCwgbWV0cmljID0gIm1uX2xvZ19sb3NzIikpIA0KDQp4ZzJfbGFzdF9maXQgPC0gbGFzdF9maXQoYmVzdF94ZzJfd2YsIHNwbGl0KQ0KDQpjb2xsZWN0X3ByZWRpY3Rpb25zKHhnMl9sYXN0X2ZpdCkgJT4lIA0KICBjb25mX21hdChwcmljZVJhbmdlLCAucHJlZF9jbGFzcykgJT4lIA0KICBhdXRvcGxvdCh0eXBlID0gImhlYXRtYXAiKSANCg0KY29sbGVjdF9wcmVkaWN0aW9ucyh4ZzJfbGFzdF9maXQpICU+JSANCiAgcm9jX2N1cnZlKHByaWNlUmFuZ2UsIGAucHJlZF8wLTI1MDAwMGA6YC5wcmVkXzY1MDAwMCtgKSAlPiUgDQogIGdncGxvdChhZXMoMSAtIHNwZWNpZmljaXR5LCBzZW5zaXRpdml0eSwgY29sb3IgPSAubGV2ZWwpKSArDQogIGdlb21fcGF0aChhbHBoYSA9IDAuOCwgc2l6ZSA9IDEuMikgKw0KICBjb29yZF9lcXVhbCgpICsNCiAgbGFicyhjb2xvciA9IE5VTEwpDQoNCmNvbGxlY3RfcHJlZGljdGlvbnMoeGcyX2xhc3RfZml0KSAlPiUgDQogIG1uX2xvZ19sb3NzKHByaWNlUmFuZ2UsIGAucHJlZF8wLTI1MDAwMGA6YC5wcmVkXzY1MDAwMCtgKQ0KDQpgYGANCg0KQW5kIHRoZSB2YXJpYWJsZSBpbXBvcnRhbmNlDQoNCmBgYHtyfQ0KZXh0cmFjdF93b3JrZmxvdyh4ZzJfbGFzdF9maXQpICU+JSANCiAgZXh0cmFjdF9maXRfcGFyc25pcCgpICU+JSANCiAgdmlwKGdlb20gPSAicG9pbnQiLCBudW1fZmVhdHVyZXMgPSAxNSkNCg0KYmVzdF94ZzJfZml0IDwtIGZpdChiZXN0X3hnMl93ZiwgZGF0YSA9IHRyYWluX2RmKQ0KDQpgYGANCg0KU3RyYW5nZSB0aGF0IHRoaXMgbW9kZWwgY2hhbmdlcyB1cCB2YXJpYWJsZSBpbXBvcnRhbmNlIHlldCBhZ2Fpbi4gTGV0J3Mgc3VibWl0IHRvIEthZ2dsZS4NCg0KYGBge3IgeGdib29zdCB0d28gZml0LCBldmFsID0gRkFMU0V9DQoNCmhvbGRvdXRfcmVzdWx0
IDwtIGF1Z21lbnQoYmVzdF94ZzJfZml0LCBob2xkb3V0X2RmKQ0KDQpzdWJtaXNzaW9uIDwtIGhvbGRvdXRfcmVzdWx0ICU+JSANCiAgICBzZWxlY3QodWlkLCBgMC0yNTAwMDBgID0gYC5wcmVkXzAtMjUwMDAwYCwNCiAgICAgICAgICAgYDI1MDAwMC0zNTAwMDBgID0gYC5wcmVkXzI1MDAwMC0zNTAwMDBgLA0KICAgICAgICAgICBgMzUwMDAwLTQ1MDAwMGAgPSBgLnByZWRfMzUwMDAwLTQ1MDAwMGAsDQogICAgICAgICAgIGA0NTAwMDAtNjUwMDAwYCA9IGAucHJlZF80NTAwMDAtNjUwMDAwYCwNCiAgICAgICAgICAgYDY1MDAwMCtgID0gYC5wcmVkXzY1MDAwMCtgKQ0KYGBgDQoNCiMgey19DQoNCmBgYHtyLCBmaWcuYXNwPTF9DQpjb2xsZWN0X3ByZWRpY3Rpb25zKHhnMl9sYXN0X2ZpdCkgJT4lDQogIHJvY19jdXJ2ZShwcmljZVJhbmdlLCBgLnByZWRfMC0yNTAwMDBgOmAucHJlZF82NTAwMDArYCkgJT4lDQogIGdncGxvdChhZXMoMSAtIHNwZWNpZmljaXR5LCBzZW5zaXRpdml0eSwgY29sb3IgPSAubGV2ZWwpKSArDQogIGdlb21fYWJsaW5lKGx0eSA9IDIsIGNvbG9yID0gImdyYXk4MCIsIHNpemUgPSAxLjUpICsNCiAgZ2VvbV9wYXRoKGFscGhhID0gMC44LCBzaXplID0gMSkgKw0KICBjb29yZF9lcXVhbCgpICsNCiAgbGFicyhjb2xvciA9IE5VTEwsDQogICAgICAgdGl0bGUgPSAiUGVyZm9ybXMgV2VsbCB3aXRoIEhpZ2hlc3QgJiBMb3dlc3QgQ2xhc3NlcyIpDQpgYGANCg0KV2UncmUgb3V0IG9mIHRpbWUuIFRoaXMgd2lsbCBiZSBhcyBnb29kIGFzIGl0IGdldHMsIGFuZCBvdXIgZmluYWwgc3VibWlzc2lvbi4NCg0KYGBge3IgcG9zdCBjc3YgeGdib29zdDIsIGV2YWwgPSBGQUxTRX0NCnNoZWxsKGdsdWU6OmdsdWUoJ2thZ2dsZSBjb21wZXRpdGlvbnMgc3VibWl0IC1jIHsgY29tcGV0aXRpb25fbmFtZSB9IC1mIHsgcGF0aF9leHBvcnQgfSAtbSAiWEdib29zdCB3aXRoIGFkdmFuY2VkIHByZXByb2Nlc3NpbmcgbW9kZWwgMiInKSkNCmBgYA0KDQoNCg==