Astronaut Missions

Jim Gruman

July 15, 2020

Last updated: 2021-09-24

Checks: 7 0

Knit directory: myTidyTuesday/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20210907) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version 40f1f61. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: .Rproj.user/

Ignored: catboost_info/

Ignored: data/2021-09-08/

Ignored: data/CNHI_Excel_Chart.xlsx

Ignored: data/CommunityTreemap.jpeg

Ignored: data/Community_Roles.jpeg

Ignored: data/YammerDigitalDataScienceMembership.xlsx

Ignored: data/acs_poverty.rds

Ignored: data/fmhpi.rds

Ignored: data/grainstocks.rds

Ignored: data/hike_data.rds

Ignored: data/us_states.rds

Ignored: data/us_states_hexgrid.geojson

Ignored: data/weatherstats_toronto_daily.csv

Untracked files:

Untracked: code/YammerReach.R

Untracked: code/work list batch targets.R

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/Astronaut.Rmd) and HTML (docs/Astronaut.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |

|---|---|---|---|---|

| Rmd | 40f1f61 | opus1993 | 2021-09-24 | adopt viridis H palette and add the tweet |

This week’s R4DS Online Learning Community #TidyTuesday event is Astronaut missions. The goal of TidyTuesday is to apply your R skills, get feedback, explore other’s work, and connect with the greater #RStats community!

Load the weekly Data

Download the weekly data and make available in the tt object.

tt <- tidytuesdayR::tt_load("2020-07-14")

Downloading file 1 of 1: `astronauts.csv`Readme

Take a look at the readme for the weekly data to get insight on the dataset. This includes a data dictionary, source, and a link to an article on the data.

Glimpse Data

Take an initial look at the format of the data available.

astronauts <- tt$astronauts

astronauts %>%

count(in_orbit, sort = TRUE) %>%

dplyr::slice(1:10) %>%

knitr::kable()| in_orbit | n |

|---|---|

| ISS | 174 |

| Mir | 71 |

| Salyut 6 | 24 |

| Salyut 7 | 24 |

| STS-42 | 8 |

| explosion | 7 |

| STS-103 | 7 |

| STS-107 | 7 |

| STS-109 | 7 |

| STS-110 | 7 |

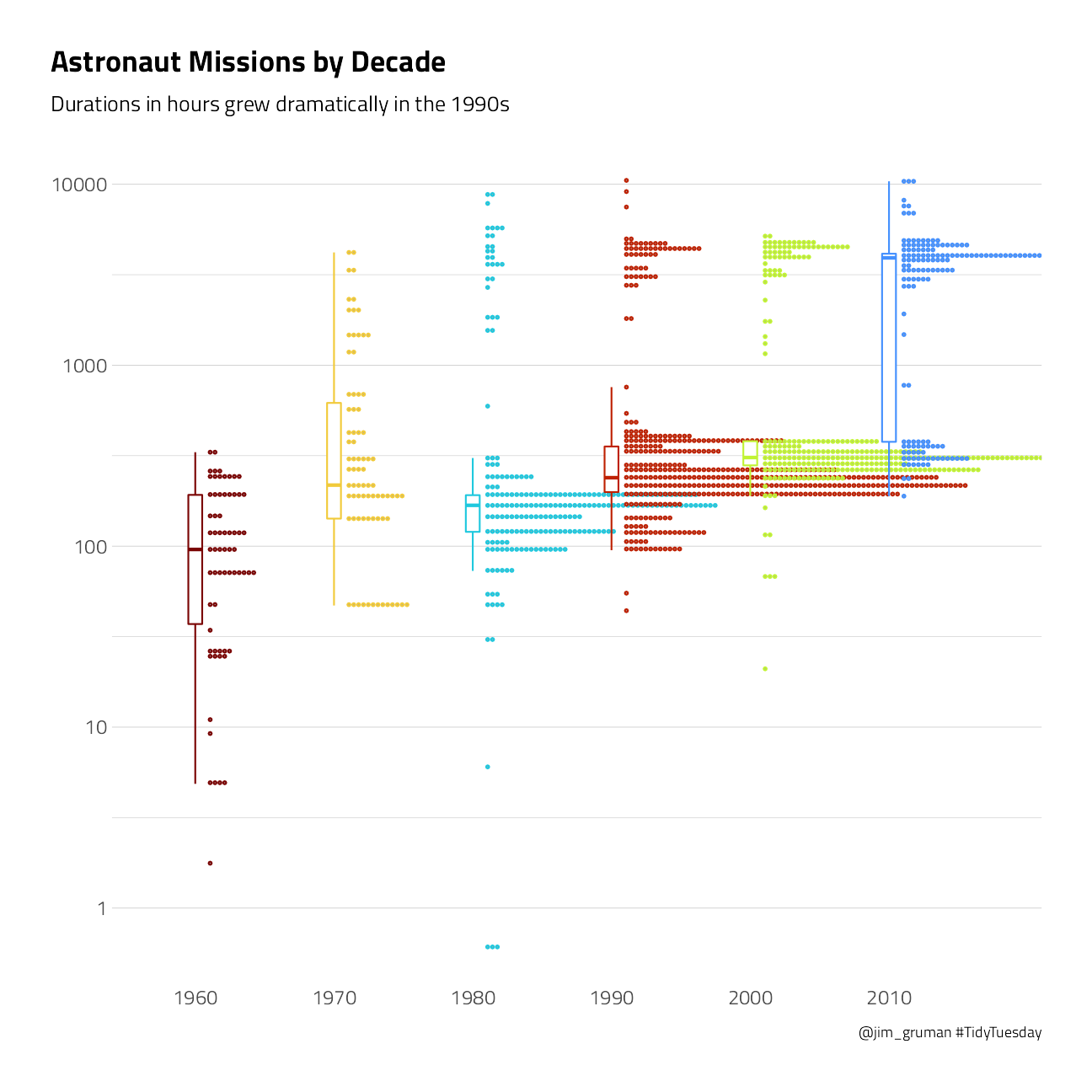

How has the duration of missions changed over time?

p1 <- astronauts %>%

mutate(

year_of_mission = 10 * (year_of_mission %/% 10),

year_of_mission = factor(year_of_mission)

) %>%

ggplot(aes(year_of_mission,

hours_mission,

color = year_of_mission

)) +

ggdist::stat_dots(

side = "top",

justification = -0.1,

binwidth = 0.025,

show.legend = FALSE

) +

geom_boxplot(

width = 0.1,

outlier.shape = NA,

show.legend = FALSE

) +

scale_y_log10() +

labs(

x = NULL, y = NULL,

subtitle = "Durations in hours grew dramatically in the 1990s",

title = "Astronaut Missions by Decade",

caption = "@jim_gruman #TidyTuesday"

) +

theme(panel.grid.major.x = element_blank())

p1

This duration is what we will to build a model to predict, using the other information in this per-astronaut-per-mission dataset. Let’s get ready for modeling next, by bucketing some of the spacecraft together and taking the logarithm of the mission length.

astronauts_df <- astronauts %>%

select(

name,

mission_title,

hours_mission,

military_civilian,

occupation,

year_of_mission,

in_orbit

) %>%

mutate(in_orbit = case_when(

str_detect(in_orbit, "^Salyut") ~ "Salyut",

str_detect(in_orbit, "^STS") ~ "STS",

TRUE ~ in_orbit

)) %>%

filter(hours_mission > 0) %>%

mutate(hours_mission = log(hours_mission)) %>%

na.omit()Julia Silge advises that it may make more sense to perform transformations like taking the logarithm of the outcome during data cleaning, before feature engineering and using any tidymodels packages like recipes. This kind of transformation is deterministic and can cause problems for tuning and resampling. OK…

Build a Model

We can start by loading the tidymodels metapackage, and splitting our data into training and testing sets.

astro_split <- initial_split(astronauts_df,

strata = hours_mission

)

astro_train <- training(astro_split)

astro_test <- testing(astro_split)Next, let’s preprocess our data to get it ready for modeling.

astro_recipe <- recipe(hours_mission ~ ., data = astro_train) %>%

update_role(name, mission_title, new_role = "id") %>%

step_other(occupation, in_orbit,

threshold = 0.005, other = "Other"

) %>%

step_dummy(all_nominal(), -has_role("id"))Let’s walk through the steps in this recipe.

First, we must tell the recipe() what our model is going to be (using a formula here) and what data we are using.

Next, update the role for the two columns that are not predictors or outcome. This way, we can keep them in the data for identification later.

There are a lot of different occupations and spacecraft in this dataset, so let’s collapse some of the less frequently occurring levels into an “Other” category, for each predictor.

Finally, we can create indicator variables.

We’re going to use this recipe in a workflow() so we don’t need to stress about whether to prep() or not.

astro_wf <- workflow() %>%

add_recipe(astro_recipe)

astro_wf== Workflow ====================================================================

Preprocessor: Recipe

Model: None

-- Preprocessor ----------------------------------------------------------------

2 Recipe Steps

* step_other()

* step_dummy()For this analysis, we are going to build a bagging, i.e. bootstrap aggregating, model. This is an ensembling and model averaging method that:

improves accuracy and stability

reduces overfitting and variance

In tidymodels, you can create bagging ensemble models with baguette, a parsnip-adjacent package. The baguette functions create new bootstrap training sets by sampling with replacement and then fit a model to each new training set. These models are combined by averaging the predictions for the regression case, like what we have here (by voting, for classification).

Let’s make two bagged models, one with decision trees and one with MARS models.

tree_spec <- bag_tree() %>%

set_engine("rpart", times = 25) %>%

set_mode("regression")

tree_specBagged Decision Tree Model Specification (regression)

Main Arguments:

cost_complexity = 0

min_n = 2

Engine-Specific Arguments:

times = 25

Computational engine: rpart mars_spec <- bag_mars() %>%

set_engine("earth", times = 25) %>%

set_mode("regression")

mars_specBagged MARS Model Specification (regression)

Engine-Specific Arguments:

times = 25

Computational engine: earth Let’s fit these models to the training data.

tree_rs <- astro_wf %>%

add_model(tree_spec) %>%

fit(astro_train)

tree_rs== Workflow [trained] ==========================================================

Preprocessor: Recipe

Model: bag_tree()

-- Preprocessor ----------------------------------------------------------------

2 Recipe Steps

* step_other()

* step_dummy()

-- Model -----------------------------------------------------------------------

Bagged CART (regression with 25 members)

Variable importance scores include:

# A tibble: 13 x 4

term value std.error used

<chr> <dbl> <dbl> <int>

1 year_of_mission 802. 31.7 25

2 in_orbit_Other 591. 50.4 25

3 in_orbit_STS 310. 25.9 25

4 occupation_flight.engineer 264. 27.6 25

5 in_orbit_Mir 118. 12.1 25

6 occupation_MSP 103. 11.8 25

7 occupation_pilot 102. 17.9 25

8 in_orbit_Salyut 96.9 7.55 25

9 military_civilian_military 43.6 3.47 25

10 occupation_Other 32.7 2.58 25

11 occupation_PSP 23.8 4.37 25

12 occupation_Other..space.tourist. 19.3 3.14 23

13 in_orbit_Mir.EP 13.5 1.68 25mars_rs <- astro_wf %>%

add_model(mars_spec) %>%

fit(astro_train)

mars_rs== Workflow [trained] ==========================================================

Preprocessor: Recipe

Model: bag_mars()

-- Preprocessor ----------------------------------------------------------------

2 Recipe Steps

* step_other()

* step_dummy()

-- Model -----------------------------------------------------------------------

Bagged MARS (regression with 25 members)

Variable importance scores include:

# A tibble: 12 x 4

term value std.error used

<chr> <dbl> <dbl> <int>

1 in_orbit_STS 100 0 25

2 in_orbit_Other 92.2 1.66 25

3 year_of_mission 65.1 4.19 25

4 in_orbit_Salyut 26.2 1.85 24

5 in_orbit_Mir.EP 24.4 1.54 25

6 occupation_Other 6.57 1.58 16

7 occupation_flight.engineer 3.80 6.78 4

8 military_civilian_military 3.24 0.903 10

9 occupation_pilot 1.11 0.387 6

10 occupation_PSP 0.880 0.856 3

11 occupation_MSP 0.471 0.312 2

12 in_orbit_Mir 0.184 0 1The models return aggregated variable importance scores, and we can see that the spacecraft and year are importance in both models.

Evaluate the Models

Let’s evaluate how well these two models did by evaluating performance on the test data.

test_rs <- astro_test %>%

bind_cols(predict(tree_rs, astro_test)) %>%

rename(.pred_tree = .pred) %>%

bind_cols(predict(mars_rs, astro_test)) %>%

rename(.pred_mars = .pred)

test_rs# A tibble: 318 x 9

name mission_title hours_mission military_civili~ occupation year_of_mission

<chr> <chr> <dbl> <chr> <chr> <dbl>

1 Tito~ Vostok 2 3.22 military pilot 1961

2 Niko~ Vostok 3 4.54 military pilot 1962

3 Popo~ Vostok 4 4.26 military pilot 1962

4 Schi~ Mercury-Atla~ 2.22 military pilot 1962

5 Tere~ Vostok 6 4.26 military pilot 1963

6 Koma~ Voskhod 1 3.19 military commander 1964

7 Gris~ Gemini 3 1.58 military commander 1965

8 Staf~ gemini 6A 3.25 military pilot 1965

9 Cern~ Apollo 17 5.71 military commander 1972

10 Cunn~ Apollo 7 5.56 civilian pilot 1968

# ... with 308 more rows, and 3 more variables: in_orbit <chr>,

# .pred_tree <dbl>, .pred_mars <dbl>We can use the yardstick::metrics() function for both sets of predictions.

test_rs %>%

metrics(hours_mission, .pred_tree)# A tibble: 3 x 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 rmse standard 0.778

2 rsq standard 0.716

3 mae standard 0.393test_rs %>%

metrics(hours_mission, .pred_mars)# A tibble: 3 x 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 rmse standard 0.712

2 rsq standard 0.760

3 mae standard 0.383Both models performed pretty similarly.

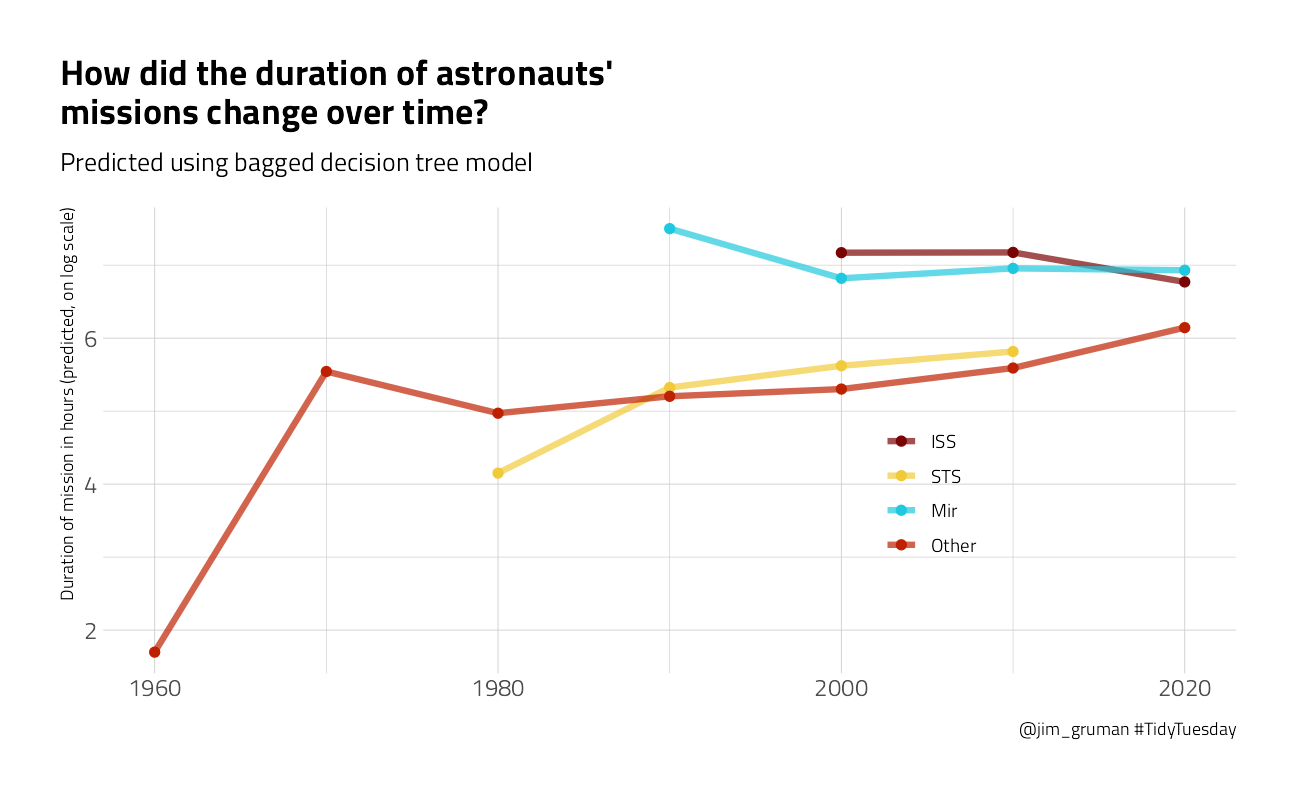

Let’s make some “new” astronauts to understand the kinds of predictions our bagged tree model is making.

new_astronauts <-

tidyr::crossing(

in_orbit = fct_inorder(c("ISS", "STS", "Mir", "Other")),

military_civilian = "civilian",

occupation = "Other",

year_of_mission = seq(1960, 2020, by = 10),

name = "id", mission_title = "id"

) %>%

filter(

!(in_orbit == "ISS" & year_of_mission < 2000),

!(in_orbit == "Mir" & year_of_mission < 1990),

!(in_orbit == "STS" & year_of_mission > 2010),

!(in_orbit == "STS" & year_of_mission < 1980)

)Let’s start with the decision tree model.

p2 <- new_astronauts %>%

bind_cols(predict(tree_rs, new_astronauts)) %>%

ggplot(aes(year_of_mission, .pred, color = in_orbit)) +

geom_line(size = 1.5, alpha = 0.7) +

geom_point(size = 2) +

labs(

x = NULL, y = "Duration of mission in hours (predicted, on log scale)",

color = NULL, title = "How did the duration of astronauts' \nmissions change over time?",

subtitle = "Predicted using bagged decision tree model",

caption = "@jim_gruman #TidyTuesday"

) +

theme(legend.position = c(0.73, 0.4))

p2

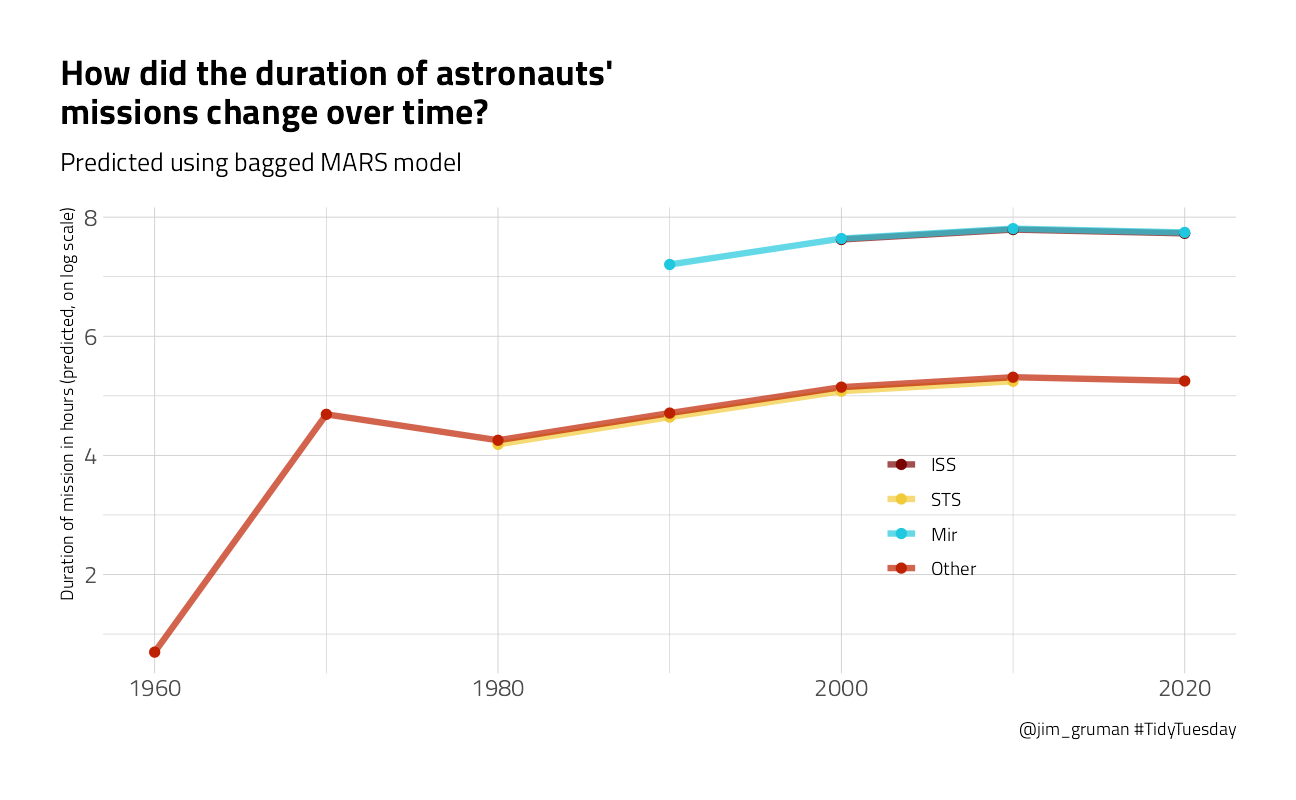

What about the MARS model?

p3 <- new_astronauts %>%

bind_cols(predict(mars_rs, new_astronauts)) %>%

ggplot(aes(year_of_mission, .pred, color = in_orbit)) +

geom_line(size = 1.5, alpha = 0.7) +

geom_point(size = 2) +

labs(

x = NULL, y = "Duration of mission in hours (predicted, on log scale)",

color = NULL, title = "How did the duration of astronauts' \nmissions change over time?",

subtitle = "Predicted using bagged MARS model",

caption = "@jim_gruman #TidyTuesday"

) +

theme(legend.position = c(0.73, 0.35))

p3

You can really get a sense of how these two kinds of models work from the differences in these plots (tree vs. splines with knots), but from both, we can see that missions to space stations are longer, and missions in that “Other” category change characteristics over time pretty dramatically.

Finally, my tweet:

tweetrmd::include_tweet("https://twitter.com/jim_gruman/status/1283799248212054020")In a time crunch this week for the #TidyTuesday on Astronauts, so I shamelessly followed @juliasilge's blog post on {baguette} https://t.co/xbFbCOYFQehttps://t.co/3YckY97hLB pic.twitter.com/M3KHILkvkh

— Jim Gruman📚🚵♂️⚙ (@jim_gruman) July 16, 2020

sessionInfo()R version 4.1.1 (2021-08-10)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 10 x64 (build 19043)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252

[2] LC_CTYPE=English_United States.1252

[3] LC_MONETARY=English_United States.1252

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] baguette_0.1.1 yardstick_0.0.8 workflowsets_0.1.0 workflows_0.2.3

[5] tune_0.1.6 rsample_0.1.0 recipes_0.1.16 parsnip_0.1.7.900

[9] modeldata_0.1.1 infer_1.0.0 dials_0.0.10 scales_1.1.1

[13] broom_0.7.9 tidymodels_0.1.3 forcats_0.5.1 stringr_1.4.0

[17] dplyr_1.0.7 purrr_0.3.4 readr_2.0.1 tidyr_1.1.3

[21] tibble_3.1.4 ggplot2_3.3.5 tidyverse_1.3.1 workflowr_1.6.2

loaded via a namespace (and not attached):

[1] readxl_1.3.1 backports_1.2.1 systemfonts_1.0.2

[4] selectr_0.4-2 plyr_1.8.6 tidytuesdayR_1.0.1

[7] splines_4.1.1 listenv_0.8.0 usethis_2.0.1

[10] butcher_0.1.5 digest_0.6.27 foreach_1.5.1

[13] htmltools_0.5.2 viridis_0.6.1 earth_5.3.1

[16] fansi_0.5.0 magrittr_2.0.1 tzdb_0.1.2

[19] globals_0.14.0 modelr_0.1.8 gower_0.2.2

[22] extrafont_0.17 vroom_1.5.5 R.utils_2.10.1

[25] extrafontdb_1.0 hardhat_0.1.6 colorspace_2.0-2

[28] rvest_1.0.1 ggdist_3.0.0 textshaping_0.3.5

[31] haven_2.4.3 xfun_0.26 crayon_1.4.1

[34] jsonlite_1.7.2 libcoin_1.0-8 survival_3.2-11

[37] iterators_1.0.13 glue_1.4.2 gtable_0.3.0

[40] ipred_0.9-12 distributional_0.2.2 R.cache_0.15.0

[43] tweetrmd_0.0.9 Rttf2pt1_1.3.9 future.apply_1.8.1

[46] mvtnorm_1.1-2 DBI_1.1.1 Rcpp_1.0.7

[49] plotrix_3.8-2 viridisLite_0.4.0 Cubist_0.3.0

[52] bit_4.0.4 GPfit_1.0-8 Formula_1.2-4

[55] lava_1.6.10 prodlim_2019.11.13 httr_1.4.2

[58] ellipsis_0.3.2 farver_2.1.0 R.methodsS3_1.8.1

[61] pkgconfig_2.0.3 nnet_7.3-16 sass_0.4.0

[64] dbplyr_2.1.1 utf8_1.2.2 here_1.0.1

[67] labeling_0.4.2 tidyselect_1.1.1 rlang_0.4.11

[70] DiceDesign_1.9 reshape2_1.4.4 later_1.3.0

[73] cachem_1.0.6 munsell_0.5.0 TeachingDemos_2.12

[76] cellranger_1.1.0 tools_4.1.1 cli_3.0.1

[79] generics_0.1.0 evaluate_0.14 fastmap_1.1.0

[82] ragg_1.1.3 yaml_2.2.1 bit64_4.0.5

[85] knitr_1.34 fs_1.5.0 future_1.22.1

[88] whisker_0.4 R.oo_1.24.0 xml2_1.3.2

[91] compiler_4.1.1 rstudioapi_0.13 curl_4.3.2

[94] reprex_2.0.1 lhs_1.1.3 bslib_0.3.0

[97] stringi_1.7.4 highr_0.9 plotmo_3.6.1

[100] gdtools_0.2.3 hrbrthemes_0.8.0 lattice_0.20-44

[103] Matrix_1.3-4 styler_1.6.1 conflicted_1.0.4

[106] vctrs_0.3.8 pillar_1.6.2 lifecycle_1.0.1

[109] furrr_0.2.3 jquerylib_0.1.4 httpuv_1.6.3

[112] R6_2.5.1 promises_1.2.0.1 gridExtra_2.3

[115] C50_0.1.5 parallelly_1.28.1 codetools_0.2-18

[118] MASS_7.3-54 assertthat_0.2.1 rprojroot_2.0.2

[121] withr_2.4.2 parallel_4.1.1 hms_1.1.0

[124] grid_4.1.1 rpart_4.1-15 timeDate_3043.102

[127] class_7.3-19 rmarkdown_2.11 inum_1.0-4

[130] git2r_0.28.0 partykit_1.2-15 pROC_1.18.0

[133] lubridate_1.7.10